Indexes

Indexes preprocess your files preparing them for search and store them in a document store of your choice. You can reuse indexes across your query pipelines. Learn how it works.

What's an Index

An index is a data structure that provides your pipelines with fast, efficient access to your large-scale datasets. Think of it as a book index that helps you find specific topics without reading the entire book. It's crucial for fast, efficient search, making it possible to handle large-scale datasets.

How Indexes Work

Indexes work on the files uploaded to Haystack Enterprise Platform. Indexes use configurable components connected together with each one performing a single task on your files, such as conversion, cleaning, or splitting. They clean the files and chunk them into smaller passages called documents. Then, they write the cleaned documents in a database called document store from which the pipeline retrieves them at query time.

Indexes are optional, meaning that if you have a query pipeline that queries files in an existing database, like Snowflake, or uses the model's knowledge, you don't need an index.

Indexes are specific to a workspace. Deleting a workspace deletes all the indexes in this workspace.

Document Stores

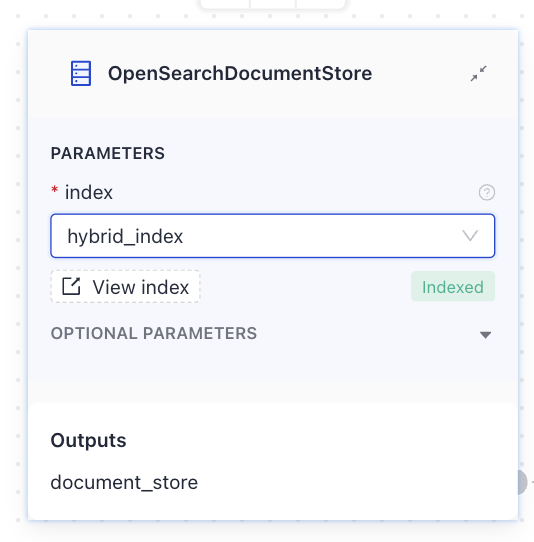

Document store is where your index writes the data and where your pipelines can then access them. When setting up a pipeline, you can connect it to a document store and choose an index directly from the Document Store component card.

For details, see Document Stores.

Indexing

The files in Haystack Enterprise Platform are indexed once when an index is enabled. New files uploaded after you enable an index are indexed individually and added to the enabled index.

File Updates

You can upload, delete, and modify files if your index is enabled. You can also update the metadata of individual files.

Changes apply only to the specific files you modify. For example, when you upload a file, only that file is indexed. Haystack Platform doesn't reindex all existing files.

Metadata Updates

When you update a file's metadata, Haystack Enterprise Platform does not reindex the file content. Instead, it follows these steps:

- It updates the metadata in the workspace index in OpenSearch.

- It notifies the index, which then updates any documents derived from the file.

Any changes to the files are queued and delayed to ensure the correct order.

Example

Let's say you perform these operations on your files:

- Upload file1.txt.

- Upload file2.txt.

- Delete file1.txt.

- Update metadata of file2.txt.

- Upload file3.txt.

Haystack Enterprise Platform processes it in the following order:

- Index file1.txt.

- Index file2.txt.

- Delete file1.txt.

- Update documents created from file2.txt with the new metadata.

- Index file3.txt

Only after the steps are completed and the number of pending tasks is 0, the changes are live and you can view them in Haystack Platform.

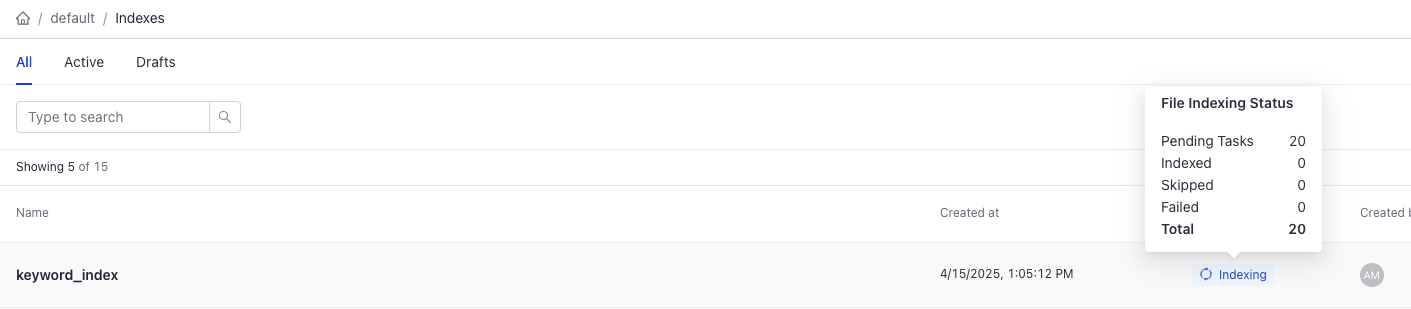

You can check the task status on the Indexes page. Hover your mouse over the status label:

You can also use the Get Index By Name endpoint to check the task status.

Core and Integration Indexes

Core indexes write files into the OpenSearchDocumentStore, which is the core document store of Haystack Enterprise Platform. This means Haystack Platform manages its infrastructure, authorization, and has access to the indexing information.

Integration indexes use one of the integrated document stores, such as Pinecone, Weaviate, or others. For these document stores, you must manage the infrastructure yourself. Haystack Enterprise Platform also doesn't have access to the indexing information. For details, see Document Stores.

Indexes and Pipelines

To run searches on files in Haystack Enterprise Platform, a pipeline must be connected to an index. You do this by adding a document store to a query pipeline and choosing the index you want this document store to use.

Multiple pipelines can use a single index; one pipeline can use multiple indexes. An index must be enabled to be used in a query pipeline.

Building Indexes

Haystack Enterprise Platform provides a set of curated and maintained index templates for various file types. You can use one of the templates to build your index or you can start from scratch.

Indexes are built the same way as query pipelines. You simply drag components to the canvas in Pipeline Builder and then connect them so that the output of the preceding component matches the input type of the next component.

For details, check How do pipelines work in Pipelines.

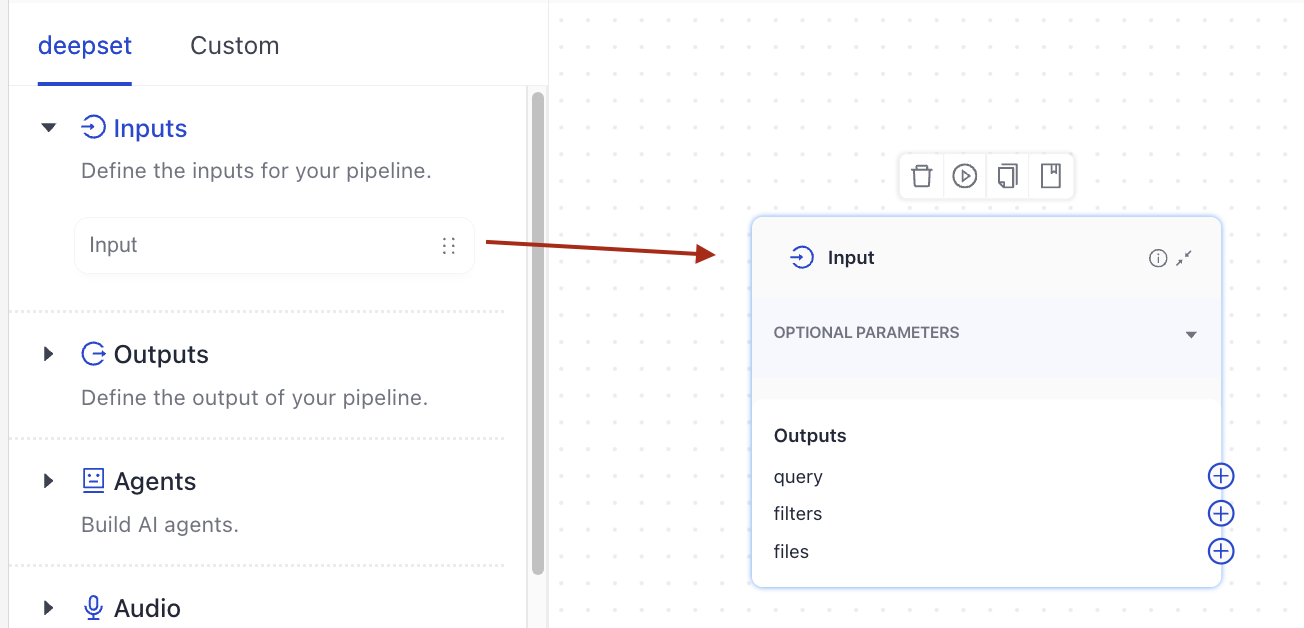

Inputs

Indexes always start with files as input. When in Pipeline Builder, add the Input component at the beginning of an index.

Outputs

Indexes return a list of Document objects as output, usually written into the document store by the DocumentWriter component, which is often the last component in an index.

Body

The index body defines what happens with the files you uploaded to Haystack Platform. It's up to you how you want to process your files. For hints and best practices, see PreProcessing Data with Pipeline Components.

Enabling Indexes

To start indexing, you enable an index from the Indexes page. An enabled index is in view-only mode, so you can't edit it. To make changes, you disable the index first. However, you can't disable an index that's used by a deployed pipeline. In this case, you can duplicate the index and update the copy.

To deploy a query pipeline, you must enable all indexes used by this pipeline.

Using Indexes in Query Pipelines

Indexes are linked to a document store, where documents are written and stored. Pipelines that work with your data include Retrievers, which fetch data from the document store. When setting up a Retriever, you need to connect it to a document store. After that, you can select an index from the document store card. This index is the one your query pipeline will use to search and retrieve data from the document store.

To use multiple indexes in a single query pipeline, create a separate document store for each index. Then, assign a retriever to each document store, since a retriever can only be connected to one document store at a time.

The Indexes Page

The Indexes page lists all indexes that exist in a workspace and their status. There are two tabs on the page:

- Active, for enabled indexes

- Drafts, for indexes that were saved but not yet enabled

The Index Details Page

Click an index name to open the Index Details Page where you can check:

- The index ID

- The status of files this index processes

- Details of pipelines connected to this index

- Index logs

- The index body

GPU Acceleration

Indexes use CPU by default. Turn on GPU support on the Settings tab when your index includes components that run better on a GPU, such as DoclingConverter, SentenceTransformersDocumentEmbedder, or custom components that use AI models.

When GPU support is on, the index checks whether a component needs a GPU and assigns one automatically. GPUs are only used when required and are not reserved for the entire index run.

If GPU support is off and your index includes components that rely on a GPU, those components run on the CPU instead. This can slow down processing and may cause timeouts, especially for larger or more complex indexes.

For details on how to enable GPU acceleration, see Enable GPU Acceleration for Indexes.

Index Status

- Not indexed: The pipeline is being deployed, but the files have not yet been indexed

- Indexing: Your files are being indexed. You can see how many files have already been indexed if you hover your mouse over the Indexing label.

- Indexed: Your pipeline is deployed, all the files are indexed, and you can use your pipeline for search.

- Partially indexed: At least one of the files wasn't indexed. This may be a problem with your file or a component in the pipeline. You can still run a search if at least some files were indexed. Check the index logs for details.

Was this page helpful?