Preprocessing Data with Pipeline Components

Learn how to prepare your data using the pipeline components available in Haystack Enterprise Platform. This page collects tips and guidelines for planning your preprocessing.

Indexes for Preprocessing

An index converts your files to documents, preprocesses them, and finally stores them in a document store. The query pipeline can then use the documents from the document store to resolve queries. For details, see Indexes.

Haystack Enterprise Platform offers preprocessing components that you can add to your index. When you enable the index, your files are automatically converted, split, and cleaned. Templates available in Haystack Enterprise Platform include indexes that preprocess TXT, PDF, MD, DOCX, PPTX, XLSX, XML, CSV, HTML, and JSON files out of the box. For other formats, you may need to preprocess your files outside of Haystack Enterprise Platform.

Integrations for Processing Data

Unstructured

You can use unstructured.io through UnstructuredFileConverter to preprocess your files. To learn how to do this, see Use Unstructured to Process Documents.

Azure Document Intelligence

Use AzureOCRDocumentConverter to take advantage of Azure Document Intelligence preprocessing services. For details, see Use Azure Document Intelligence.

How to Prepare Your Files

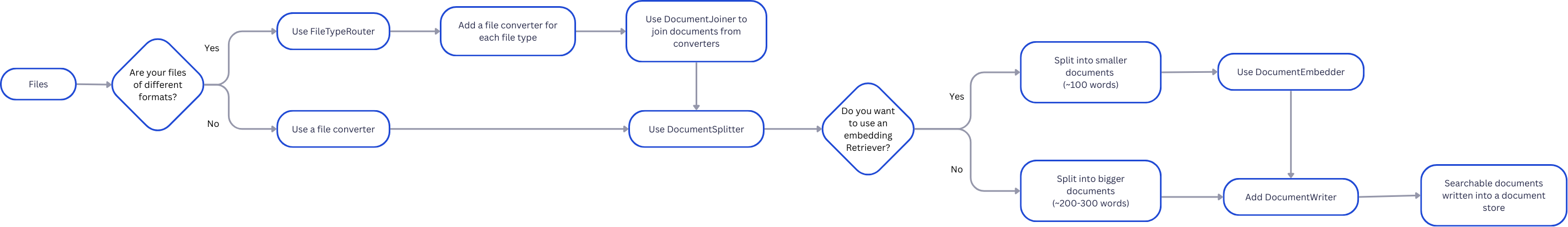

Here's an outline of how to plan file preprocessing:

Your files determine which components to use in the index:

- If all your files are of one type, use a file converter appropriate for handling this type as the first component in your index. For supported converters, see Converters.

- If you have multiple file types, use FileTypeRouter as the first component in your index and connect it to the converters for all file types you need. FileTypeRouter classifies your files based on their extension and sends them to the converter that can best handle them.

  In this case, you'll also need DocumentJoiner to join the output of multiple components and send it as a single output to a preprocessor, such as DocumentSplitter.
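
To make the routing and joining idea concrete, here is a minimal plain-Python sketch. It is not how FileTypeRouter or DocumentJoiner are implemented; the extension mapping, the converter labels, and the function names are all hypothetical and exist only for illustration.

```python
from collections import defaultdict
from pathlib import Path

# Hypothetical mapping from file extension to a converter label.
# The labels are placeholders for illustration, not component names.
CONVERTER_FOR_EXTENSION = {
    ".txt": "text_converter",
    ".md": "markdown_converter",
    ".pdf": "pdf_converter",
}


def route_by_extension(paths):
    """Group file paths by the converter that should handle them."""
    routed = defaultdict(list)
    for path in paths:
        extension = Path(path).suffix.lower()
        # Files without a matching converter go to a separate bucket.
        converter = CONVERTER_FOR_EXTENSION.get(extension, "unclassified")
        routed[converter].append(path)
    return dict(routed)


def join_documents(*document_lists):
    """Merge the outputs of several converters into a single list."""
    joined = []
    for documents in document_lists:
        joined.extend(documents)
    return joined


routed = route_by_extension(["a.txt", "b.PDF", "c.md", "d.zip"])
```

In this sketch, each bucket would go to its own converter, and `join_documents` would then merge the converter outputs so that a single preprocessor receives one list.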

The converter's task is to convert your files into documents. However, the documents you obtain this way may not be of the optimal length for the retriever you want to use and may still need cleaning up. Preprocessors, such as DocumentCleaner and DocumentSplitter, are the components that handle the cleaning and splitting of documents. They remove headers and footers, which helps retain the flow of sentences across pages, delete empty lines, and split your documents into smaller chunks.
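
The following plain-Python sketch illustrates why removing headers, footers, and empty lines helps sentences flow across pages. It assumes a deliberately simple rule (a line that appears on every page is a header or footer) and a hypothetical function name, `clean_pages`; the platform's components may use different logic.

```python
from collections import Counter


def clean_pages(pages):
    """Drop empty lines and lines repeated on every page, then join pages."""
    page_lines = [
        [line.strip() for line in page.splitlines() if line.strip()]
        for page in pages
    ]
    # Count on how many pages each distinct line appears.
    line_counts = Counter(line for lines in page_lines for line in set(lines))
    # Treat a line as a header or footer only if it appears on every page
    # and there is more than one page to compare.
    repeated = {
        line
        for line, count in line_counts.items()
        if len(pages) > 1 and count == len(pages)
    }
    kept = [line for lines in page_lines for line in lines if line not in repeated]
    return " ".join(kept)


pages = [
    "ACME Report\n\nThe quick brown fox\n",
    "ACME Report\njumps over the lazy dog.\n\n",
]
cleaned = clean_pages(pages)
print(cleaned)  # The quick brown fox jumps over the lazy dog.
```

Without the cleaning step, the repeated "ACME Report" header would sit in the middle of the sentence that spans the page break.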

Smaller documents speed up your pipeline. They're also optimal for vector retrievers, which often can't handle longer text passages. We recommend 100-word splits for vector retrievers. Keyword retrievers can work on slightly longer documents of around 200-300 words.
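
As a rough illustration of word-based splitting, the sketch below cuts a text into chunks of a fixed number of words. The function and its parameters (`split_length`, `split_overlap`) are assumptions made for this example and are not the DocumentSplitter implementation.

```python
def split_by_words(text, split_length=100, split_overlap=0):
    """Split text into chunks of at most split_length words."""
    if split_length <= 0:
        raise ValueError("split_length must be positive")
    if not 0 <= split_overlap < split_length:
        raise ValueError("split_overlap must be in [0, split_length)")

    words = text.split()
    step = split_length - split_overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + split_length]))
        # Stop once the current chunk reaches the end of the text.
        if start + split_length >= len(words):
            break
    return chunks


text = " ".join(f"w{i}" for i in range(250))
vector_chunks = split_by_words(text, split_length=100)
keyword_chunks = split_by_words(text, split_length=200)
```

Here the 250-word text yields three chunks at 100 words per chunk and two chunks at 200 words per chunk, which mirrors the difference between the settings suggested for vector and keyword retrievers.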

Use these suggestions as a starting point for your index. You may need to experiment with your settings to reach the optimal values for your use case.

Pipeline Components for Preprocessing

Components are flexible, and you can use them in any type of pipeline, but some are typically used for indexing. Use this table to choose the right components:

| Preprocessing Step | Component That Does It |
|---|---|
| Sort files by type and route them to the appropriate converters. | FileTypeRouter |
| Convert a file to a document object. You can choose a converter that matches your file types. For file types for which a converter is unavailable, we recommend preprocessing your files outside of Haystack Enterprise Platform. | Converters |
| Validate text language based on the ISO 639-1 format. | Converters |
| Remove numeric rows from tables. | Converters |
| Add metadata to the returned document. | Converters |
| Split long documents into smaller ones. | DocumentSplitter |
| Get rid of headers, footers, whitespace, and empty lines. | DocumentCleaner |
| Extract text and tables from PDF, JPEG, PNG, BMP, and TIFF files. | Converters |
| Extract content using the Unstructured API. | UnstructuredFileConverter |
| Extract entities from documents in the document store and add them to the documents' metadata. | NamedEntityExtractor |
| Calculate embeddings for documents. | DocumentEmbedders |
| Write cleaned and split documents into the document store. | DocumentWriter |
