Pipeline Components Overview

Components are the fundamental building blocks of your pipelines, dictating the flow of data. Each component performs a designated task on the data and then forwards the results to subsequent components.

How Components Work

Components receive predefined inputs within the pipeline, execute a specific function, and produce outputs. For example, a component may take files, convert them into embeddings, and pass these embeddings on to the next connected component. You have the flexibility to mix, match, and interchange components in your pipelines.

Components are often powered by language models, like LLMs or transformer models, to perform their tasks.

Connecting Components: Inputs and Outputs

Components accept specific inputs and produce defined outputs. To connect components in a pipeline, you must know the types of inputs and outputs they accept. The output type from one component must be compatible with the input type of the subsequent one. For example, to connect a retriever and a ranker in a pipeline, you must know that the retriever outputs List[Document] and the ranker accepts List[Document] as input.

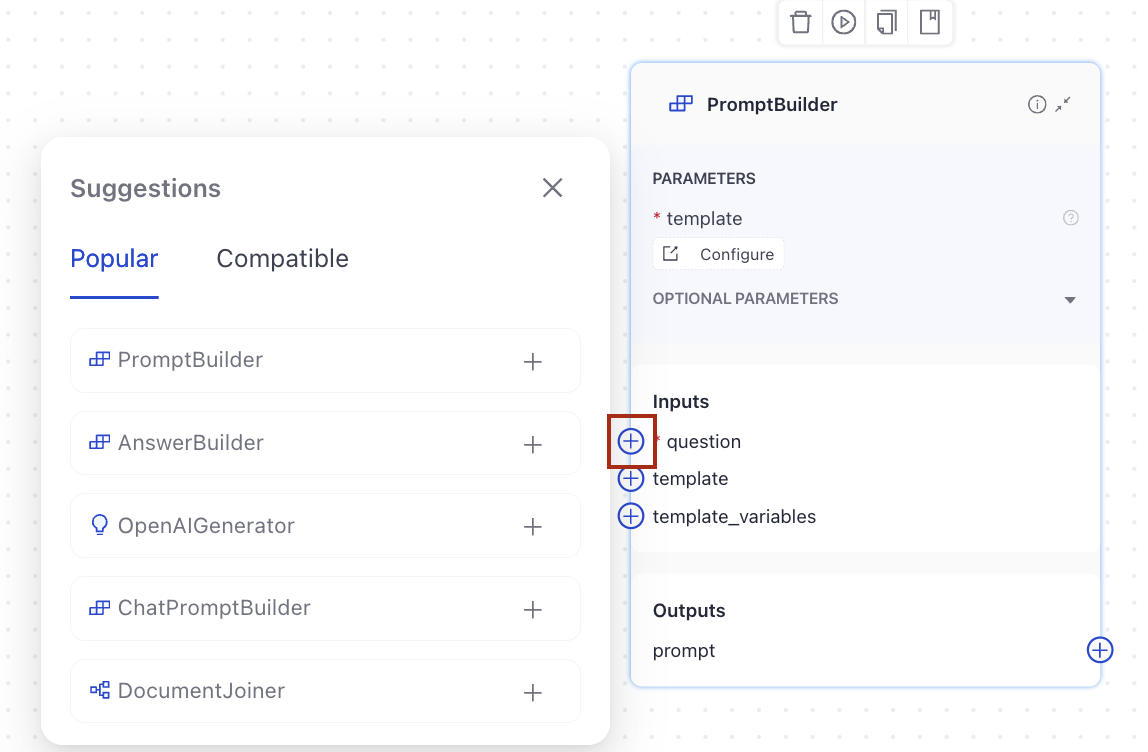
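The type-matching rule can be illustrated with a short Python sketch. The SOCKET_TYPES table, its placeholder type names for the router and converter, and the helper functions are all hypothetical. In practice, the platform validates connections for you, and the real types are listed on each component's documentation page. Only the first dot separates the component name from the output or input name, so a name like text/plain stays intact.

```python
# Hypothetical socket-type table for illustration only. The router/converter
# types are simplified placeholders; real types are in each component's docs.
SOCKET_TYPES = {
    ("retriever", "output", "documents"): "List[Document]",
    ("ranker", "input", "documents"): "List[Document]",
    ("file_type_router", "output", "text/plain"): "List[Path]",
    ("text_converter", "input", "sources"): "List[Path]",
}


def split_endpoint(endpoint: str) -> tuple[str, str]:
    """Split 'component.socket' on the first dot only, so 'text/plain' survives."""
    component, _, socket = endpoint.partition(".")
    if not component or not socket:
        raise ValueError(f"expected 'component.socket', got {endpoint!r}")
    return component, socket


def can_connect(sender: str, receiver: str) -> bool:
    """Return True if the sender's output type matches the receiver's input type."""
    s_comp, s_sock = split_endpoint(sender)
    r_comp, r_sock = split_endpoint(receiver)
    out_type = SOCKET_TYPES[(s_comp, "output", s_sock)]
    in_type = SOCKET_TYPES[(r_comp, "input", r_sock)]
    return out_type == in_type


print(can_connect("retriever.documents", "ranker.documents"))  # True
print(can_connect("file_type_router.text/plain", "text_converter.sources"))  # True
print(can_connect("retriever.documents", "text_converter.sources"))  # False
```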

When creating a pipeline in Pipeline Builder, you can click the connector to see the list of popular and compatible connections:

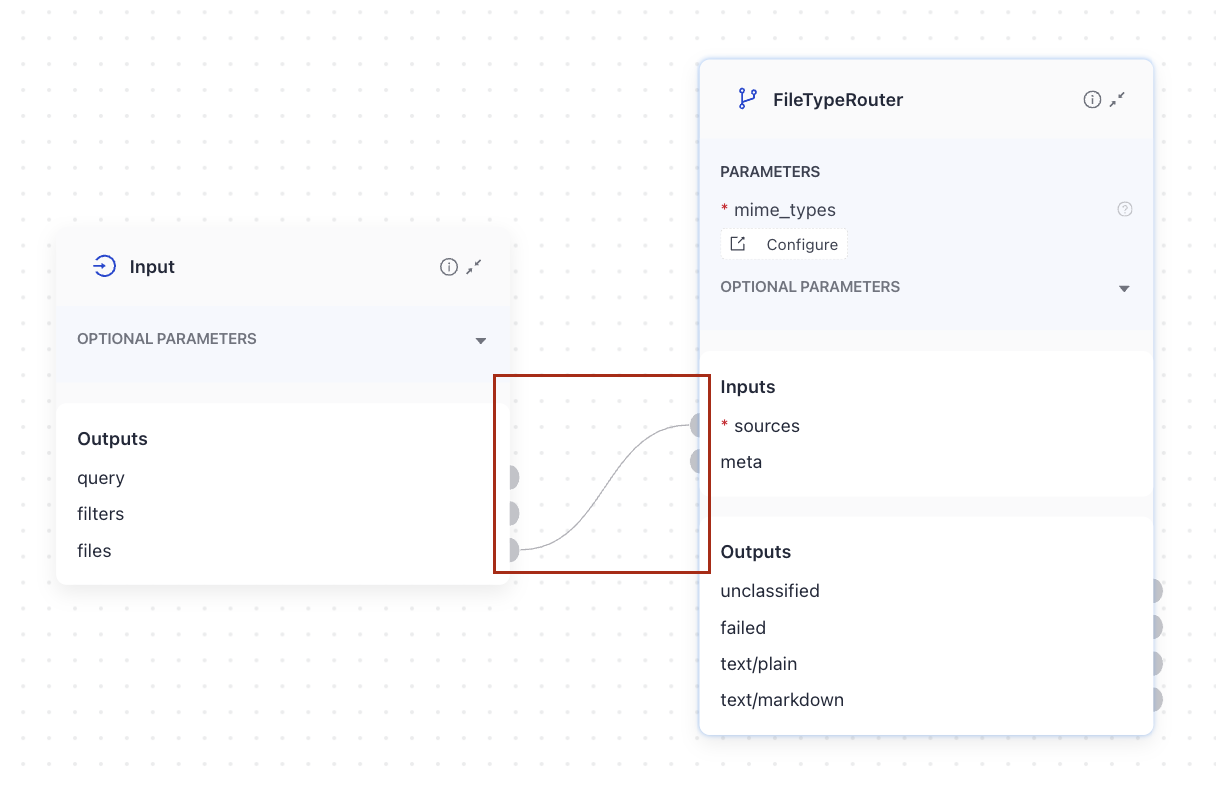

To connect components, click a connection point on one component, then on the other, and you'll see a line formed from one component's output to the other component's input.

When working in YAML, you specify the sender component's output name and the receiver component's input name in the connections section, for example:

```yaml
# ...
connections:
  - sender: retriever.documents
    receiver: ranker.documents
# ...
```

The output of the sender component can have a different name than the input of the receiver component as long as their types match, as in this example:

```yaml
# ...
connections:
  - sender: file_type_router.text/plain
    receiver: text_converter.sources
# ...
```

A component must always receive all of its required inputs. Typically, each input can be connected to only one output. If an input is variadic, however, it can receive outputs from multiple components.
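Here's a hedged sketch of these input rules, using the retriever and joiner connections from the YAML examples on this page. The INPUT_SPECS table, which marks each input as required and/or variadic, is hypothetical. The sketch also ignores pipeline-level inputs (such as a query), which can satisfy a required input as well.

```python
from collections import defaultdict

# Hypothetical input specs: socket name -> (required, variadic).
# Real specs are listed on each component's documentation page.
INPUT_SPECS = {
    "ranker": {"documents": (True, False)},
    "joiner": {"documents": (True, True)},
}


def validate_inputs(connections: list[dict]) -> list[str]:
    """Return the problems found in a list of sender/receiver connections."""
    senders_per_input = defaultdict(list)
    for conn in connections:
        component, _, socket = conn["receiver"].partition(".")
        senders_per_input[(component, socket)].append(conn["sender"])

    problems = []
    for component, inputs in INPUT_SPECS.items():
        for socket, (required, variadic) in inputs.items():
            senders = senders_per_input.get((component, socket), [])
            if required and not senders:
                problems.append(f"{component}.{socket} is required but not connected")
            if len(senders) > 1 and not variadic:
                problems.append(
                    f"{component}.{socket} is not variadic but has {len(senders)} senders"
                )
    return problems


connections = [
    {"sender": "embedding_retriever.documents", "receiver": "joiner.documents"},
    {"sender": "bm25_retriever.documents", "receiver": "joiner.documents"},
    {"sender": "joiner.documents", "receiver": "ranker.documents"},
]
print(validate_inputs(connections))  # []

# Sending a second output into the ranker's non-variadic input is flagged.
too_many = connections + [{"sender": "bm25_retriever.documents", "receiver": "ranker.documents"}]
print(validate_inputs(too_many))
```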

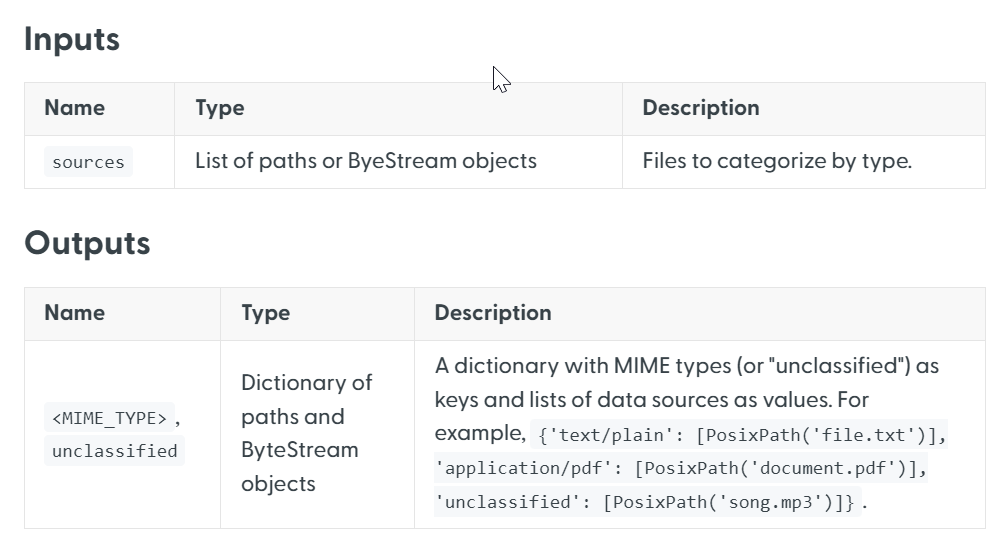

For detailed specifications on what inputs and outputs each component requires and generates, refer to the individual documentation pages for each component. The component's inputs and outputs are listed at the top of the page:

Configuring Components

In Pipeline Builder

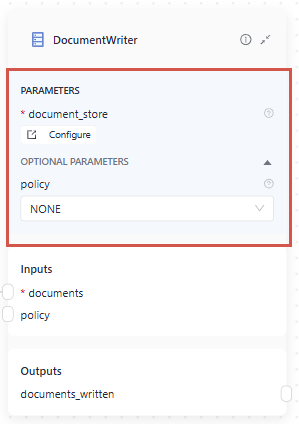

In Pipeline Builder, you simply drag components onto the canvas from the component library. You can then customize the component's parameters on the component card:

You can change the component name by clicking it and typing in a new name. And that's it!

In YAML

When adding a component to the pipeline YAML configuration, you must add the following information:

-

Component name: This is a custom name you give to a component. You then use this name when configuring the component's connections.

-

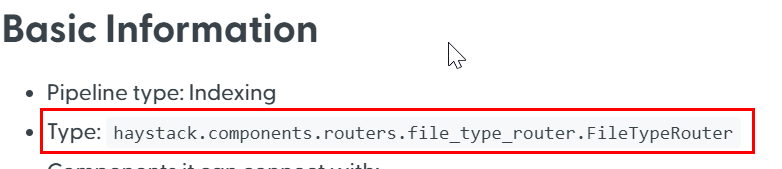

Type: Each component has a type you must add to the YAML. You can check the component's type on the component's documentation page:

-

Init_parameters: These are the configuration parameters for the component. You can check them on the component's documentation page or in the API reference. To leave the default values, add an empty dictionary:

init_parameters: {}.

This is an example of how you can configure a component in the YAML:

```yaml
components:
  my_component: # this is a custom name
    type: haystack.components.routers.file_type_router.FileTypeRouter # this is the component type you can find in the component's documentation
    init_parameters: {} # this uses the default parameter values

  another_component:
    type: haystack.components.joiners.document_joiner.DocumentJoiner
    init_parameters:
      join_mode: concatenate
      sort_by_score: false
```
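To see what the platform needs from each entry, you can write the components section above as a Python dict and check it with a few lines of code. This is a minimal sketch, not how Haystack Enterprise Platform loads YAML. check_components and effective_params are hypothetical helpers, and the joiner defaults are placeholder values. The merge in effective_params mirrors how Python keyword arguments with default values behave, which is why an empty init_parameters dictionary keeps every default.

```python
# The components section of the YAML example, written as a Python dict.
components = {
    "my_component": {
        "type": "haystack.components.routers.file_type_router.FileTypeRouter",
        "init_parameters": {},  # {} means: use the default values
    },
    "another_component": {
        "type": "haystack.components.joiners.document_joiner.DocumentJoiner",
        "init_parameters": {"join_mode": "concatenate", "sort_by_score": False},
    },
}

REQUIRED_KEYS = {"type", "init_parameters"}


def check_components(components: dict) -> dict[str, tuple[str, str]]:
    """Check each entry has a type and init_parameters; return (module, class) per name."""
    parsed = {}
    for name, spec in components.items():
        missing = REQUIRED_KEYS - set(spec)
        if missing:
            raise ValueError(f"{name} is missing {sorted(missing)}")
        module_path, _, class_name = spec["type"].rpartition(".")
        if not module_path:
            raise ValueError(f"{name}: type must be a full import path, got {spec['type']!r}")
        parsed[name] = (module_path, class_name)
    return parsed


def effective_params(defaults: dict, init_parameters: dict) -> dict:
    """Values in init_parameters override defaults; an empty dict keeps every default."""
    return {**defaults, **init_parameters}


# Hypothetical defaults, for illustration only; check the component docs for real ones.
joiner_defaults = {"join_mode": "concatenate", "sort_by_score": True}

print(check_components(components))
print(effective_params(joiner_defaults, components["another_component"]["init_parameters"]))
```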

Connecting Components

Because a component can have multiple outputs, you must explicitly specify which output you want to send to another component. You configure connections in the connections section of the YAML. Refer to each component by its custom name, followed by a dot and the name of the output or input you want to connect, as in this example:

```yaml
connections:
  # we're connecting the text/plain output of the sender to the sources input of the receiver component
  - sender: my_component.text/plain
    receiver: text_converter.sources
```

If a component accepts outputs from multiple components, you explicitly define each connection, as in this example:

```yaml
connections:
  - sender: embedding_retriever.documents
    receiver: joiner.documents
  - sender: bm25_retriever.documents
    receiver: joiner.documents
```

Component Groups

Components are grouped by their function, for example, embedders, generators, and so on. Read about what the groups do to help you find appropriate components.

By default, components run on CPU. You can turn on GPU acceleration in the pipeline or index settings to speed up processing. For details, see GPU Acceleration.

Embedders

Embedders convert text strings or Document objects into vector representations (embeddings). They use pre-trained models to do that.

Embeddings are vector representations of text that capture the context and meaning of words rather than relying only on keywords. In LLM apps, they speed up processing and help models capture complex linguistic nuances and the semantic meaning of text.

Text and Document Embedders

There are two types of embedders: text and document. Text embedders work with text strings and are most often used at the beginning of query pipelines to convert the query text into a vector and send it to a retriever. Document embedders embed Document objects and are most often used in indexing pipelines, after converters and before DocumentWriter.

You must use the same embedding model for text and documents. This means that if you use CohereDocumentEmbedder in your indexing pipeline, you must then use CohereTextEmbedder with the same model in your query pipeline.
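Retrievers compare embeddings using a similarity measure. Cosine similarity is a common one, though the exact measure depends on the document store. The sketch below uses made-up three-dimensional vectors to show why the models must match: vectors from different models can't be meaningfully compared, and a dimension mismatch is only the most visible symptom. Two different models with the same dimension still produce incompatible vectors, and this check can't detect that.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError(
            f"dimension mismatch ({len(a)} vs {len(b)}): were these produced by different models?"
        )
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional vectors; real embedding models produce hundreds of dimensions.
query = [0.9, 0.1, 0.0]
doc_about_same_topic = [0.8, 0.2, 0.1]
doc_about_other_topic = [0.0, 0.1, 0.9]

print(round(cosine_similarity(query, doc_about_same_topic), 3))
print(round(cosine_similarity(query, doc_about_other_topic), 3))
```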

Generators

Generators let you use large language models (LLMs) in your applications. Each model provider has a dedicated Generator. There are also ChatGenerators designed for chat-based interactions. Learn when to use each type and how they differ.

Choosing a Generator

Each model provider or model-hosting platform supported by Haystack Platform has a dedicated Generator. Choose the one that works with the model provider you want to use. For example, to use Claude models through Anthropic's API, choose AnthropicGenerator. To use models through Amazon Bedrock, choose AmazonBedrockGenerator.

For guidance on models, see Language Models in Haystack Enterprise Platform.

Generators and ChatGenerators

Most Generators have a corresponding ChatGenerator.

- Generators are designed for text-generation tasks, such as in a retrieval-augmented generation (RAG) system, where the user asks a question and receives a one-time answer.

- ChatGenerators handle multi-turn conversations, maintaining context and consistency throughout the interaction. They also support tool calling, which allows the model to make calls to external tools or functions.

Key Differences

| | Generators | ChatGenerators |
|---|---|---|
| Input type | String (supports Jinja2 syntax) | List of ChatMessage objects |
| Output type | List of strings | List of ChatMessage objects |
| Best for | Single-turn text generation; RAG-style Q&A | Multi-turn chat scenarios; maintaining context across interactions; assuming a consistent role; tool calls; using various content types, such as text and images, in prompts |
| Tool calling | Not supported | Supported (accepts tools and functions as parameters) |
| Used with | PromptBuilder | ChatPromptBuilder |

When to Use Each

- Use a ChatGenerator if:
  - Your application involves multi-turn conversations.
  - The model needs to call external tools or functions.
  - You want to use various content types in the prompt, for example, images and text.
- Use a Generator if the model only needs to generate answers without maintaining conversation history.

Streaming

Streaming means sending the model's response in real time while it's being generated. Instead of waiting until the entire response is ready, the model returns the answer token by token, which makes the communication feel more fluid and immediate.

Streaming is particularly useful in applications where timely feedback is crucial, such as in live chat interfaces or conversational agents.

All RAG pipelines in Haystack Enterprise Platform have streaming enabled by default.
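The sketch below simulates streaming with a hypothetical fake_model generator and an on_token callback. It only illustrates the idea and doesn't use Haystack's streaming API. Haystack Generators expose streaming through their own callback parameter, so check the component documentation for its exact signature.

```python
import time
from typing import Callable, Iterator, Optional


def fake_model(prompt: str) -> Iterator[str]:
    """Hypothetical LLM stand-in that yields its answer one token at a time."""
    for token in ["Streaming ", "sends ", "tokens ", "as ", "they ", "arrive."]:
        time.sleep(0.01)  # simulate per-token generation latency
        yield token


def generate(prompt: str, on_token: Optional[Callable[[str], None]] = None) -> str:
    """Forward each token to on_token as soon as it exists, then return the full reply."""
    tokens = []
    for token in fake_model(prompt):
        if on_token is not None:
            on_token(token)  # e.g. a chat UI renders this token immediately
        tokens.append(token)
    return "".join(tokens)


reply = generate("What is streaming?", on_token=lambda t: print(t, end="", flush=True))
print()  # end the streamed line
```

The full reply is still returned at the end, so downstream components that need the complete text aren't affected by streaming.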

ChatMessage

ChatMessage is a data class in Haystack used by ChatGenerators and ChatPromptBuilder. ChatPromptBuilder sends a list of ChatMessages to a ChatGenerator, which then also returns a list of ChatMessages.

Each message has a role (such as system, user, assistant, or tool) and associated content. The system message sets the overall tone and instructions for the conversation, for example: "You are a helpful assistant." The user message is the input from the user, usually a query, but it can also include documents to pass to the model. During the interaction, the LLM generates the next message in the conversation, usually with the assistant role.

For details on the message format and its properties, see ChatMessage in Haystack documentation.
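Here's a simplified Python sketch of how roles structure a conversation. The Role enum and Message dataclass are hypothetical stand-ins, not Haystack's ChatMessage. In Haystack itself, you'd typically create messages with ChatMessage.from_system and ChatMessage.from_user.

```python
from dataclasses import dataclass
from enum import Enum


class Role(str, Enum):
    SYSTEM = "system"
    USER = "user"
    ASSISTANT = "assistant"
    TOOL = "tool"


@dataclass
class Message:
    """Simplified, hypothetical stand-in for Haystack's ChatMessage."""
    role: Role
    text: str


conversation = [
    Message(Role.SYSTEM, "You are a helpful assistant."),
    Message(Role.USER, "What does a ChatGenerator return?"),
]

# The LLM's reply is appended as the next message, usually with the assistant role,
# so the whole history is available on the next turn.
conversation.append(Message(Role.ASSISTANT, "A list of ChatMessage objects."))

for message in conversation:
    print(f"{message.role.value}: {message.text}")
```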

When using ChatPromptBuilder, always provide your instructions in its template parameter, using the following format:

```yaml
- content:
    - content_type: # replace this with the content type; supported content types are: text, tool_call, tool_call_result
        # content may contain variables
  role: role # supported roles are: user, system, assistant, tool
```

In most cases, you'll write your instructions using the text content type and roles such as user, system, assistant, or tool. For instance, you might include the model's instructions as a system role ChatMessage and the user's input as a user role ChatMessage. In the following example, the system message holds the instructions and the user message passes the query:

```yaml
- _content:
    - text: |
        You are a helpful assistant answering the user's questions.
        If the answer is not in the documents, rely on the web_search tool to find information.
        Do not use your own knowledge.
  _role: system
- _content:
    - text: |
        Question: {{ query }}
  _role: user
```

If the LLM calls a tool, it outputs a message with the content type tool_call. You can use this content type to configure conditional pipeline paths. For example, you can create two routes with ConditionalRouter: one route for when the LLM makes a tool call, and another for when it doesn't.
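The routing decision can be sketched in plain Python. The reply shape used here (a dict with a role and a list of content parts keyed by content type) and the route names are hypothetical simplifications of ChatMessage. In a pipeline, you'd express the same check as conditions in ConditionalRouter, whose exact syntax is in its documentation.

```python
# Hypothetical, simplified reply shape: each message has a role and a list of
# content parts, and each part is a dict keyed by its content type.
def route(replies: list[dict]) -> str:
    """Pick a pipeline branch depending on whether the LLM made a tool call."""
    for message in replies:
        for part in message["content"]:
            if "tool_call" in part:
                return "tool_call"  # e.g. send the replies on to a tool-invoking component
    return "no_tool_call"  # e.g. send the replies on to the answer builder


with_tool = [
    {
        "role": "assistant",
        "content": [{"tool_call": {"tool_name": "web_search", "arguments": {"query": "Haystack"}}}],
    }
]
without_tool = [{"role": "assistant", "content": [{"text": "Here is the answer."}]}]

print(route(with_tool))
print(route(without_tool))
```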

Jinja2 Syntax in ChatPromptBuilders

You can pass ChatMessages as Jinja2 strings in the template parameter of ChatPromptBuilder. This makes it possible to create structured ChatMessages with mixed content types, such as images and text. It also makes it possible to test the prompt in Prompt Explorer.

Use the {% message %} tag to include the ChatMessage in the prompt. For example:

```jinja
{% message role="system" %}
You are a helpful assistant answering the user's questions.
If the answer is not in the documents, rely on the web_search tool to find information.
Do not use your own knowledge.
{% endmessage %}
```

For details, see ChatPromptBuilder and Writing Prompts in Haystack Enterprise Platform.
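Each {% message %} block becomes one ChatMessage with the given role. The following sketch makes that mapping visible by splitting a template into (role, text) pairs with a regular expression. It's a simplified illustration, not ChatPromptBuilder's implementation: it doesn't render Jinja2, so variables like {{ query }} stay as-is.

```python
import re

# Matches {% message role="..." %} ... {% endmessage %} blocks, across newlines.
MESSAGE_BLOCK = re.compile(
    r'\{%\s*message\s+role="(?P<role>\w+)"\s*%\}(?P<body>.*?)\{%\s*endmessage\s*%\}',
    re.DOTALL,
)


def split_messages(template: str) -> list[tuple[str, str]]:
    """Return (role, text) pairs for each message block, in template order."""
    return [(m["role"], m["body"].strip()) for m in MESSAGE_BLOCK.finditer(template)]


template = """
{% message role="system" %}
You are a helpful assistant answering the user's questions.
Do not use your own knowledge.
{% endmessage %}
{% message role="user" %}
Question: {{ query }}
{% endmessage %}
"""

for role, text in split_messages(template):
    print(role, "->", text.splitlines()[0])
```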

Generators in a Pipeline

- Generators receive the prompt from PromptBuilder and return a list of strings. They can easily connect to any component that accepts a list of strings as input.
- ChatGenerators receive prompts from ChatPromptBuilder in the form of a list of ChatMessage objects or templatized Jinja2 strings, and they return a list of ChatMessage objects.
- If you want a ChatGenerator's output to be the final pipeline output, you can either connect it directly to AnswerBuilder or use an OutputAdapter to convert the ChatGenerator's output into a list of strings and then connect it to DeepsetAnswerBuilder.

For details, see Common Component Combinations.
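The OutputAdapter step boils down to a change of shape, from a list of ChatMessage objects to a list of strings. The sketch below shows that transformation in Python, with a hypothetical Reply dataclass standing in for ChatMessage. In an actual pipeline, you configure OutputAdapter with a template instead of writing code.

```python
from dataclasses import dataclass


@dataclass
class Reply:
    """Hypothetical, simplified stand-in for a ChatMessage returned by a ChatGenerator."""
    role: str
    text: str


def replies_to_strings(replies: list[Reply]) -> list[str]:
    """Convert ChatGenerator-style replies into the list of strings a Generator would return."""
    return [reply.text for reply in replies]


replies = [Reply(role="assistant", text="Paris is the capital of France.")]
print(replies_to_strings(replies))
```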

Limitations

ChatGenerators work in Prompt Explorer only if you use the Jinja2 syntax in the ChatPromptBuilder's template parameter. If you use ChatMessages, you can experiment with prompts by using the Configurations feature in the Playground to modify the ChatPromptBuilder's template parameter. For details, see Modify Pipeline Parameters at Query Time.

Haystack Components

Haystack is an open source Python framework for building production-ready AI-based systems. Haystack Enterprise Platform is based on Haystack and uses its components, pipelines, and methods under the hood. To learn more about Haystack, see the Haystack website.

External Links

Haystack Enterprise Platform combines Haystack components with its own unique components. The Haystack Platform-specific components are fully documented on this website. For Haystack components, we provide direct links to the official Haystack documentation. To help you differentiate, Haystack components are marked with an arrow icon in the navigation. Clicking on these components will take you to the Haystack documentation page.

Using Haystack Components Documentation

In Haystack, you can run some components on their own. You can see examples of this in the Usage section of each component page. In Haystack Enterprise Platform, you can only use components in pipelines. To see usage examples, check the Usage > In a pipeline section.

You can also check the component's code in the Haystack repository. The path to the component is always available at the top of the component documentation page.
