AI Agent

AI Agents are intelligent systems that perform tasks autonomously by reasoning, making decisions, and interacting with external tools. At their core, they rely on LLMs to determine what actions to take. By working with tools such as pipelines or custom functions, Agents can handle tasks far more complex than those that traditional chatbots or generative question-answering systems can manage. Agents are particularly useful when tasks require autonomous decision-making and interaction with multiple tools.

Haystack Platform Agent

Haystack Platform Agent is an AI-based system that reasons about actions and executes multi-step tasks autonomously using the tools at its disposal. Unlike a RAG Chat pipeline, which only answers questions based on the data it has, an Agent can plan ahead, decide which tools it needs, and work through multi-step processes to accomplish a goal. This may sound complex, but at its core an Agent is just an LLM calling tools, which are executed in a loop until a defined exit condition is met.

Key Features

- Model-agnostic: Works with any LLM, giving you full flexibility in model choice.

- Tool-friendly: Seamlessly uses external tools like pipelines, custom functions, and MCP servers.

- Stay in control: Set clear exit conditions so the Agent stops when and how you want.

- Context-aware: Remembers conversation history and tracks which tools it has used.

- Real-time output: Supports streaming responses.

- Async-ready: Supports asynchronous execution to fit into complex workflows.

- Observability: Connect tracing tools such as Langfuse or Weights & Biases Weave to monitor the Agent's behavior in detail.
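An LLM can only call a tool it has been told about, so a tool is typically described to the model by a name, a natural-language description, and a JSON-Schema-style parameter spec, while the actual function stays on the Agent's side. The following is a minimal plain-Python sketch under that assumption. The `ToolSpec` class and the `get_weather` function are hypothetical illustrations, not the Haystack Platform API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class ToolSpec:
    """Hypothetical tool description: metadata for the LLM plus the function the Agent runs."""
    name: str
    description: str
    parameters: Dict[str, Any]  # JSON-Schema-style description of the arguments
    function: Callable[..., Any]

    def to_llm_spec(self) -> Dict[str, Any]:
        # Only the metadata is sent to the LLM; the Python function stays local.
        return {
            "name": self.name,
            "description": self.description,
            "parameters": self.parameters,
        }

    def invoke(self, **kwargs: Any) -> Any:
        # The Agent, not the LLM, actually executes the tool.
        return self.function(**kwargs)


def get_weather(city: str) -> str:
    # Hypothetical stand-in for a real tool such as a pipeline or an API call.
    return f"Sunny in {city}"


weather_tool = ToolSpec(
    name="get_weather",
    description="Return the current weather for a city.",
    parameters={
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
    function=get_weather,
)

print(weather_tool.to_llm_spec()["name"])  # get_weather
print(weather_tool.invoke(city="Berlin"))  # Sunny in Berlin
```

Keeping the description separate from the callable is what lets the same tool be offered to any model: the LLM only ever sees the spec and replies with a tool name and arguments.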

Agent vs RAG Chat

Both an Agent and a RAG Chat pipeline are LLM-based systems that can act as assistants and answer questions in a chat format. But there are significant differences between the two:

- RAG Chat is designed for question answering with retrieval-augmented generation. It’s great when your goal is to ground responses in documents using a Retriever and a Generator. It’s simple, fast, and optimized for knowledge-based chats.

- Agent is built for more complex, dynamic workflows. It can use a variety of tools, such as pipelines, custom code, and MCP servers, to decide how to answer. Agents can maintain context, make decisions, and chain multiple steps together.

Use RAG Chat when you need fast, document-based answers. Use Agent when your task involves reasoning, tool use, or multi-step logic.

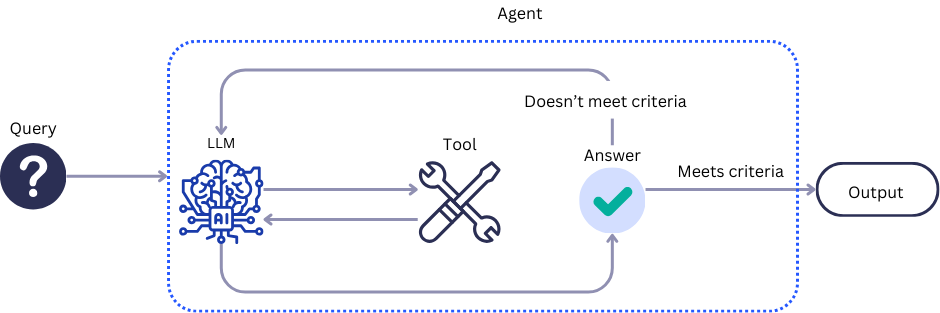

Agent's Workflow

The Agent follows this general workflow:

- The Agent receives a user message and sends it to the LLM together with a list of tools.

- The LLM decides whether to respond directly or call a tool.

  - If the LLM returns an answer, the Agent stops and returns the result.

  - If the LLM returns a tool call, the Agent runs the tool.

- The Agent receives the tool call result and checks if it matches the exit condition.

  - If it does, the Agent stops and returns all the messages.

  - If it doesn't, the Agent sends the conversation history, including the tool output, back to the LLM and the loop starts over.
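The loop in this workflow can be sketched in a few lines of plain Python. This is a hypothetical illustration, not the Haystack Platform implementation: the message dictionaries, the `run_agent` function, the scripted stand-in LLM, and the `max_steps` safety limit are all assumptions made for the example. Here an exit condition is modeled as the name of a tool whose execution should end the run.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def run_agent(
    user_message: str,
    llm: Callable[[List[Message], List[str]], Message],
    tools: Dict[str, Callable[..., Any]],
    exit_conditions: List[str],
    max_steps: int = 10,  # assumed safety limit, not described in the workflow above
) -> List[Message]:
    """Hypothetical sketch of the Agent loop."""
    messages: List[Message] = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        # Send the conversation so far together with the names of the available tools.
        reply = llm(messages, list(tools))
        messages.append(reply)
        tool_call = reply.get("tool_call")
        if tool_call is None:
            # The LLM answered directly: stop and return the result.
            return messages
        # The LLM requested a tool: the Agent runs it and records the output.
        name, args = tool_call["name"], tool_call["arguments"]
        result = tools[name](**args)
        messages.append({"role": "tool", "name": name, "content": result})
        if name in exit_conditions:
            # The tool that just ran is an exit condition: stop and return all messages.
            return messages
        # Otherwise loop: the LLM sees the tool output on the next iteration.
    raise RuntimeError("Agent did not finish within max_steps")


def scripted_llm(messages: List[Message], tool_names: List[str]) -> Message:
    # Hypothetical stand-in for a real LLM: call the tool once, then answer.
    if messages[-1]["role"] == "user":
        return {"role": "assistant", "tool_call": {"name": "add", "arguments": {"a": 2, "b": 3}}}
    return {"role": "assistant", "content": f"The sum is {messages[-1]['content']}."}


tools = {"add": lambda a, b: a + b}
history = run_agent("What is 2 + 3?", scripted_llm, tools, exit_conditions=[])
print(history[-1]["content"])  # The sum is 5.
```

With `exit_conditions=["add"]`, the same run stops right after the tool executes, so the last message is the tool output rather than an LLM answer.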

Building Agents in Haystack Enterprise Platform

The Agent is available as a pipeline component you can add to your pipelines. It lets you easily choose the underlying model, configure and edit tools on the Agent component card, and connect it to other components that send or receive data. The Agent also has memory that persists across runs, allowing it to track conversation history and the tools it has used.
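Memory that persists across runs amounts to keeping the message history, and a record of which tools were used, outside any single run. Below is a minimal sketch assuming messages are plain dictionaries. The `AgentMemory` class and the `search_docs` tool name are hypothetical and do not describe how the Haystack Platform stores memory.

```python
from typing import Any, Dict, List, Set


class AgentMemory:
    """Hypothetical store for conversation history and tool usage across runs."""

    def __init__(self) -> None:
        self.messages: List[Dict[str, Any]] = []
        self.tools_used: Set[str] = set()

    def record(self, message: Dict[str, Any]) -> None:
        # Every message is kept; tool messages also update the set of tools used.
        self.messages.append(message)
        if message.get("role") == "tool":
            self.tools_used.add(message["name"])

    def context(self) -> List[Dict[str, Any]]:
        # Return a copy so callers cannot mutate the stored history by accident.
        return list(self.messages)


memory = AgentMemory()
# Run 1
memory.record({"role": "user", "content": "Find the Q3 report."})
memory.record({"role": "tool", "name": "search_docs", "content": "q3_report.pdf"})
memory.record({"role": "assistant", "content": "I found q3_report.pdf."})
# Run 2 starts with everything from run 1 already available as context.
memory.record({"role": "user", "content": "Summarize it."})
print(len(memory.context()))  # 4
print(sorted(memory.tools_used))  # ['search_docs']
```

Because the second run sees the earlier tool result, a follow-up like "Summarize it." can be resolved without searching again.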

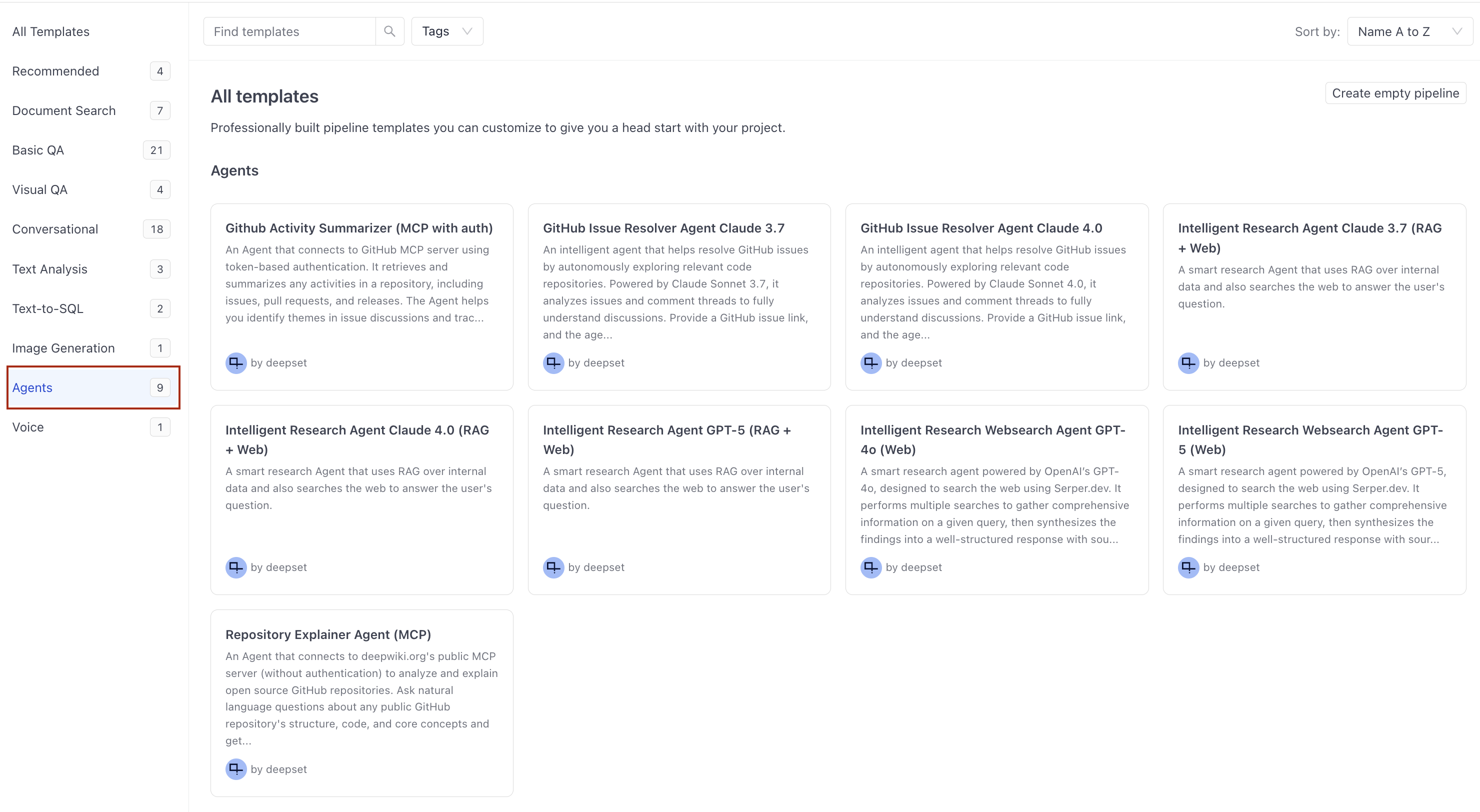

Available Pipeline Templates

Haystack Platform offers multiple Agent templates to help you start building your own Agents. You can find them on the Pipeline Templates page under Agents.

To use these templates, make sure you meet the prerequisites described on each template's details page.
