Agent Tools

Tools extend the Agent's capabilities beyond what the LLM can do on its own. They are external services the Agent can call to gather more information or perform actions. The Agent relies on tools to resolve complex queries that require multiple steps or additional information.

Available Tools

A tool can be:

- A Haystack Platform pipeline (like a RAG pipeline on local data)

- A custom function

- An MCP server that lets the Agent access an external service

For instructions on how to add tools to the Agent, see Configuring Agent.

Pipelines as Tools

You can use Haystack Platform pipelines as tools for your Agent. This lets the Agent run entire pipelines, such as a RAG pipeline on your local data, as part of its workflow.

You can use an existing pipeline as a tool or create one from a template. When you add a pipeline as a tool, it becomes a separate copy, detached from the original pipeline. You can still access the original pipeline from the Pipelines page and continue using it as you normally would. Any changes you make to the original pipeline don't affect the tool pipeline.

You can choose how to handle pipeline inputs and outputs. The Agent can either generate the tool inputs or read them from its state. Storing outputs in the state makes them available to other tools and components in the agent workflow. The Agent's state_schema is updated automatically to match.

For details, see Tools and Agent State.

Custom Code as Tools

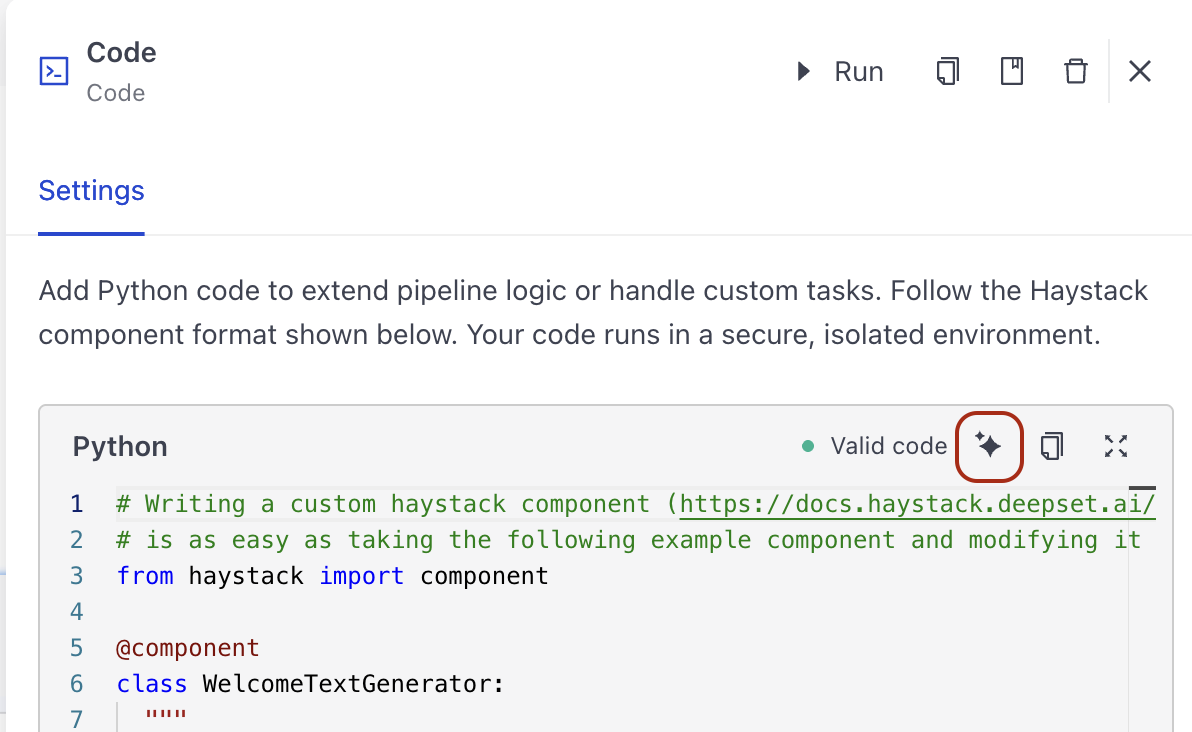

You can turn custom Python functions into tools your Agent can use. This lets you add any functionality you need to your Agent. The function becomes a tool the Agent can call when needed.

To add a custom tool, choose Code as the tool type. This opens the code editor with an example you can modify. The code must use the @tool decorator, which automatically converts your function into a tool. Each tool must have a name, description, and parameters.

The @tool decorator infers the tool name, description, and parameters from the function. The Agent uses the tool's name and description to decide when to use it. Make sure these are clear and specific.

When creating your custom tool, use Python's typing.Annotated to add descriptions to parameters. This helps the Agent understand what each parameter does. For example, city: Annotated[str, "the city for which to get the weather"] = "Munich" describes the city parameter as a string and explains what it is for.
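To illustrate what kind of information a decorator can infer from a function, here is a minimal sketch that reads the name, docstring, and Annotated parameter descriptions using only the Python standard library. It is not the @tool implementation; get_forecast and summarize_function are hypothetical names used for illustration only.

```python
import inspect
from typing import Annotated, get_args, get_origin, get_type_hints


def get_forecast(
    city: Annotated[str, "the city for which to get the forecast"] = "Munich",
    days: Annotated[int, "how many days ahead to forecast"] = 3,
) -> str:
    """Return a placeholder weather forecast for a city."""
    return f"Forecast for {city}, next {days} days: sunny"


def summarize_function(func) -> dict:
    """Collect the name, description, and parameter details of a function.

    Hypothetical helper: it only shows which metadata is available to a
    decorator, not how Haystack actually builds a tool.
    """
    # include_extras=True keeps the Annotated metadata instead of stripping it.
    hints = get_type_hints(func, include_extras=True)
    parameters = {}
    for name, param in inspect.signature(func).parameters.items():
        hint = hints.get(name)
        base_type, description = hint, None
        if get_origin(hint) is Annotated:
            # The first argument is the real type, the rest is metadata.
            base_type, *metadata = get_args(hint)
            description = next((m for m in metadata if isinstance(m, str)), None)
        parameters[name] = {
            "type": base_type,
            "description": description,
            "default": param.default,
        }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func),
        "parameters": parameters,
    }


summary = summarize_function(get_forecast)
for name, details in summary["parameters"].items():
    print(name, details)
```

The function name and docstring are what the Agent would see when deciding whether to call the tool, which is why both should be specific.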

You can use the AI assistant to generate code for your custom tool. To do this, click the AI Assistant button on the tool card and write your request in the prompt.

Example: Weather Tool

This is an example of a weather tool that uses the @tool decorator:

```python
from typing import Annotated, Literal

from haystack.tools import tool


@tool
def get_weather(
    city: Annotated[str, "the city for which to get the weather"] = "Munich",
    unit: Annotated[Literal["Celsius", "Fahrenheit"], "the unit for the temperature"] = "Celsius",
):
    '''A simple function to get the current weather for a location.'''
    return f"Weather report for {city}: 20 {unit}, sunny"
```

For detailed instructions on how to add a custom tool, see Add Custom Code as a Tool.

MCP Servers as Tools

MCP servers make it possible to integrate external services into the Agent through the Model Context Protocol (MCP). MCP is a protocol that standardizes how AI applications communicate with external tools and services. The MCP tool supports two transport options:

- Streamable HTTP for connecting to HTTP servers

- Server-Sent Events (SSE) for connecting to servers that support SSE

Streamable HTTP sends data in pieces rather than all at once. It's like streaming a video: you start watching while the rest is still loading. SSE is one-way communication from the server to Haystack Platform, where the server pushes real-time updates to the Agent. You can compare it to text message alerts from your bank. Once you subscribe, the connection stays open and the server sends you a notification whenever there's activity.
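To make the SSE side more concrete, here is a minimal sketch of how a client could parse a server-sent event stream. It follows the general SSE wire format (event: and data: fields, with a blank line ending each event) and is not the Haystack Platform implementation. The event name tool_progress and the sample payloads are hypothetical.

```python
def parse_sse(stream_text: str) -> list[dict]:
    """Split raw SSE text into a list of {"event", "data"} dictionaries."""
    events = []
    event_type, data_lines = "message", []  # "message" is the default event type
    for line in stream_text.splitlines():
        if line == "":
            # A blank line ends the current event.
            if data_lines:
                events.append({"event": event_type, "data": "\n".join(data_lines)})
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            # Lines starting with a colon are comments, often used as keep-alives.
            continue
        else:
            field, _, value = line.partition(":")
            value = value.removeprefix(" ")  # one optional space after the colon
            if field == "event":
                event_type = value
            elif field == "data":
                data_lines.append(value)
    return events


# Hypothetical stream: one named event, one keep-alive comment, one multi-line event.
sample_stream = (
    "event: tool_progress\n"
    "data: step 1 of 2\n"
    "\n"
    ": keep-alive\n"
    "data: first line\n"
    "data: second line\n"
    "\n"
)

parsed_events = parse_sse(sample_stream)
for event in parsed_events:
    print(event)
```

In a real connection the text would arrive incrementally over an open HTTP response rather than as one string, but the per-event structure is the same.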

Some MCP servers require an authentication token to access the service.

You can connect only remote MCP servers. To use a local MCP server, first deploy it to a remote server.

For detailed instructions on how to add an MCP tool, see Add an MCP Tool.

Choosing the Right Tool Type

The type of tool you choose depends on what you want your Agent to do. Each tool type serves different purposes and works best for specific scenarios.

Use pipeline tools when:

- You need a multi-step workflow that combines several components

- You want to provide the Agent with access to your internal data through RAG

- The task requires a complex process like retrieval, ranking, and formatting

- You want to reuse an existing pipeline you've already built and tested

Examples:

- Use a RAG pipeline to search through company knowledge bases or internal documents

- Use a hybrid retrieval pipeline that combines keyword search, semantic search, and reranking

- Use a document processing pipeline that converts, splits, and ranks documents

Use custom code tools when:

- You need custom logic that doesn't exist as a component or pipeline

- You want to integrate with APIs or services not yet supported by Haystack Platform

- The functionality is specific to your use case or business logic

- You need simple, focused operations that don't require complex pipelines

Examples:

- Create a weather tool that calls a weather API

- Build a calculator tool for specific business calculations

- Develop a data formatter that transforms data in a custom way

- Create a tool that interacts with your internal systems or databases

Use MCP server tools when:

- You need to integrate with external services that provide MCP servers

- You want to use third-party tools through a standardized protocol

- You need real-time data or continuous updates from external sources

- You're working with services that support Model Context Protocol

Examples:

- Connect to deepwiki.org to analyze and explain GitHub repositories

- Integrate with services that provide MCP-compatible APIs

- Access external databases or knowledge bases through MCP

- Connect to monitoring or analytics services that support MCP

Combining Multiple Tools

You can equip your Agent with multiple tools of different types. This is useful when your Agent needs to handle diverse tasks. For example:

- Repository Explainer: Use an MCP server tool to access repository data and a custom code tool to format the output

- Support Agent: Use a pipeline tool to search documentation and custom code tools for specific business logic

When combining tools, make sure each tool has a clear, distinct purpose. Give them meaningful names and descriptions so the Agent can decide which tool to use for each task.

Tool Naming and Descriptions

Your tool names and descriptions should be clear and concise, and should describe the tool's capabilities and the data it expects and produces. This helps the Agent understand what the tool does and how to use it. Make sure it's easy for the LLM to differentiate between tools and their functions.

Tools and Agent State

Tools can read from and write to the Agent's state. To learn about Agent state, see the Agent State documentation.

For pipeline tools, you can choose how to handle inputs and outputs on the agent component card when configuring the tool. For other tools, you must configure this in the YAML. Bear with us while we work on unifying this.

Reading from Agent State

Tools can automatically receive arguments from the Agent's state if their input names match the names of state keys. For example, if you define a repository key of type string in the Agent's state_schema and the tool's input parameters include repository: str, the Agent automatically fills that parameter with the value stored under repository in the state.

You can also explicitly map state keys to tool parameters to indicate which keys should be passed to the tool. To do so, use the inputs_from_state parameter when configuring the tool.

When you explicitly map tool inputs using inputs_from_state, the tool only receives the inputs you specify.

Even if the state_schema contains additional keys, the tool won't have access to them unless they're explicitly mapped.
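The two behaviors described above, automatic name matching and explicit mapping, can be sketched as follows. This is a simplified illustration, not the platform's code. It assumes that inputs_from_state maps state keys to tool parameter names and that arguments generated by the LLM take precedence over values from the state. The resolve_tool_inputs helper and the describe_repo function are hypothetical.

```python
import inspect


def describe_repo(repository: str, branch: str = "main") -> str:
    """Hypothetical tool function that reports which repository it looks at."""
    return f"{repository}@{branch}"


def resolve_tool_inputs(func, state, llm_args, inputs_from_state=None):
    """Build the final arguments for a tool call from LLM arguments and state."""
    params = inspect.signature(func).parameters
    final_args = dict(llm_args)  # assumption: LLM-provided arguments win
    if inputs_from_state is not None:
        # Explicit mapping: only the listed state keys are passed to the tool.
        for state_key, param_name in inputs_from_state.items():
            if param_name not in final_args and state_key in state:
                final_args[param_name] = state[state_key]
    else:
        # Implicit matching: any parameter whose name equals a state key is filled.
        for param_name in params:
            if param_name not in final_args and param_name in state:
                final_args[param_name] = state[param_name]
    return final_args


state = {"repository": "deepset-ai/haystack", "branch": "develop", "user": "alice"}

implicit_args = resolve_tool_inputs(describe_repo, state, llm_args={})
explicit_args = resolve_tool_inputs(
    describe_repo, state, llm_args={}, inputs_from_state={"repository": "repository"}
)

print(describe_repo(**implicit_args))
print(describe_repo(**explicit_args))
```

With the explicit mapping, branch is no longer read from the state, so the function falls back to its own default value.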

Writing to Agent State

By default, when an Agent uses a tool, all its outputs are converted to strings and appended as a single ChatMessage with the tool role. This approach ensures that every tool result is recorded in the conversation history, making it available for the LLM in the next turn.

Additionally, you can store specific tool outputs in custom state fields using the outputs_to_state parameter. When you do this, outputs are merged into state based on their declared types in the state schema:

- List types: New values are concatenated to the existing list. If the new value isn't a list, it's converted to one first.

- Other types: The existing value is replaced with the new one.
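The merge rules above can be sketched in a few lines of Python. This is an illustration of the described behavior only. The merge_output helper is hypothetical, and the schema here is simplified to a plain mapping from key to declared type, which may differ from the actual state_schema format.

```python
from typing import get_origin


def merge_output(state: dict, schema: dict, key: str, new_value) -> None:
    """Merge a tool output into state according to the key's declared type."""
    declared_type = schema[key]
    if declared_type is list or get_origin(declared_type) is list:
        # List types: wrap non-list values, then append to the existing list.
        existing = state.get(key, [])
        addition = new_value if isinstance(new_value, list) else [new_value]
        state[key] = existing + addition
    else:
        # Other types: the new value replaces the old one.
        state[key] = new_value


# Simplified, hypothetical schema and starting state.
schema = {"documents": list[str], "repository": str}
state = {"documents": ["README.md"], "repository": "old/repo"}

merge_output(state, schema, "documents", ["CONTRIBUTING.md"])  # list is concatenated
merge_output(state, schema, "documents", "LICENSE")  # single value is wrapped first
merge_output(state, schema, "repository", "deepset-ai/haystack")  # value is replaced

print(state)
```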

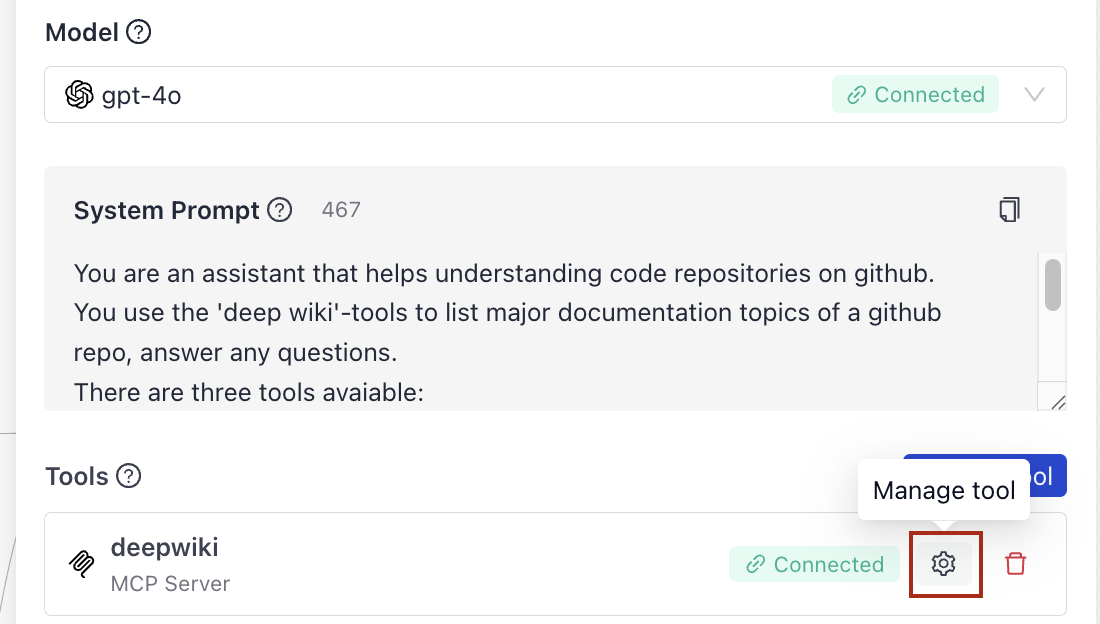

Testing and Editing Tools

You can test and edit agent tools directly from the agent component card. Open the Agent configuration panel, find the tool in the Tools section, and use Manage Tool to run or edit it.

For details, see Run Components and Pipelines in Builder.
