Advanced Component Connections

Learn how to connect pipeline components in cases that go beyond simple matching. This includes handling incompatible connections or setting up complex routing.

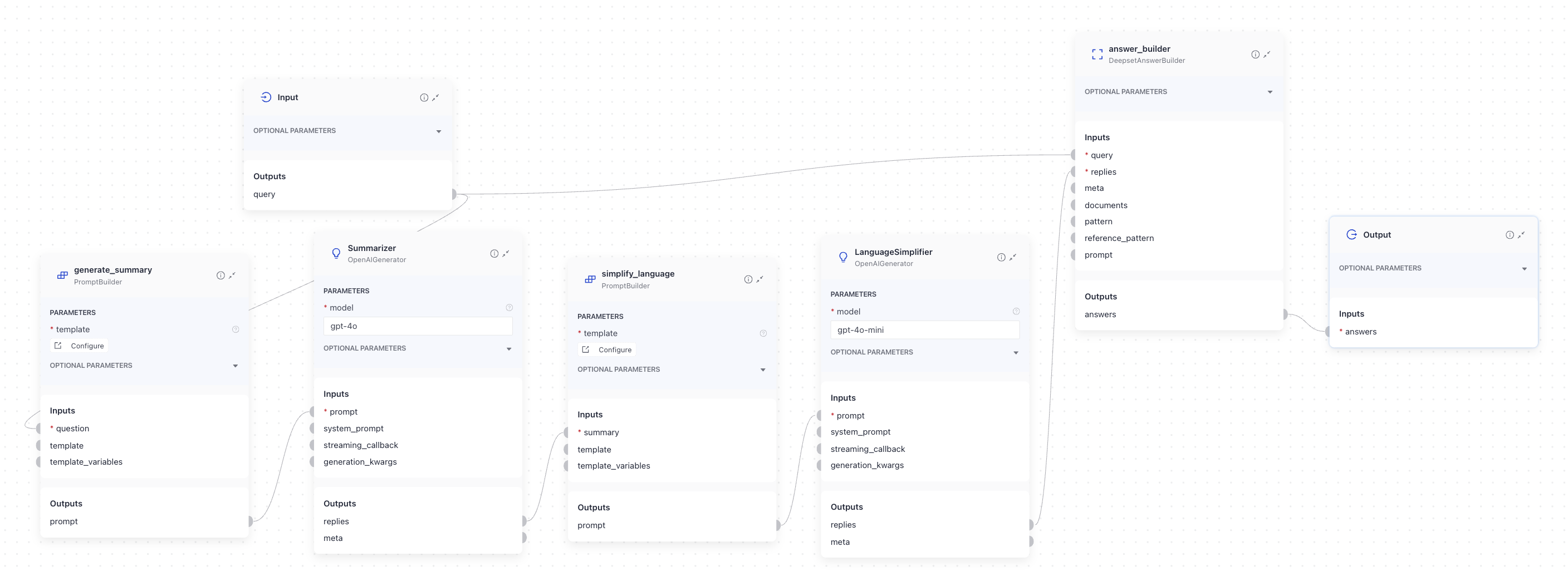

Connecting Two LLMs

You can connect the output of one LLM to the PromptBuilder connected to the next LLM. All that's needed is a placeholder in the prompt template of the second PromptBuilder for the output of the first LLM. Let's say you're building a pipeline that explains and simplifies complex contracts, such as bank loan contracts. The pipeline could include these steps:

- Summarize the contract: The first LLM summarizes the contract.

- Simplify the language: The second LLM simplifies the summarized contract.

You could use the following components:

- First PromptBuilder: Creates a prompt that includes the contract text and instructions for summarizing it. This PromptBuilder would receive the query (the contract to simplify).

- First Generator: Takes the prompt and generates a summary of the contract.

- Second PromptBuilder: Prepares a prompt with the summary and instructions for simplifying the language.

- Second Generator: Processes the prompt and produces a simplified version of the summary.

- AnswerBuilder: Converts the Generator’s replies into GeneratedAnswer objects, which are then output as the final answer.

Example Pipeline Flow

Here’s how the components connect to create the pipeline:

- The contract text is sent to the first PromptBuilder.

- The first Generator creates a summary based on the prompt.

- The second PromptBuilder receives the summary and adds it to the prompt for the second Generator.

- The second Generator simplifies the language of the summary.

- The AnswerBuilder structures the simplified response as the final output.
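The flow above can be sketched in plain Python. This is only an illustration of the chaining idea, not pipeline code: `fake_generator`, `run_chain`, and both template strings are hypothetical stand-ins, and no real LLM is called. The point it shows is that a Generator returns a list of replies, so the second prompt has to pick one item from that list.

```python
from typing import Callable, List

# Hypothetical, heavily shortened stand-ins for the two prompt templates.
SUMMARY_TEMPLATE = "Summarize this contract:\n{contract}"
SIMPLIFY_TEMPLATE = "Rewrite this summary in plain language:\n{summary}"


def fake_generator(prompt: str) -> List[str]:
    """Hypothetical stand-in for an LLM call. Like a Generator, it returns a list of replies."""
    return [f"<reply to: {prompt.splitlines()[0]}>"]


def run_chain(contract: str, generate: Callable[[str], List[str]] = fake_generator) -> List[str]:
    # Step 1: the first PromptBuilder fills the contract into the summary prompt.
    summary_prompt = SUMMARY_TEMPLATE.format(contract=contract)
    # Step 2: the first Generator returns a list of replies.
    summary_replies = generate(summary_prompt)
    # Step 3: the second PromptBuilder receives that list and uses its first item.
    # A placeholder such as {{ summary[0] }} plays this role in a prompt template.
    simplify_prompt = SIMPLIFY_TEMPLATE.format(summary=summary_replies[0])
    # Step 4: the second Generator produces the simplified text.
    return generate(simplify_prompt)


result = run_chain("The borrower shall repay the loan in 12 installments.")
print(result[0])
```

Passing a different `generate` function makes it possible to try the chaining logic without changing the two prompt steps.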

Here is the pipeline:

YAML configuration

    components:
      LanguageSimplifier:
        type: haystack.components.generators.openai.OpenAIGenerator
        init_parameters:
          model: gpt-4o-mini
          streaming_callback: null
          api_base_url: null
          organization: null
          system_prompt: null
          generation_kwargs: null
          timeout: null
          max_retries: null
      simplify_language:
        type: haystack.components.builders.prompt_builder.PromptBuilder
        init_parameters:
          template: |-
            You are an expert at explaining complex topics to children. Your task is to rewrite this contract summary so that a 6th grader (11-12 years old) can understand it. Here is the contract summary:
            {{ summary[0] }}
            Follow these rules when simplifying the summary:
            1. LANGUAGE RULES
            - Use simple words that a 6th grader knows
            - Keep sentences short (15 words or less)
            - Use active voice instead of passive voice
            - Replace legal terms with everyday words
            - Define any complex words you must use
            - Use "you" and "they" instead of formal names when possible
            2. EXPLANATION STYLE
            - Start each section with "This part is about..."
            - Use real-life examples that kids understand
            - Compare complex ideas to everyday situations
            - Break long explanations into smaller chunks
            - Use bullet points for lists
            - Add helpful "Think of it like..." comparisons
            3. ORGANIZATION
            - Group similar ideas together
            - Use friendly headers like "Money Stuff" instead of "Financial Terms"
            - Put the most important information first
            - Use numbered lists for steps or sequences
            - Add transition words (first, next, finally)
            4. MAKE IT RELATABLE
            - Compare contract parts to things kids know:
              * Compare deadlines to homework due dates
              * Compare payments to allowance
              * Compare obligations to classroom rules
              * Compare penalties to time-outs
              * Compare termination to quitting a sports team
            5. REQUIRED SECTIONS
            Simplify each of these areas:
            - Who is involved (like players on a team)
            - What each person must do (like chores)
            - Money and payments (like saving allowance)
            - Important dates (like calendar events)
            - Rules everyone must follow (like game rules)
            - What happens if someone breaks the rules
            - How to end the agreement
            - How to solve disagreements
            6. FORMAT YOUR ANSWER LIKE THIS:
            "Hi! I'm going to explain this contract in a way that's easy to understand. Think of a contract like a written promise between people or companies.
            [Then break down each section with simple explanations and examples]
            Remember: Even though we're making this simple, all parts of the original summary must be included - just explained in a kid-friendly way!"
            IMPORTANT REMINDERS:
            - Don't leave out any important information just because it's complex
            - Double-check that your explanations are accurate
            - Keep a friendly, encouraging tone
            - Use emoji or simple symbols to mark important points (⭐, 📅, 💰, ⚠️)
            - Break up long paragraphs
            - End with a simple summary of the most important points
            Your goal is to make the contract summary so clear that a 6th grader could explain it to their friend. Imagine you're the cool teacher who makes complicated stuff fun and easy to understand!
          required_variables: null
          variables: null
      generate_summary:
        type: haystack.components.builders.prompt_builder.PromptBuilder
        init_parameters:
          template: |-
            You are a legal document analyzer specializing in contract summarization. Your task is to analyze and summarize the following contract comprehensively.
            Contract to analyze: {{ contract }}
            Provide a structured summary that includes ALL of the following components:
            1. BASIC INFORMATION
            - Contract type
            - Parties involved (full legal names)
            - Effective date
            - Duration/term of contract
            - Governing law/jurisdiction
            2. KEY OBLIGATIONS
            - List all major responsibilities for each party
            - Highlight any conditional obligations
            - Note any performance metrics or standards
            - Identify delivery or service deadlines
            - Specify any reporting requirements
            3. FINANCIAL TERMS
            - Payment amounts and schedules
            - Currency specifications
            - Payment methods
            - Late payment penalties
            - Price adjustment mechanisms
            - Any additional fees or charges
            4. CRITICAL DATES & DEADLINES
            - Contract start date
            - Contract end date
            - Key milestone dates
            - Notice period requirements
            - Renewal deadlines
            - Termination notice periods
            5. TERMINATION & AMENDMENTS
            - Termination conditions
            - Early termination rights
            - Amendment procedures
            - Notice requirements
            - Consequences of termination
            6. WARRANTIES & REPRESENTATIONS
            - List all warranties provided
            - Duration of warranties
            - Warranty limitations
            - Representations made by each party
            - Disclaimer provisions
            7. LIABILITY & INDEMNIFICATION
            - Liability limitations
            - Indemnification obligations
            - Insurance requirements
            - Force majeure provisions
            - Exclusions and exceptions
            8. CONFIDENTIALITY & IP
            - Scope of confidential information
            - Duration of confidentiality
            - Intellectual property rights
            - Usage restrictions
            - Return/destruction requirements
            9. DISPUTE RESOLUTION
            - Resolution process
            - Arbitration requirements
            - Mediation procedures
            - Jurisdiction
            - Governing law
            10. SPECIAL PROVISIONS
            - Any unique or unusual terms
            - Special conditions
            - Specific industry requirements
            - Regulatory compliance requirements
            - Any attachments or referenced documents
            Format your response as follows:
            - Use clear headings for each section
            - Use bullet points for individual items
            - Bold any critical terms, dates, or amounts
            - Include section references from the original contract
            - Note if any standard section appears to be missing from the contract
            IMPORTANT GUIDELINES:
            - If any of the above sections are not present in the contract, explicitly note their absence
            - Flag any unusual or potentially problematic clauses
            - Highlight any ambiguous terms that might need clarification
            - Include exact quotes for crucial legal language
            - Note any apparent gaps or inconsistencies in the contract
            - Identify any terms that appear to deviate from standard industry practice
            The summary must be comprehensive enough to serve as a quick reference for all material aspects of the contract while highlighting any areas requiring special attention or clarification.
          required_variables: null
          variables: null
      Summarizer:
        type: haystack.components.generators.openai.OpenAIGenerator
        init_parameters:
          model: gpt-4o-mini
      AnswerBuilder:
        type: haystack.components.builders.answer_builder.AnswerBuilder
        init_parameters:
          pattern: null
          reference_pattern: null
    connections:
      - sender: generate_summary.prompt
        receiver: Summarizer.prompt
      - sender: simplify_language.prompt
        receiver: LanguageSimplifier.prompt
      - sender: LanguageSimplifier.replies
        receiver: AnswerBuilder.replies
      - sender: Summarizer.replies
        receiver: simplify_language.summary
    max_runs_per_component: 100
    metadata: {}
    inputs:
      query:
        - generate_summary.contract
        - AnswerBuilder.query
    outputs:
      answers: AnswerBuilder.answers

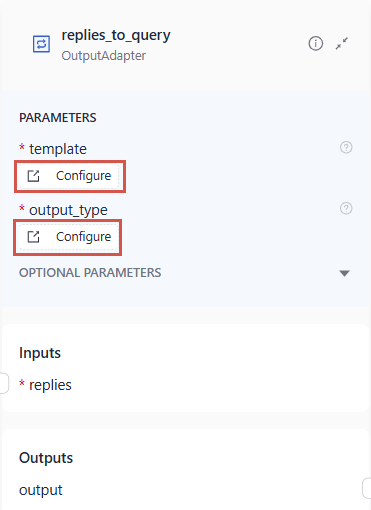

Incompatible Connection Types

For components to work together, their output and input types must match. If they don’t, you can use an OutputAdapter to bridge the gap. The OutputAdapter converts one component’s output into the format required by the next component.

Let's look at an example RAG chat pipeline available as a template in Haystack Enterprise Platform. It consists of the following steps:

- Chat summary: The first LLM receives the question together with the prompt from the PromptBuilder. It then reformulates the question to make it more suitable for retrieval, considering any chat history context.

- Retrieval: The reformulated question is sent to keyword and vector retrievers that fetch relevant documents.

- Document combination: DocumentJoiner combines the results from both retrievers.

- Document ranking: The Ranker reranks the combined documents based on their relevance to the query and sends them to the second PromptBuilder.

- Answer generation: The Generator receives the documents and the prompt from the PromptBuilder and, based on them, generates an answer. The AnswerBuilder then turns it into a GeneratedAnswer object.

In the first two steps, we need to send the LLM's replies to the Retrievers. replies is a list of strings, while a Retriever only accepts a single string as input (query). This means we need to use an OutputAdapter to convert the list of strings into a single string that the Retrievers can accept.

This is how you configure OutputAdapter to change the input type:

- Set the template to {{ replies[0] }}.

- Set the output_type to str.
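As a rough illustration of what this conversion amounts to, here is a plain-Python sketch. It does not use the OutputAdapter itself: the function name `replies_to_query` and the sample reply are made up for the example, and the `output` key is chosen to mirror the `replies_to_query.output` sender used in the pipeline connections.

```python
from typing import Dict, List


def replies_to_query(replies: List[str]) -> Dict[str, str]:
    """Plain-Python equivalent of the template {{ replies[0] }} with output type str."""
    if not replies:
        # An empty list has no first item, so there is nothing to convert.
        raise ValueError("The LLM returned no replies to convert.")
    # Take the first reply and expose it as a single string.
    return {"output": replies[0]}


# Hypothetical reply from the first LLM: a list with one reformulated question.
llm_replies = ["What is the refund policy for annual plans?"]
query = replies_to_query(llm_replies)["output"]
print(type(query).__name__, "->", query)
```

The resulting single string is what components expecting a `str` query can accept.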

Then, connect the OutputAdapter to receive input from the first LLM and send the converted output (query) to:

- Keyword retriever

- Query embedder

- Ranker

- The second PromptBuilder

- AnswerBuilder

Here is the complete pipeline YAML:

    # If you need help with the YAML format, have a look at https://docs.cloud.deepset.ai/v2.0/docs/create-a-pipeline#create-a-pipeline-using-pipeline-editor.
    # This section defines components that you want to use in your pipelines. Each component must have a name and a type. You can also set the component's parameters here.
    # The name is up to you, you can give your component a friendly name. You then use components' names when specifying the connections in the pipeline.
    # Type is the class path of the component. You can check the type on the component's documentation page.
    components:
      chat_summary_prompt_builder:
        type: haystack.components.builders.prompt_builder.PromptBuilder
        init_parameters:
          template: |-
            You are part of a chatbot.
            You receive a question (Current Question) and a chat history.
            Use the context from the chat history and reformulate the question so that it is suitable for retrieval augmented generation.
            If X is followed by Y, only ask for Y and do not repeat X again.
            If the question does not require any context from the chat history, output it unedited.
            Don't make questions too long, but short and precise.
            Stay as close as possible to the current question.
            Only output the new question, nothing else!
            {{ question }}
            New question:
      chat_summary_llm:
        type: haystack.components.generators.openai.OpenAIGenerator
        init_parameters:
          api_key: {"type": "env_var", "env_vars": ["OPENAI_API_KEY"], "strict": False}
          model: "gpt-4o"
          generation_kwargs:
            max_tokens: 650
            temperature: 0.0
            seed: 0
      replies_to_query:
        type: haystack.components.converters.output_adapter.OutputAdapter
        init_parameters:
          template: "{{ replies[0] }}"
          output_type: str
      bm25_retriever: # Selects the most similar documents from the document store
        type: haystack_integrations.components.retrievers.opensearch.bm25_retriever.OpenSearchBM25Retriever
        init_parameters:
          document_store:
            type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
            init_parameters:
              use_ssl: True
              verify_certs: False
              hosts:
                - ${OPENSEARCH_HOST}
              http_auth:
                - "${OPENSEARCH_USER}"
                - "${OPENSEARCH_PASSWORD}"
              embedding_dim: 768
              similarity: cosine
          top_k: 20 # The number of results to return
      query_embedder:
        type: haystack.components.embedders.sentence_transformers_text_embedder.SentenceTransformersTextEmbedder
        init_parameters:
          model: "intfloat/e5-base-v2"
      embedding_retriever: # Selects the most similar documents from the document store
        type: haystack_integrations.components.retrievers.opensearch.embedding_retriever.OpenSearchEmbeddingRetriever
        init_parameters:
          document_store:
            type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
            init_parameters:
              use_ssl: True
              verify_certs: False
              hosts:
                - ${OPENSEARCH_HOST}
              http_auth:
                - "${OPENSEARCH_USER}"
                - "${OPENSEARCH_PASSWORD}"
              embedding_dim: 768
              similarity: cosine
          top_k: 20 # The number of results to return
      document_joiner:
        type: haystack.components.joiners.document_joiner.DocumentJoiner
        init_parameters:
          join_mode: concatenate
      ranker:
        type: haystack.components.rankers.transformers_similarity.TransformersSimilarityRanker
        init_parameters:
          model: "intfloat/simlm-msmarco-reranker"
          top_k: 8
          model_kwargs:
            torch_dtype: "torch.float16"
      qa_prompt_builder:
        type: haystack.components.builders.prompt_builder.PromptBuilder
        init_parameters:
          template: |-
            You are a technical expert.
            You answer questions truthfully based on provided documents.
            Ignore typing errors in the question.
            For each document check whether it is related to the question.
            Only use documents that are related to the question to answer it.
            Ignore documents that are not related to the question.
            If the answer exists in several documents, summarize them.
            Only answer based on the documents provided. Don't make things up.
            Just output the structured, informative and precise answer and nothing else.
            If the documents can't answer the question, say so.
            Always use references in the form [NUMBER OF DOCUMENT] when using information from a document, e.g. [3] for Document[3].
            Never name the documents, only enter a number in square brackets as a reference.
            The reference must only refer to the number that comes in square brackets after the document.
            Otherwise, do not use brackets in your answer and reference ONLY the number of the document without mentioning the word document.
            These are the documents:
            {% for document in documents %}
            Document[{{ loop.index }}]:
            {{ document.content }}
            {% endfor %}
            Question: {{ question }}
            Answer:
      qa_llm:
        type: haystack.components.generators.openai.OpenAIGenerator
        init_parameters:
          api_key: {"type": "env_var", "env_vars": ["OPENAI_API_KEY"], "strict": False}
          model: "gpt-4o"
          generation_kwargs:
            max_tokens: 650
            temperature: 0.0
            seed: 0
      answer_builder:
        type: deepset_cloud_custom_nodes.augmenters.deepset_answer_builder.DeepsetAnswerBuilder
        init_parameters:
          reference_pattern: acm
    connections: # Defines how the components are connected
      - sender: chat_summary_prompt_builder.prompt
        receiver: chat_summary_llm.prompt
      - sender: chat_summary_llm.replies
        receiver: replies_to_query.replies
      - sender: replies_to_query.output
        receiver: bm25_retriever.query
      - sender: replies_to_query.output
        receiver: query_embedder.text
      - sender: replies_to_query.output
        receiver: ranker.query
      - sender: replies_to_query.output
        receiver: qa_prompt_builder.question
      - sender: replies_to_query.output
        receiver: answer_builder.query
      - sender: bm25_retriever.documents
        receiver: document_joiner.documents
      - sender: query_embedder.embedding
        receiver: embedding_retriever.query_embedding
      - sender: embedding_retriever.documents
        receiver: document_joiner.documents
      - sender: document_joiner.documents
        receiver: ranker.documents
      - sender: ranker.documents
        receiver: qa_prompt_builder.documents
      - sender: ranker.documents
        receiver: answer_builder.documents
      - sender: qa_prompt_builder.prompt
        receiver: qa_llm.prompt
      - sender: qa_prompt_builder.prompt
        receiver: answer_builder.prompt
      - sender: qa_llm.replies
        receiver: answer_builder.replies
    inputs: # Define the inputs for your pipeline
      query: # These components will receive the query as input
        - "chat_summary_prompt_builder.question"
      filters: # These components will receive a potential query filter as input
        - "bm25_retriever.filters"
        - "embedding_retriever.filters"
    outputs: # Defines the output of your pipeline
      documents: "ranker.documents" # The output of the pipeline is the retrieved documents
      answers: "answer_builder.answers" # The output of the pipeline is the generated answers

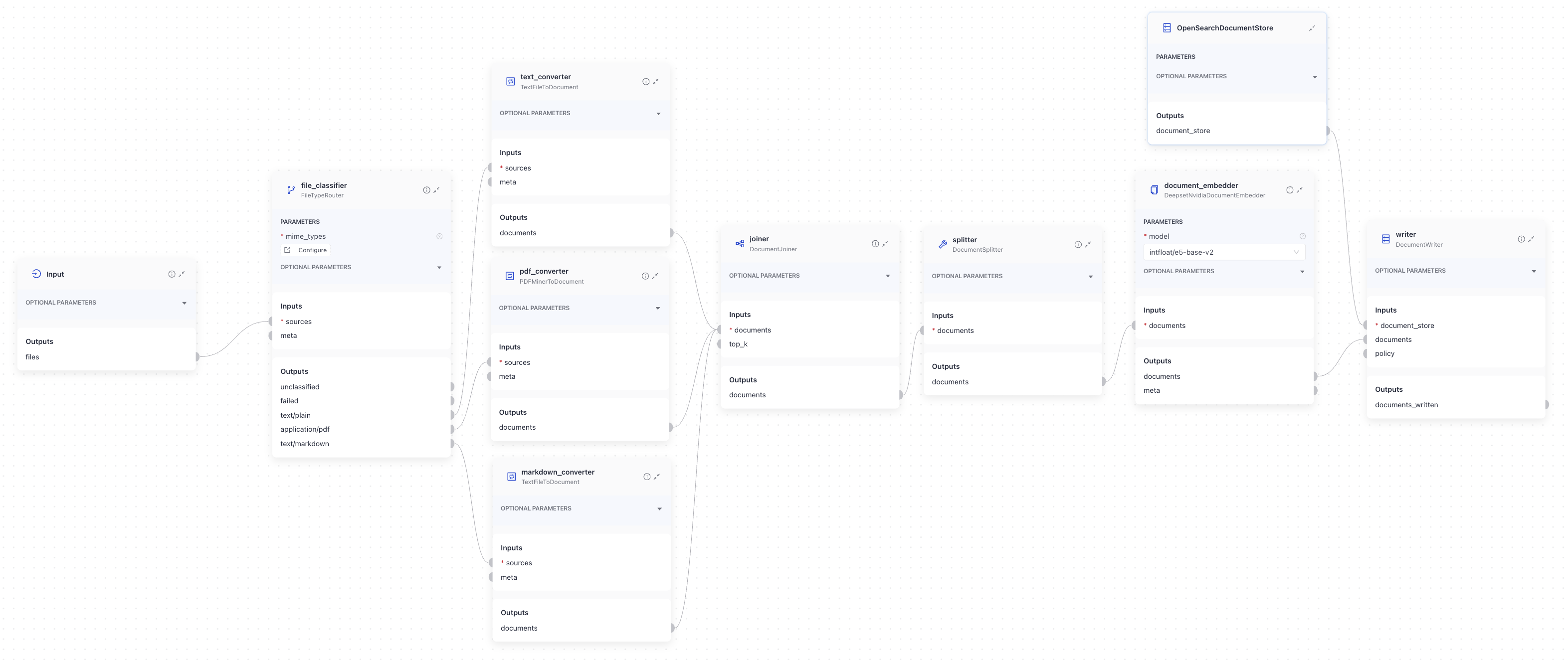

Merging Multiple Outputs

Most components only accept a single input. If you need to combine the outputs of multiple components into one input for the next step, use a component from the Joiners group. Options include AnswerJoiner, DocumentJoiner, BranchJoiner, and StringJoiner.

A common scenario involves processing files in different formats. Your indexing pipeline might include converters for various file types (for example, text, PDF, Markdown). Each converter outputs a list of documents, but a PreProcessor like DocumentSplitter can only accept a single input.

To solve this:

- Use DocumentJoiner to merge the outputs from all your converters into a single list of documents.

- Send the combined output to DocumentSplitter for further processing.

This setup ensures all documents, regardless of format, are processed seamlessly as a single input.
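The merging step can be pictured with a small plain-Python sketch. It only illustrates simple concatenation: `join_documents` and the dictionary-based documents are hypothetical stand-ins, and the actual joiner component may additionally handle duplicates and scores, which this sketch leaves out.

```python
from itertools import chain
from typing import Dict, List

# Hypothetical minimal document representation used only for this sketch.
Document = Dict[str, str]


def join_documents(*document_lists: List[Document]) -> List[Document]:
    """Concatenate several lists of documents into one list, preserving order."""
    return list(chain.from_iterable(document_lists))


# Each converter produces its own list of documents.
text_docs = [{"content": "Notes from a .txt file"}]
pdf_docs = [{"content": "Page 1 of a PDF"}, {"content": "Page 2 of a PDF"}]
markdown_docs = [{"content": "A README section"}]

# The joiner turns three inputs into the single list the next step expects.
merged = join_documents(text_docs, pdf_docs, markdown_docs)
print(len(merged))
```

The next component then receives `merged` as its one input, regardless of which converter produced each document.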

Here’s an example pipeline:


    components:
      file_classifier:
        type: haystack.components.routers.file_type_router.FileTypeRouter
        init_parameters:
          mime_types:
            - text/plain
            - application/pdf
            - text/markdown
      text_converter:
        type: haystack.components.converters.txt.TextFileToDocument
        init_parameters:
          encoding: utf-8
      pdf_converter:
        type: haystack.components.converters.pypdf.PyPDFToDocument
        init_parameters:
          converter: null
      markdown_converter:
        type: haystack.components.converters.markdown.MarkdownToDocument
        init_parameters:
          table_to_single_line: false
      joiner: # Merges the outputs of all converters into a single list of documents
        type: haystack.components.joiners.document_joiner.DocumentJoiner
        init_parameters:
          join_mode: concatenate
          sort_by_score: false
      splitter:
        type: deepset_cloud_custom_nodes.preprocessors.document_splitter.DeepsetDocumentSplitter
        init_parameters:
          split_by: word
          split_length: 250
          split_overlap: 30
          respect_sentence_boundary: true
          language: en
      document_embedder:
        type: haystack.components.embedders.sentence_transformers_document_embedder.SentenceTransformersDocumentEmbedder
        init_parameters:
          model: "intfloat/e5-base-v2"
      writer:
        type: haystack.components.writers.document_writer.DocumentWriter
        init_parameters:
          document_store:
            type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
            init_parameters:
              embedding_dim: 768
              similarity: cosine
          policy: OVERWRITE
    connections: # Defines how the components are connected
      - sender: file_classifier.text/plain
        receiver: text_converter.sources
      - sender: file_classifier.application/pdf
        receiver: pdf_converter.sources
      - sender: file_classifier.text/markdown
        receiver: markdown_converter.sources
      - sender: text_converter.documents
        receiver: joiner.documents
      - sender: pdf_converter.documents
        receiver: joiner.documents
      - sender: markdown_converter.documents
        receiver: joiner.documents
      - sender: joiner.documents
        receiver: splitter.documents
      - sender: splitter.documents
        receiver: document_embedder.documents
      - sender: document_embedder.documents
        receiver: writer.documents
    max_runs_per_component: 100
    inputs: # Define the inputs for your pipeline
      files: "file_classifier.sources" # This component will receive the files to index as input

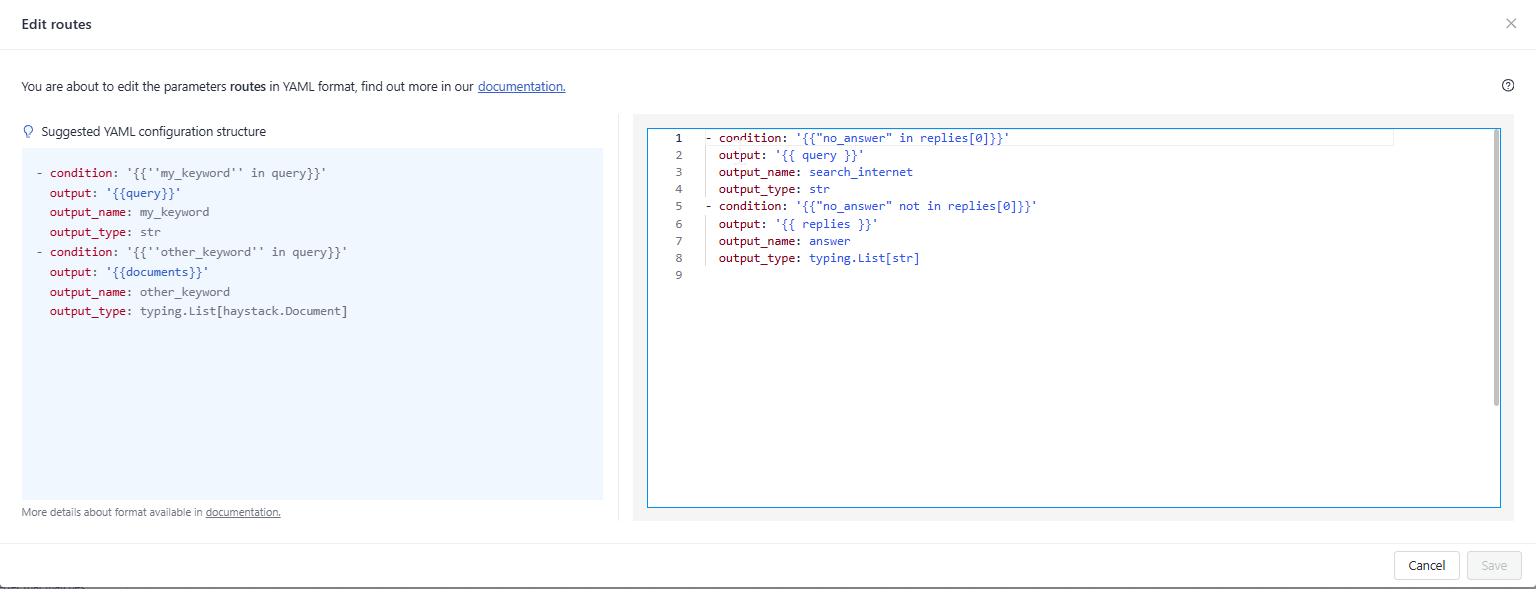

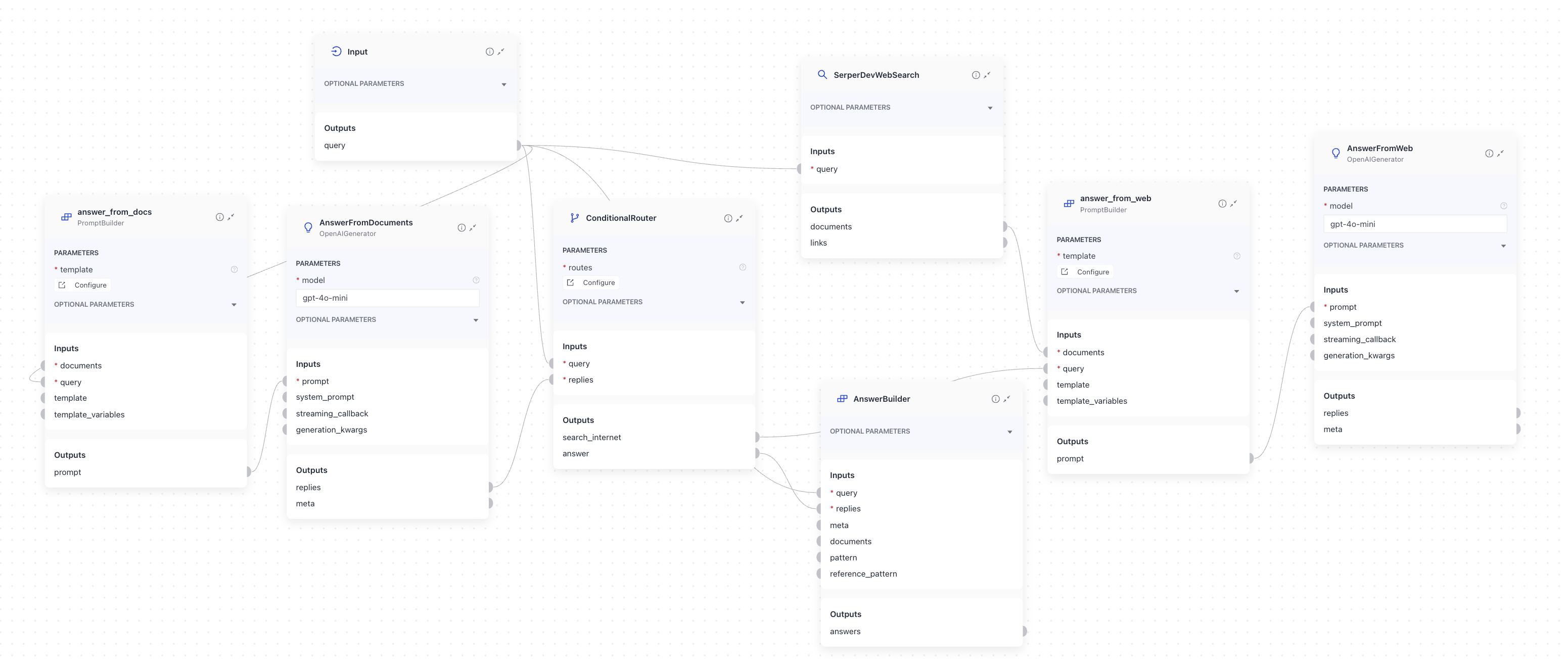

Routing Data Based on a Condition

You can route a component’s output to different branches in your pipeline based on specific conditions. The ConditionalRouter component handles this by using rules that you define.

The conditions for routing are specified in the ConditionalRouter’s routes parameter. Each route includes the following elements:

- condition: A Jinja2 expression that determines if the route should be used.

- output: A Jinja2 expression that defines the data passed to the route.

- output_name: The name of the route output, used to connect to other components.

- output_type: The data type of the route output.

Imagine a pipeline that:

- Uses an LLM to answer user queries based on provided documents.

- Falls back to a web search if the LLM cannot find an answer.

You would need two routes:

- One for queries the LLM cannot answer (no_answer is present).

- One for queries the LLM can answer based on the documents.

This is how you could configure the routes:

    - condition: '{{"no_answer" in replies[0]}}'
      output: '{{ query }}'
      output_name: search_internet
      output_type: str
    - condition: '{{"no_answer" not in replies[0]}}'
      output: '{{ replies }}'
      output_name: answer
      output_type: typing.List[str]

The first route is used if no_answer appears in the LLM’s replies. This route outputs the query and is labeled search_internet. The second route is used if no_answer is not present. This route outputs the LLM’s replies and is labeled answer.
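The decision logic of these two routes can be sketched in plain Python. This is a simplified stand-in rather than the router itself: the `ROUTES` list, the `route` function, and the sample inputs are hypothetical, and the Jinja2 expressions are replaced by ordinary Python lambdas.

```python
from typing import Any, Callable, Dict, List, Tuple

Variables = Dict[str, Any]
# Each route is (condition, output, output_name), mirroring the fields described above.
Route = Tuple[Callable[[Variables], bool], Callable[[Variables], Any], str]

ROUTES: List[Route] = [
    # Route 1: the LLM could not answer, so pass the query on for a web search.
    (lambda v: "no_answer" in v["replies"][0], lambda v: v["query"], "search_internet"),
    # Route 2: the LLM answered, so pass its replies on unchanged.
    (lambda v: "no_answer" not in v["replies"][0], lambda v: v["replies"], "answer"),
]


def route(query: str, replies: List[str]) -> Dict[str, Any]:
    """Return the output of the first route whose condition holds."""
    variables: Variables = {"query": query, "replies": replies}
    for condition, output, output_name in ROUTES:
        if condition(variables):
            return {output_name: output(variables)}
    raise ValueError("No route matched the given inputs.")


print(route("Who won the race?", ["no_answer"]))
print(route("Who won the race?", ["The blue team won."]))
```

Because the two conditions are exact opposites, one of the routes always matches, so the fallback error is never reached for non-empty replies.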

In this setup:

- A Generator is configured to reply with no_answer if it cannot find an answer based on the documents.

- The ConditionalRouter checks the Generator's reply and decides:

  - If the reply contains no_answer, the query is sent to the SerperDevWebSearch component to search the internet for an answer.

  - If no_answer is not present, the reply is sent to the AnswerBuilder to format and output the Generator’s response.

This is what this part of the pipeline could look like:

YAML configuration

    components:
      AnswerFromDocuments:
        type: haystack.components.generators.openai.OpenAIGenerator
        init_parameters:
          model: gpt-4o-mini
          streaming_callback: null
          api_base_url: null
          organization: null
          system_prompt: null
          generation_kwargs: null
          timeout: null
          max_retries: null
      SerperDevWebSearch: # to use this component, you need a SerperDev API key
        type: haystack.components.websearch.serper_dev.SerperDevWebSearch
        init_parameters:
          api_key:
            type: env_var
            env_vars:
              - serper_dev
            strict: false
          top_k: 5
          allowed_domains: null
          search_params: null
      answer_from_web:
        type: haystack.components.builders.prompt_builder.PromptBuilder
        init_parameters:
          template: |-
            Answer the following query given the documents retrieved from the web.
            Your answer should indicate that it was generated from a web search.
            Query: {{query}}
            Documents:
            {% for document in documents %}
            {{document.content}}
            {% endfor %}
          required_variables: null
          variables: null
      AnswerFromWeb:
        type: haystack.components.generators.openai.OpenAIGenerator
        init_parameters:
          model: gpt-4o-mini
          streaming_callback: null
          api_base_url: null
          organization: null
          system_prompt: null
          generation_kwargs: null
          timeout: null
          max_retries: null
      ConditionalRouter:
        type: haystack.components.routers.conditional_router.ConditionalRouter
        init_parameters:
          routes:
            - condition: '{{"no_answer" in replies[0]}}'
              output: '{{ query }}'
              output_name: search_internet
              output_type: str
            - condition: '{{"no_answer" not in replies[0]}}'
              output: '{{ replies }}'
              output_name: answer
              output_type: typing.List[str]
          custom_filters: {}
          unsafe: false
          validate_output_type: false
      answer_from_docs:
        type: haystack.components.builders.prompt_builder.PromptBuilder
        init_parameters:
          template: |-
            Answer the following query given the documents.
            If the answer is not contained within the documents reply with 'no_answer'
            Query: {{query}}
            Documents:
            {% for document in documents %}
            {{document.content}}
            {% endfor %}
          required_variables: null
          variables: null
      OpenSearchEmbeddingRetriever:
        type: haystack_integrations.components.retrievers.opensearch.embedding_retriever.OpenSearchEmbeddingRetriever
        init_parameters:
          document_store:
            type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
            init_parameters:
              use_ssl: true
              verify_certs: false
              hosts:
                - ${OPENSEARCH_HOST}
              http_auth:
                - ${OPENSEARCH_USER}
                - ${OPENSEARCH_PASSWORD}
              embedding_dim: 768
              similarity: cosine
          filters: null
          top_k: 10
          filter_policy: replace
          custom_query: null
          raise_on_failure: true
          efficient_filtering: false
      AnswerJoiner:
        type: haystack.components.joiners.answer_joiner.AnswerJoiner
        init_parameters:
          join_mode: concatenate
          top_k: null
          sort_by_score: false
      AnswerBuilder:
        type: haystack.components.builders.answer_builder.AnswerBuilder
        init_parameters:
          pattern: null
          reference_pattern: null
      AnswerBuilder_1:
        type: haystack.components.builders.answer_builder.AnswerBuilder
        init_parameters:
          pattern: null
          reference_pattern: null
      SentenceTransformersTextEmbedder:
        type: haystack.components.embedders.sentence_transformers_text_embedder.SentenceTransformersTextEmbedder
        init_parameters:
          model: intfloat/e5-base-v2
          device: null
          token: null
          prefix: ''
          suffix: ''
          batch_size: 32
          progress_bar: true
          normalize_embeddings: false
          trust_remote_code: false
          truncate_dim: null
          model_kwargs: null
          tokenizer_kwargs: null
          config_kwargs: null
          precision: float32
    connections:
      - sender: answer_from_web.prompt
        receiver: AnswerFromWeb.prompt
      - sender: AnswerFromDocuments.replies
        receiver: ConditionalRouter.replies
      - sender: SerperDevWebSearch.documents
        receiver: answer_from_web.documents
      - sender: ConditionalRouter.search_internet
        receiver: answer_from_web.query
      - sender: answer_from_docs.prompt
        receiver: AnswerFromDocuments.prompt
      - sender: OpenSearchEmbeddingRetriever.documents
        receiver: answer_from_docs.documents
      - sender: ConditionalRouter.answer
        receiver: AnswerBuilder.replies
      - sender: AnswerFromWeb.replies
        receiver: AnswerBuilder_1.replies
      - sender: AnswerBuilder.answers
        receiver: AnswerJoiner.answers
      - sender: AnswerBuilder_1.answers
        receiver: AnswerJoiner.answers
      - sender: SentenceTransformersTextEmbedder.embedding
        receiver: OpenSearchEmbeddingRetriever.query_embedding
    max_runs_per_component: 100
    metadata: {}
    inputs:
      query:
        - ConditionalRouter.query
        - answer_from_docs.query
        - AnswerBuilder.query
        - AnswerBuilder_1.query
        - SerperDevWebSearch.query
        - SentenceTransformersTextEmbedder.text
    outputs:
      answers: AnswerJoiner.answers
