Use Azure Document Intelligence

Convert files to documents using Azure's Document Intelligence service.

Azure Document Intelligence extracts text from files in the following formats:

- PDF

- JPEG

- PNG

- BMP

- TIFF

- DOCX

- XLSX

- PPTX

- HTML
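A quick local pre-check can tell you whether a file is worth sending to the converter. The following is only a sketch: the helper name `is_azure_convertible` and the extension set are assumptions made for illustration (including the alternate spellings `.jpg` and `.tif`), not part of any deepset or Azure API. PDF is included because the example pipeline on this page routes PDF files to the Azure converter.

```python
from pathlib import Path

# Hypothetical extension set derived from the format list above.
# ".jpg" and ".tif" are assumed alternate spellings of JPEG and TIFF.
# ".pdf" is included because the example pipeline sends PDFs to the converter.
AZURE_EXTENSIONS = {
    ".pdf", ".jpg", ".jpeg", ".png", ".bmp", ".tif", ".tiff",
    ".docx", ".xlsx", ".pptx", ".html",
}


def is_azure_convertible(filename: str) -> bool:
    """Return True if the file extension is in the assumed supported set."""
    return Path(filename).suffix.lower() in AZURE_EXTENSIONS
```

For example, `is_azure_convertible("scan.TIFF")` is true, while a `.csv` file would be rejected by this check.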

For more details on the service capabilities, see the Azure Document Intelligence website. For a list of models you can use to process your files, see the model overview in the Document Intelligence documentation.

Prerequisites

You need an API key from an Azure account that has a Document Intelligence resource. For details, see Get started with Document Intelligence in the Azure documentation.

Use Azure Document Intelligence

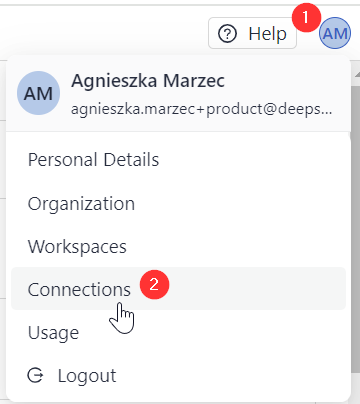

First, connect deepset Cloud to Azure Document Intelligence through the Connections page:

- Click your initials in the top right corner and select Connections.

- Click Connect next to the provider.

- Enter your user access token and submit it.

Then, add the AzureOCRDocumentConverter component to your indexing pipeline.
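If you want to try the converter outside a pipeline first, the same component can be constructed in plain Haystack. This is a minimal sketch, assuming the `haystack-ai` and `azure-ai-formrecognizer` packages are installed and that the `AZURE_AI_API_KEY` environment variable holds your key. The function name `convert_with_azure` is hypothetical, and the parameter values simply mirror the ones used in the example pipeline on this page.

```python
from typing import List


def convert_with_azure(endpoint: str, paths: List[str]):
    """Convert files with Azure Document Intelligence and return Haystack documents."""
    # Imported lazily so this module still loads where Haystack is not installed.
    from haystack.components.converters import AzureOCRDocumentConverter
    from haystack.utils import Secret

    converter = AzureOCRDocumentConverter(
        endpoint=endpoint,
        # Read the key from the environment instead of hard-coding it.
        api_key=Secret.from_env_var("AZURE_AI_API_KEY", strict=False),
        model_id="prebuilt-read",
        page_layout="natural",
    )
    # run() returns a dictionary; the converted documents are under "documents".
    result = converter.run(sources=paths)
    return result["documents"]
```

A call such as `convert_with_azure("YOUR-ENDPOINT", ["invoice.pdf"])` (with a hypothetical local file) would send the file to your Document Intelligence resource and return the extracted documents.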

Usage Examples

This is an example of an indexing pipeline that uses Azure's OCR converter to process PDF files:

components:
  file_classifier:
    type: haystack.components.routers.file_type_router.FileTypeRouter
    init_parameters:
      mime_types:
        - text/plain
        - application/pdf
        - text/markdown
        - text/html
        - application/vnd.openxmlformats-officedocument.wordprocessingml.document
        - application/vnd.openxmlformats-officedocument.presentationml.presentation

  text_converter:
    type: haystack.components.converters.txt.TextFileToDocument
    init_parameters:
      encoding: utf-8

  ocr_converter: # This is the Azure OCR converter
    type: haystack.components.converters.azure.AzureOCRDocumentConverter
    init_parameters:
      api_key: {"type": "env_var", "env_vars": ["AZURE_AI_API_KEY"], "strict": false}
      endpoint: "YOUR-ENDPOINT"
      model_id: "prebuilt-read"
      page_layout: "natural"

  markdown_converter:
    type: haystack.components.converters.markdown.MarkdownToDocument
    init_parameters:
      table_to_single_line: false

  html_converter:
    type: haystack.components.converters.html.HTMLToDocument
    init_parameters:
      # A dictionary of keyword arguments to customize how you want to extract content from your HTML files.
      # For the full list of available arguments, see
      # the [Trafilatura documentation](https://trafilatura.readthedocs.io/en/latest/corefunctions.html#extract).
      extraction_kwargs:
        output_format: txt # Extract text from HTML. You can also choose "markdown"
        target_language: null # You can define a language (using the ISO 639-1 format) to discard documents that don't match that language.
        include_tables: true # If true, includes tables in the output
        include_links: false # If true, keeps links along with their targets

  docx_converter:
    type: haystack.components.converters.docx.DOCXToDocument
    init_parameters: {}

  pptx_converter:
    type: haystack.components.converters.pptx.PPTXToDocument
    init_parameters: {}

  joiner:
    type: haystack.components.joiners.document_joiner.DocumentJoiner
    init_parameters:
      join_mode: concatenate
      sort_by_score: false

  splitter:
    type: deepset_cloud_custom_nodes.preprocessors.document_splitter.DeepsetDocumentSplitter
    init_parameters:
      split_by: word
      split_length: 250
      split_overlap: 30
      respect_sentence_boundary: True
      language: en

  document_embedder:
    type: haystack.components.embedders.sentence_transformers_document_embedder.SentenceTransformersDocumentEmbedder
    init_parameters:
      model: "intfloat/e5-base-v2"

  writer:
    type: haystack.components.writers.document_writer.DocumentWriter
    init_parameters:
      document_store:
        type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
        init_parameters:
          embedding_dim: 768
          similarity: cosine
      policy: OVERWRITE

connections: # Defines how the components are connected
  - sender: file_classifier.text/plain
    receiver: text_converter.sources
  - sender: file_classifier.application/pdf
    receiver: ocr_converter.sources # Azure converter receives PDF files
  - sender: file_classifier.text/markdown
    receiver: markdown_converter.sources
  - sender: file_classifier.text/html
    receiver: html_converter.sources
  - sender: file_classifier.application/vnd.openxmlformats-officedocument.wordprocessingml.document
    receiver: docx_converter.sources
  - sender: file_classifier.application/vnd.openxmlformats-officedocument.presentationml.presentation
    receiver: pptx_converter.sources
  - sender: text_converter.documents
    receiver: joiner.documents
  - sender: ocr_converter.documents # It then sends the resulting documents to DocumentJoiner
    receiver: joiner.documents
  - sender: markdown_converter.documents
    receiver: joiner.documents
  - sender: html_converter.documents
    receiver: joiner.documents
  - sender: docx_converter.documents
    receiver: joiner.documents
  - sender: pptx_converter.documents
    receiver: joiner.documents
  - sender: joiner.documents
    receiver: splitter.documents
  - sender: splitter.documents
    receiver: document_embedder.documents
  - sender: document_embedder.documents
    receiver: writer.documents

max_loops_allowed: 100

inputs: # Define the inputs for your pipeline
  files: "file_classifier.sources" # This component will receive the files to index as input
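
To make the routing in this pipeline easier to follow, here is a small standalone sketch of the idea: a file's MIME type decides which converter receives it. This is not how `FileTypeRouter` is implemented. The lookup tables and the `route` function are hypothetical and only mirror the `mime_types` list and the `connections` section of the example. Files that match none of the listed types fall into an `unclassified` bucket, which this pipeline leaves unconnected.

```python
from pathlib import Path

# Hypothetical extension-to-MIME table, limited to the types listed
# under file_classifier in the example pipeline.
MIME_BY_EXTENSION = {
    ".txt": "text/plain",
    ".pdf": "application/pdf",
    ".md": "text/markdown",
    ".html": "text/html",
    ".docx": "application/vnd.openxmlformats-officedocument.wordprocessingml.document",
    ".pptx": "application/vnd.openxmlformats-officedocument.presentationml.presentation",
}

# MIME type to converter component name, mirroring the connections section.
CONVERTER_BY_MIME = {
    "text/plain": "text_converter",
    "application/pdf": "ocr_converter",  # PDFs go to the Azure OCR converter
    "text/markdown": "markdown_converter",
    "text/html": "html_converter",
    "application/vnd.openxmlformats-officedocument.wordprocessingml.document": "docx_converter",
    "application/vnd.openxmlformats-officedocument.presentationml.presentation": "pptx_converter",
}


def route(filename: str) -> str:
    """Return the name of the converter that would receive this file."""
    mime = MIME_BY_EXTENSION.get(Path(filename).suffix.lower())
    return CONVERTER_BY_MIME.get(mime, "unclassified")
```

With these tables, `route("report.pdf")` returns `"ocr_converter"`, while an image such as `photo.png` is `"unclassified"`. To process images with Azure in the pipeline, you would add their MIME types to `file_classifier` and connect them to `ocr_converter.sources`.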
