Evaluating Your Pipeline

After you create a pipeline, check how it performs. You do this by collecting user feedback and by running experiments, which evaluate your pipeline systematically. Based on the results, you can then decide how to improve it.

Why Should I Evaluate?

Before bringing your pipeline to production, you want to make sure it's useful, provides relevant results, and serves the intended purpose. By evaluating your app, you can determine how effective it is and how satisfied the users are.

Metrics to Consider

Understand your use case and consider what’s important for your pipeline to work well. Which metrics do you want to focus on? For example, in a simple document search where you show five results, it may be fine if two of them are irrelevant and their order is not important. In such a case, you may want to focus on recall rather than mean reciprocal rank.

On the other hand, if you have a RAG pipeline with document retrieval as its first stage, you want all retrieved documents to be relevant because the large language model may have a limited context length. In such a case, your most important metric may be precision.
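To make the trade-off concrete, here is a minimal sketch of the three metrics mentioned above, computed for a single query. It assumes one common definition of each metric and uses made-up document IDs; the function names are illustrative and are not part of deepset Cloud.

```python
from typing import Sequence, Set


def precision_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Share of the top-k retrieved documents that are relevant."""
    top_k = retrieved[:k]
    if not top_k:
        return 0.0
    return sum(doc in relevant for doc in top_k) / len(top_k)


def recall_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Share of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved: Sequence[str], relevant: Set[str]) -> float:
    """1 / rank of the first relevant document, or 0.0 if none was retrieved."""
    for rank, doc in enumerate(retrieved, start=1):
        if doc in relevant:
            return 1.0 / rank
    return 0.0


# Hypothetical search result: five documents shown, two of them irrelevant.
retrieved = ["d7", "d2", "d9", "d4", "d1"]
relevant = {"d2", "d4", "d1"}

precision = precision_at_k(retrieved, relevant, k=5)
recall = recall_at_k(retrieved, relevant, k=5)
rr = reciprocal_rank(retrieved, relevant)

print(f"precision@5={precision:.2f} recall@5={recall:.2f} reciprocal rank={rr:.2f}")
```

In this example, all relevant documents are found, so recall is perfect, while precision and reciprocal rank are penalized by the irrelevant documents and their position. Mean reciprocal rank is the average of the reciprocal rank over all queries in an evaluation set.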

Having said that, remember that metrics are just a tool to help you evaluate your system. It may happen that the metric values seem low, but the users are happy with your pipeline, and that’s fine as well.

For an in-depth explanation of the metrics, see Evaluation Metrics.

Evaluation Overview

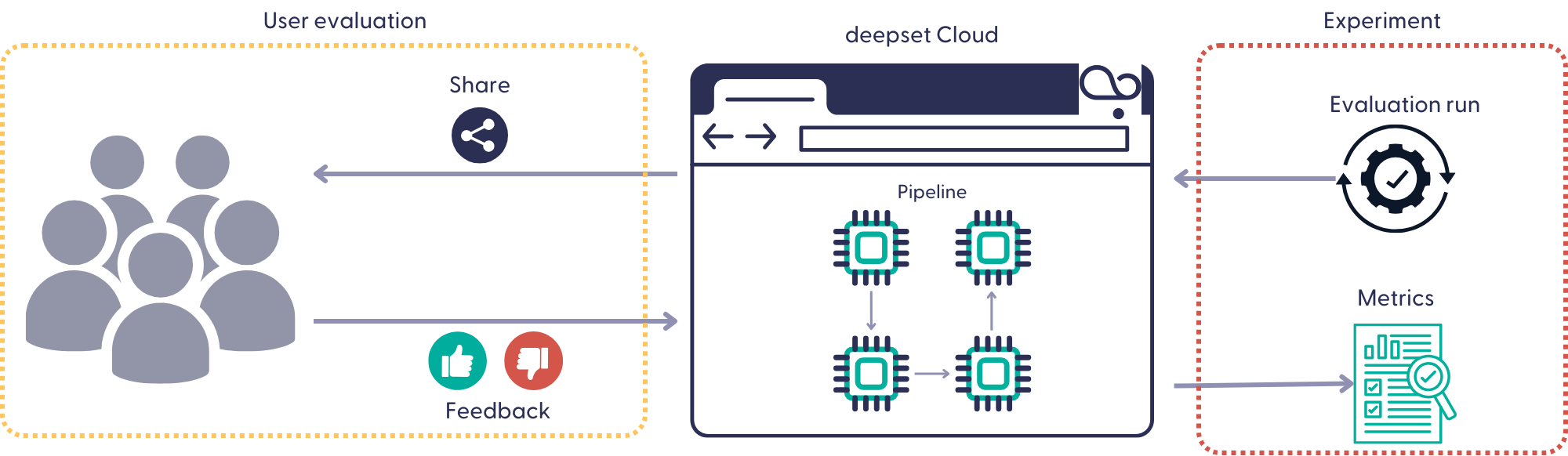

There are two main stages to evaluating a pipeline:

- Evaluating with users

- Running experiments

Your pipeline is meant to serve the users, so it’s important to know how it works for them and whether they’re happy with the results it returns. You can share your pipeline prototype with external users and collect their feedback in the deepset Cloud interface.

Apart from knowing how the users like your pipeline, it’s also useful to evaluate it systematically based on metrics. This is what experiments are for. During an experiment, your system runs against an evaluation dataset. As a result, you get detailed metrics for your system as a whole and for its components in isolation. This can give you valuable cues as to how to improve it.
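Conceptually, an experiment loops over the evaluation dataset, runs each query, and averages the per-query scores. The sketch below illustrates this idea for a single component (a retriever). It is not how deepset Cloud implements experiments: the `retrieve` callable, the `query` and `relevant_ids` keys, and the toy data are all assumptions made for illustration.

```python
from statistics import mean
from typing import Callable, Dict, List


def evaluate_retriever(
    retrieve: Callable[[str], List[str]],
    eval_set: List[Dict],
    k: int = 5,
) -> Dict[str, float]:
    """Run every query in the evaluation set and average recall@k and reciprocal rank."""
    if not eval_set:
        raise ValueError("The evaluation set is empty.")

    recalls, reciprocal_ranks = [], []
    for item in eval_set:
        results = retrieve(item["query"])[:k]
        relevant = set(item["relevant_ids"])

        # Recall@k: share of the relevant documents found in the top-k results.
        found = set(results) & relevant
        recalls.append(len(found) / len(relevant) if relevant else 0.0)

        # Reciprocal rank: 1 / position of the first relevant result.
        first_hit = next(
            (rank for rank, doc in enumerate(results, start=1) if doc in relevant),
            None,
        )
        reciprocal_ranks.append(1.0 / first_hit if first_hit else 0.0)

    return {"recall": mean(recalls), "mrr": mean(reciprocal_ranks)}


# Toy stand-in for a real retriever: a fixed lookup table (hypothetical data).
TOY_INDEX = {"q1": ["a", "x", "b"], "q2": ["y", "c"]}


def toy_retrieve(query: str) -> List[str]:
    return TOY_INDEX.get(query, [])


eval_set = [
    {"query": "q1", "relevant_ids": ["a", "b"]},
    {"query": "q2", "relevant_ids": ["c"]},
]

result = evaluate_retriever(toy_retrieve, eval_set)
print(result)
```

Evaluating each component with its own function like this is one way to see which stage of a pipeline limits the overall result.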

Detailed Steps

Here’s a breakdown of the steps we recommend you take to evaluate your pipeline:

- Upload files to your workspace. The pipeline you’re evaluating will run on these files. The more files you upload, the more difficult the task of finding the right answer is.

- Create a pipeline. You can start with one of the ready-made templates.

- Share your pipeline with users. At this stage, you want to get an idea of how people use your pipeline and if it works OK. You’re not aiming for high user satisfaction at this point. You just want to determine if it’s ready for an evaluation through experiments. If most of your users are happy with the results, it means it’s good to go. Otherwise, try tweaking your app until its performance is satisfactory.

  The questions users ask at this stage can also help you prepare the evaluation set for experiments.

- Run an experiment:

  - Prepare an evaluation set and upload it to your deepset Cloud workspace.

    If you're evaluating a document search pipeline, you can use a labeling project to create an evaluation dataset.

    If you're evaluating a RAG pipeline and you want to focus on the groundedness and no answer scores, it's enough if the evaluation dataset contains just queries. You can use the pipeline query history to obtain them.

  - Create an experiment run.

    Note: Currently, experiments run on all files in your workspace, but we’re working on making it possible to choose a subset of files for an experiment run.

  - Review the experiment. Depending on the results, you may want to improve your pipeline before you give it to your users to evaluate.

- Collect user feedback. It's best to have domain experts and qualified users try your pipeline and then use their feedback to improve it.

- Optimize and improve your pipeline.
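For the query-only evaluation set mentioned in the steps above, a small script can clean up queries taken from the query history before you upload them. The sketch below assumes you already have the queries as a list of strings; how you export them, and the `question` column name, are assumptions, so check the evaluation dataset format that deepset Cloud expects before uploading.

```python
import csv
import io
from typing import Iterable, List


def unique_queries(history: Iterable[str]) -> List[str]:
    """Normalize whitespace and drop empty and duplicate queries, keeping the original order."""
    seen = set()
    unique = []
    for raw in history:
        query = " ".join(raw.split())
        key = query.casefold()  # Compare case-insensitively.
        if query and key not in seen:
            seen.add(key)
            unique.append(query)
    return unique


def to_csv(queries: Iterable[str], column: str = "question") -> str:
    """Serialize the queries as a one-column CSV (the column name is an assumption)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow([column])
    writer.writerows([query] for query in queries)
    return buffer.getvalue()


# Hypothetical queries collected while users tried the pipeline prototype.
history = ["What is RAG?", "what is  rag?", "", "How do I upload files?"]

queries = unique_queries(history)
csv_text = to_csv(queries)
print(csv_text)
```

Deduplicating keeps frequently repeated questions from dominating the averaged scores, though you may prefer to keep duplicates if you want the evaluation set to reflect real usage frequency.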
