Using Hosted LLMs in Your Pipelines

Use models hosted in your Cohere, OpenAI, Amazon SageMaker, or Amazon Bedrock accounts.

Using LLMs hosted by the model provider is often the best option because the provider supplies the infrastructure the model needs to run efficiently. deepset Cloud pipelines can use LLMs hosted in:

- Cohere

- OpenAI

- Amazon SageMaker

- Amazon Bedrock

You use these models in your pipelines through PromptNode: pass the model name in PromptNode's model_name_or_path parameter. The sections below explain how to use a model from each provider.
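Every section below adds the same kind of PromptNode entry to the pipeline's components list; only model_name_or_path and model_kwargs change. If you generate pipeline files from a script, the minimal Python sketch below builds that entry as a dictionary and renders it in the YAML layout used in this guide. The helpers promptnode_component and to_yaml are hypothetical, not part of deepset Cloud or Haystack, and to_yaml never quotes strings, so it only suits plain values such as model names and numbers.

```python
def promptnode_component(model_name, model_kwargs=None):
    """Build a PromptNode entry for a pipeline's components list.

    Hypothetical helper: it only mirrors the YAML layout shown in this guide.
    """
    params = {"model_name_or_path": model_name}
    if model_kwargs:
        params["model_kwargs"] = dict(model_kwargs)
    return {"name": "PromptNode", "type": "PromptNode", "params": params}


def to_yaml(value, indent=0):
    """Render nested dicts and lists as block-style YAML.

    Minimal sketch: scalars are written with str() and never quoted.
    """
    pad = "  " * indent
    lines = []
    if isinstance(value, dict):
        for key, item in value.items():
            if isinstance(item, (dict, list)):
                lines.append(f"{pad}{key}:")
                lines.append(to_yaml(item, indent + 1))
            else:
                lines.append(f"{pad}{key}: {item}")
    elif isinstance(value, list):
        for item in value:
            if isinstance(item, dict):
                # Render the mapping one level deeper, then replace the first
                # line's extra indentation with the "- " list marker.
                block = to_yaml(item, indent + 1)
                lines.append(pad + "- " + block[len(pad) + 2:])
            else:
                lines.append(f"{pad}- {item}")
    else:
        lines.append(f"{pad}{value}")
    return "\n".join(lines)


pipeline = {"components": [promptnode_component("command-light", {"temperature": 0})]}
print(to_yaml(pipeline))
```

For configurations beyond this simple layout, a full YAML library such as PyYAML handles quoting and escaping for you.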

Using Cohere Models

-

Obtain the production key from your Cohere account.

-

Connect Cohere to deepset Cloud:

-

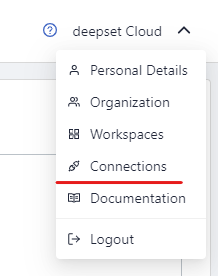

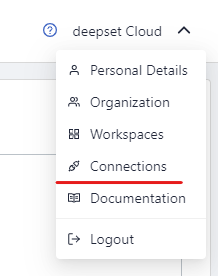

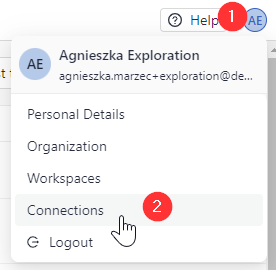

- Click your name in the top right corner and select Connections.

- Click Connect next to a model provider.

- Enter your user access token and submit it.

- Click your name in the top right corner and select Connections.

-

In your pipeline, pass the name of the Cohere model you want to use in the

model_name_or_pathparameter of PromptNode. For example, to usecommand-light, add:

components:

- name: PromptNode

type: PromptNode

params:

model_name_or_path: command-light

model_kwargs: # Specifies additional model settings

temperature: 0 # Lower temperature works best for fact-based qa

...

Using OpenAI Models

- Create a secret API key for your OpenAI account.

- Connect OpenAI to deepset Cloud:

  - Click your name in the top right corner and select Connections.

  - Click Connect next to a model provider.

  - Enter your user access token and submit it.

- In your pipeline, pass the name of the OpenAI model you want to use in the model_name_or_path parameter of PromptNode. For example, to use gpt-3.5-turbo, add:

  ```yaml
  components:
    - name: PromptNode
      type: PromptNode
      params:
        model_name_or_path: gpt-3.5-turbo
        model_kwargs: # Specifies additional model settings
          temperature: 0 # Lower temperature works best for fact-based QA
  ...
  ```

Using OpenAI Models Hosted on Microsoft Azure

To use an OpenAI model deployed through Microsoft Azure, pass your Azure OpenAI API key in the api_key parameter and the API version, base URL, and deployment name in model_kwargs:

```yaml
...
components:
  - name: PromptNode
    type: PromptNode
    params:
      model_name_or_path: gpt-3.5-turbo
      api_key: <azure_openai_api_key>
      default_prompt_template: <your_prompt_template>
      model_kwargs:
        api_version: "2022-12-01" # Quoted so YAML parsers don't read it as a date
        azure_base_url: https://<your-endpoint>.openai.azure.com
        azure_deployment_name: <your-deployment-name>
```

You can find the value for azure_base_url on the Keys and Endpoint tab of your Azure account. You choose the azure_deployment_name yourself when you deploy a model on the Model deployments tab of your Azure account. For the models and API versions available in the service, check the Azure OpenAI Service documentation.
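If you fill in the Azure values from a script, a quick check can catch a malformed base URL before it reaches the pipeline. The Python sketch below builds the three model_kwargs entries from the example above. The function azure_model_kwargs and the sample resource and deployment names are hypothetical, and the host check assumes the https://<your-endpoint>.openai.azure.com form shown in the YAML, so relax it if your endpoint looks different.

```python
from urllib.parse import urlparse


def azure_model_kwargs(base_url, deployment_name, api_version="2022-12-01"):
    """Build the model_kwargs for an OpenAI model deployed through Azure.

    Hypothetical helper. The host check assumes the
    https://<your-endpoint>.openai.azure.com form used in this guide.
    """
    parsed = urlparse(base_url)
    host = parsed.hostname or ""
    if parsed.scheme != "https" or not host.endswith(".openai.azure.com"):
        raise ValueError(f"unexpected Azure base URL: {base_url!r}")
    if not deployment_name.strip():
        raise ValueError("azure_deployment_name must not be empty")
    return {
        "api_version": api_version,
        # Strip a trailing slash so the value matches the form shown above.
        "azure_base_url": base_url.rstrip("/"),
        "azure_deployment_name": deployment_name,
    }


# "my-resource" and "my-deployment" are placeholder names.
print(azure_model_kwargs("https://my-resource.openai.azure.com/", "my-deployment"))
```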

Using Amazon SageMaker Models

You can use LLMs hosted on Amazon SageMaker in PromptNode. Contact your deepset Cloud representative to set up the model for you. Once the model is ready, you get its name, which you then pass in the model_name_or_path parameter of PromptNode, like this:

```yaml
...
components:
  - name: PromptNode
    type: PromptNode
    params:
      model_name_or_path: <the_model_name_you_got_from_deepset_Cloud_rep>
      model_kwargs:
        temperature: 0.6 # These are additional model parameters that you can configure
...
```

Using Models Hosted on Amazon Bedrock

You can use text generation models hosted in deepset's Bedrock account or in your private Bedrock account.

Using Models Hosted in deepset's Bedrock Account

- Check the ID of the model you want to use. You can find model IDs in the Amazon Bedrock documentation.

- Pass the model ID prefixed with deepset-cloud- in the model_name_or_path parameter of PromptNode, like this:

  ```yaml
  components:
    - name: PromptNode
      type: PromptNode
      params:
        model_name_or_path: deepset-cloud-anthropic.claude-v2 # This PromptNode uses the Claude 2 model hosted on Bedrock
        model_kwargs:
          temperature: 0.6
  ...
  ```
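The naming rule for the two kinds of Bedrock accounts comes down to a string prefix: IDs of models in deepset's account get deepset-cloud- in front, while IDs of models in your private account, described in the next section, are passed unchanged. A minimal Python sketch of that rule follows; the function name bedrock_model_name is hypothetical.

```python
DEEPSET_PREFIX = "deepset-cloud-"


def bedrock_model_name(model_id, in_deepset_account):
    """Return the value for PromptNode's model_name_or_path parameter.

    Hypothetical helper that applies the naming rule described in this guide.
    """
    model_id = model_id.strip()
    if not model_id:
        raise ValueError("model ID must not be empty")
    if not in_deepset_account:
        # Models in your private Bedrock account use the plain model ID.
        return model_id
    if model_id.startswith(DEEPSET_PREFIX):
        # Already prefixed; don't add the prefix twice.
        return model_id
    return DEEPSET_PREFIX + model_id


print(bedrock_model_name("anthropic.claude-v2", in_deepset_account=True))
print(bedrock_model_name("anthropic.claude-v2", in_deepset_account=False))
```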

Using Models Hosted in Your Private Bedrock Account

- In AWS Identity and Access Management (IAM), create a dedicated user to connect to deepset Cloud. When creating the user, make sure you save the access key ID and secret access key. For detailed instructions, see the AWS IAM User Guide.

- Give the user a permission policy that allows the following actions:

  - bedrock:ListFoundationModels
  - bedrock:GetFoundationModel
  - bedrock:InvokeModel
  - bedrock:InvokeModelWithResponseStream
  - bedrock:GetFoundationModelAvailability
  - bedrock:GetCustomModel
  - bedrock:ListCustomModels

- Go back to deepset Cloud and connect Bedrock to it:

  - Click your initials in the top right corner and select Connections.

  - Click Connect next to a model provider.

  - Enter your user access token and submit it.

  You're now ready to use the model in your pipeline.

- Pass the model ID in the model_name_or_path parameter of PromptNode, like this:

  ```yaml
  components:
    - name: PromptNode
      type: PromptNode
      params:
        model_name_or_path: anthropic.claude-v2 # This PromptNode uses the Claude 2 model hosted on Bedrock
        model_kwargs:
          temperature: 0.6
  ...
  ```
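The Bedrock permissions from the second step can be attached to the IAM user as a JSON policy document. The Python sketch below builds one with the standard json module so you can paste the printed output into the JSON view of the IAM policy editor. The function name bedrock_policy is hypothetical, and "Resource": "*" is an assumption that grants the actions on all resources; narrow it if your security rules require.

```python
import json

# The Bedrock actions listed in the permissions step above.
BEDROCK_ACTIONS = [
    "bedrock:ListFoundationModels",
    "bedrock:GetFoundationModel",
    "bedrock:InvokeModel",
    "bedrock:InvokeModelWithResponseStream",
    "bedrock:GetFoundationModelAvailability",
    "bedrock:GetCustomModel",
    "bedrock:ListCustomModels",
]


def bedrock_policy(resource="*"):
    """Build an IAM identity-based policy allowing the Bedrock actions.

    Hypothetical helper; the default resource "*" is an assumption.
    """
    return {
        "Version": "2012-10-17",  # Current IAM policy language version
        "Statement": [
            {
                "Effect": "Allow",
                "Action": list(BEDROCK_ACTIONS),
                "Resource": resource,
            }
        ],
    }


print(json.dumps(bedrock_policy(), indent=2))
```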
