Improving Your Question Answering Pipeline

Your experiments may fail or fall short of your expectations, but that's nothing that can't be fixed. This page helps you figure out how to tweak your question answering pipeline to get the results you want.

Here, we focus on analyzing extractive QA pipelines. If your pipeline doesn't live up to your expectations, consider optimizing the PreProcessor, Retriever, or Reader nodes.

Optimizing the PreProcessor

The PreProcessor splits your documents into smaller ones. To improve its performance, you can:

- Change the value of the split_length parameter. It's best to set it to a value between 200 and 500 words, because an embedding retriever can only process a limited amount of text. The exact limit depends on the model your retriever uses (it's measured in tokens, which roughly correspond to word pieces), and anything past that limit is cut off and ignored.
- Choose the correct language for your documents. Setting the correct language helps NLTK tokenizers properly detect sentence boundaries. By default, the PreProcessor respects sentence boundaries when splitting documents, which means it won't split documents midway through a sentence.
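To see how split_length and split_overlap interact when splitting by word, here's a minimal Python sketch of a sliding-window split. It's a simplified model, not the PreProcessor's implementation: it ignores split_respect_sentence_boundary and document cleaning, and the function name split_by_word is hypothetical. It also shows why overlap produces repeated text: the overlapping words end up in two neighboring documents.

```python
def split_by_word(text: str, split_length: int, split_overlap: int) -> list[str]:
    """Split text into chunks of at most split_length words.

    Consecutive chunks share split_overlap words (a sliding window).
    Simplified sketch: sentence boundaries are NOT respected here.
    """
    if not 0 <= split_overlap < split_length:
        raise ValueError("split_overlap must be >= 0 and smaller than split_length")
    words = text.split()
    step = split_length - split_overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + split_length]))
        if start + split_length >= len(words):
            break  # this window already reached the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(10))
print(split_by_word(text, split_length=4, split_overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Note how w3 and w6 each appear in two chunks. With split_length 250 and split_overlap 10, every pair of neighboring documents shares 10 words.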

Optimizing the Retriever

When evaluating the retriever, concentrate on the recall metrics, such as integrated_recall_single_hit and integrated_recall_multi_hit, and aim to get them as close to 1.0 as possible. A good starting point is BM25Retriever, the standard keyword-based retriever. Here's what you can try:

- Increase the number of documents the retriever returns using the top_k parameter. This increases the chances that the retrieved documents include one that contains the right answer. It also slows down the search, though, because the reader must then check more documents.
- Replace the BM25Retriever with an EmbeddingRetriever. Have a look at Models for Information Retrieval and check if any of them outperforms the BM25Retriever.
- Combine the output of BM25Retriever and EmbeddingRetriever using the JoinDocuments node. If you use JoinDocuments, specify the number of documents it should return. By default, it returns all unique documents from the combined outputs of both retrievers. Keep in mind that the more documents the reader has to go through, the slower the search.
  - To control the number of documents JoinDocuments returns, use its top_k_join parameter.
  - You also need to choose the join_mode, which controls how the two lists of results are combined. We recommend reciprocal_rank_fusion. Other options you can try are concatenate and merge. The join_mode parameter only affects the reader results if you set top_k_join because, by default, JoinDocuments passes all documents to the reader.

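Reciprocal rank fusion combines the lists by rank rather than by raw score, which helps because BM25 and embedding scores aren't on comparable scales. Here's a minimal Python sketch of the technique, assuming the textbook formula where each document scores the sum of 1 / (k + rank) over the lists it appears in, with k = 60 as in the original RRF formulation. JoinDocuments may use a different constant or weighting, and the function name is hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(result_lists, top_k_join=None, k=60):
    """Fuse ranked lists of document IDs with reciprocal rank fusion.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. Documents ranked high by several retrievers win.
    """
    scores = defaultdict(float)
    for ranked_ids in result_lists:
        for rank, doc_id in enumerate(ranked_ids, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; sorted() is stable, so ties keep first-seen order
    fused = sorted(scores, key=scores.get, reverse=True)
    # Without top_k_join, all unique documents are returned (only reordered)
    return fused if top_k_join is None else fused[:top_k_join]


bm25_results = ["a", "b", "c"]       # hypothetical IDs ranked by BM25Retriever
embedding_results = ["c", "a", "d"]  # hypothetical IDs ranked by EmbeddingRetriever
print(reciprocal_rank_fusion([bm25_results, embedding_results]))                # ['a', 'c', 'b', 'd']
print(reciprocal_rank_fusion([bm25_results, embedding_results], top_k_join=2))  # ['a', 'c']
```

Documents found by both retrievers (a and c) rise to the top. Without top_k_join, all four documents still reach the reader, which is why join_mode only changes the reader results once you set top_k_join.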

A YAML template for a pipeline with combined retrievers

components:
  - name: DocumentStore
    type: DeepsetCloudDocumentStore # The only supported document store in deepset Cloud
  - name: BM25Retriever # The keyword-based retriever
    type: BM25Retriever
    params:
      document_store: DocumentStore
      top_k: 20 # The number of results to return
  - name: EmbeddingRetriever # The dense retriever
    type: EmbeddingRetriever
    params:
      document_store: DocumentStore
      embedding_model: sentence-transformers/multi-qa-mpnet-base-dot-v1 # Model optimized for semantic search
      model_format: sentence_transformers
      top_k: 20 # The number of results to return
  - name: JoinResults # Joins the results from both retrievers
    type: JoinDocuments
    params:
      join_mode: reciprocal_rank_fusion # Applies rank-based scoring to the results
      top_k_join: 20 # Returns only the top k joined documents, ranked by the scoring join_mode defines
  - name: FileTypeClassifier # Routes files to the appropriate converters based on their extension; useful if you have different file types
    type: FileTypeClassifier
  - name: TextConverter # Converts text files into documents
    type: TextConverter
  - name: PDFConverter # Converts PDFs into documents
    type: PDFToTextConverter
  - name: Preprocessor # Splits documents into smaller ones and cleans them up
    type: PreProcessor
    params:
      # With an embedding-based retriever, it's good to split your documents into smaller ones
      split_by: word # The unit by which you want to split the documents
      split_length: 250 # The maximum number of words in a document
      split_overlap: 10 # Enables the sliding window approach
      split_respect_sentence_boundary: True # Retains complete sentences in split documents
      language: en
  - name: Reader # The component that extracts answers from the 20 documents JoinResults returns
    type: FARMReader # Transformer-based reader, specializes in extractive QA
    params:
      model_name_or_path: deepset/roberta-base-squad2-distilled # An optimized variant of BERT, a strong all-round model
      context_window_size: 700 # The size of the window around the answer span
      batch_size: 50

# Here you define how the nodes are organized in the pipelines
# For each node, specify its input
pipelines:
  - name: query
    nodes:
      - name: BM25Retriever
        inputs: [Query]
      - name: EmbeddingRetriever
        inputs: [Query]
      - name: JoinResults
        inputs: [BM25Retriever, EmbeddingRetriever]
      - name: Reader
        inputs: [JoinResults]
  - name: indexing
    nodes:
      # Depending on the file type, we use a Text or PDF converter
      - name: FileTypeClassifier
        inputs: [File]
      - name: TextConverter
        inputs: [FileTypeClassifier.output_1] # Ensures this converter gets TXT files
      - name: PDFConverter
        inputs: [FileTypeClassifier.output_2] # Ensures this converter gets PDF files
      - name: Preprocessor
        inputs: [TextConverter, PDFConverter]
      - name: EmbeddingRetriever
        inputs: [Preprocessor]
      - name: DocumentStore
        inputs: [EmbeddingRetriever]

Optimizing the Reader

With the reader, monitor the exact_match and f1 metrics. They usually move up and down together, and f1 is typically higher than exact_match because it gives partial credit for answers that only partly overlap the correct one.
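The gap between the two metrics comes from how they're computed. The following Python sketch follows the definitions used by the SQuAD evaluation script: normalize both answers, then compare them as whole strings for exact_match or as bags of tokens for f1. deepset Cloud's own calculation may differ in details, such as normalization or handling several correct answers per question.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)


def normalize(text: str) -> str:
    """SQuAD-style normalization: lowercase, drop punctuation and articles, collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, ground_truth: str) -> float:
    """1.0 only if the normalized strings are identical."""
    return float(normalize(prediction) == normalize(ground_truth))


def f1(prediction: str, ground_truth: str) -> float:
    """Token-overlap F1: partial credit for partially correct answers."""
    pred_tokens = normalize(prediction).split()
    gold_tokens = normalize(ground_truth).split()
    if not pred_tokens or not gold_tokens:
        # Empty answers (for example, "no answer") only score if both are empty
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower.", "Eiffel Tower"), f1("The Eiffel Tower.", "Eiffel Tower"))  # 1.0 1.0
print(exact_match("the Eiffel Tower in Paris", "Eiffel Tower"))  # 0.0
print(round(f1("the Eiffel Tower in Paris", "Eiffel Tower"), 3))  # 0.667
```

An answer that contains the correct span plus extra words gets no exact_match credit but a partial f1 score, so for any single prediction f1 is never lower than exact_match.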

Finding the Right Values

Metrics are a good indication of how your pipeline performs, but it's also important to look at the predictions themselves and let other users test your pipeline to better understand its weaknesses. To get an idea of what metric values you can achieve, check the metrics of state-of-the-art models on Hugging Face, making sure they're models trained on question answering datasets. Ideally, find a dataset that closely mirrors the one you're evaluating in deepset Cloud.

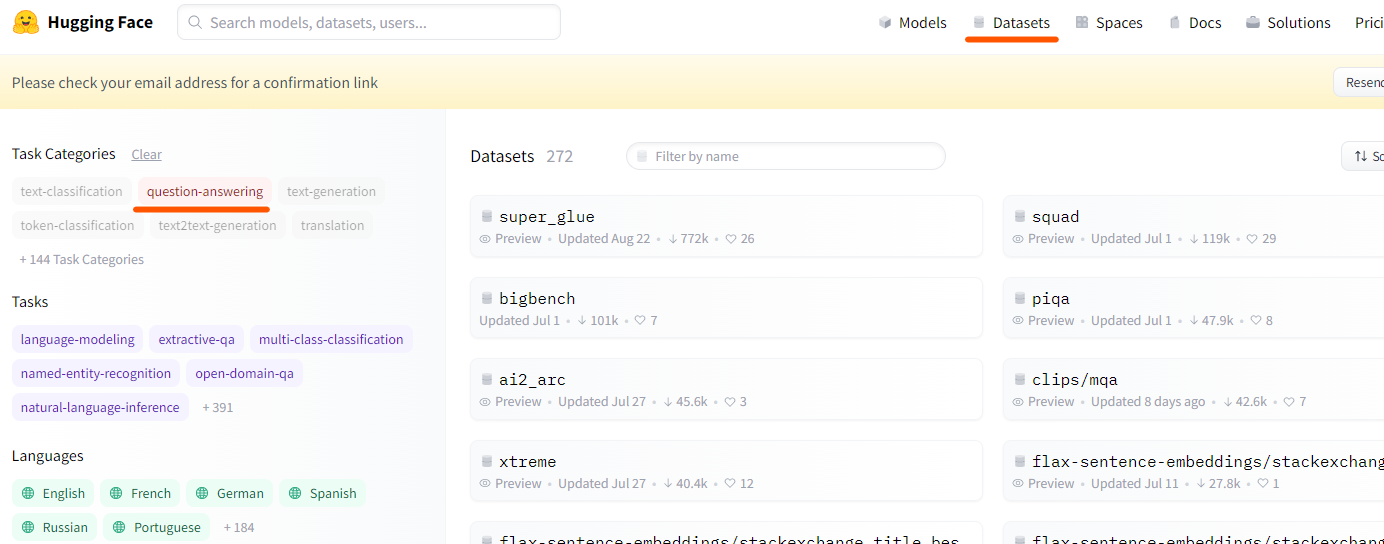

For example, to find models trained on a particular dataset in Hugging Face, go to Datasets and choose question-answering under Task Categories.

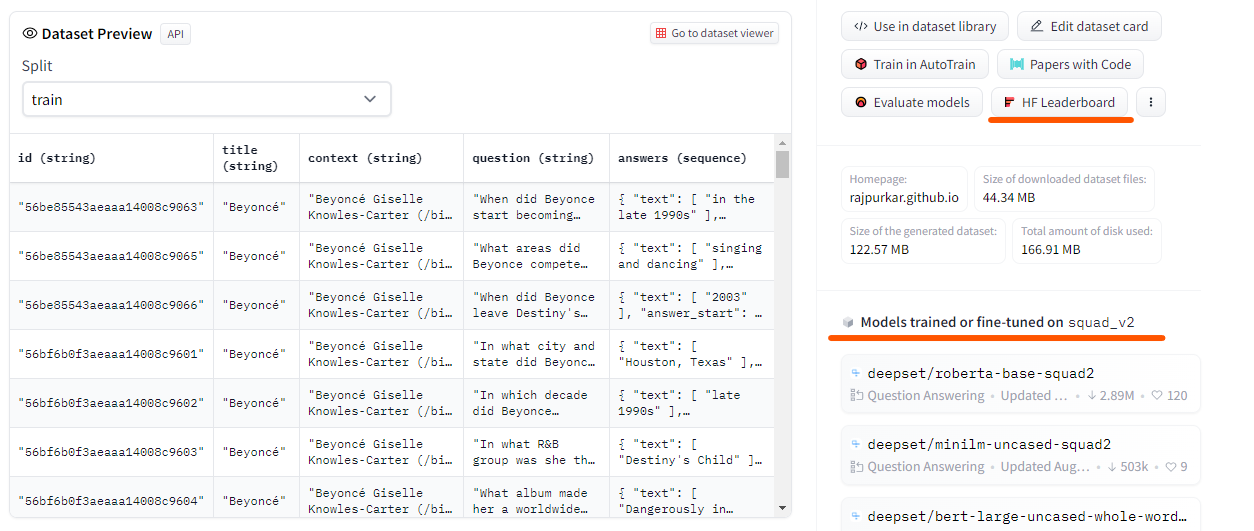

When you click a particular dataset, you can see all the models trained on it and the model leaderboard which lets you compare them.

Improving Isolated Metrics

You can also improve your reader's isolated metrics by experimenting with different reader models. For more guidance, have a look at Models for Question Answering.

When choosing a model, remember that larger models usually perform better but are slower than smaller ones. Decide on the balance between reader accuracy and search latency that works for you.

Fine-Tuning the Reader Model

If none of the available models performs well enough, you can always fine-tune a model. To do that, you need to collect and annotate data. In our experience, feedback collected in deepset Cloud doesn't boost reader performance as much as annotated data does.

Once you have all the data, fine-tune the best-performing reader models you tried in the previous steps with the data you collected. For more information, see Tutorial: Fine-Tuning the Reader Model in SDK.
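Annotated data for training a Haystack reader is typically stored in the SQuAD format, where each answer is given as its text plus a character offset (answer_start) into the passage. Wrong offsets are a common mistake in hand-made annotations, so here's a minimal Python sketch that builds one SQuAD 2.0-style record and checks its offsets. The field names follow SQuAD 2.0; the helper names and example text are hypothetical, and the fine-tuning tutorial remains the reference for what the training step expects.

```python
import json


def make_squad_record(title, context, qa_pairs):
    """Build a SQuAD 2.0-style article from (question_id, question, answer_text) tuples.

    answer_text=None marks an unanswerable question. The offset is taken from
    the FIRST occurrence of answer_text in context (an assumption of this sketch).
    """
    qas = []
    for qid, question, answer_text in qa_pairs:
        if answer_text is None:
            qas.append({"id": qid, "question": question, "answers": [], "is_impossible": True})
            continue
        start = context.find(answer_text)
        if start == -1:
            raise ValueError(f"Answer {answer_text!r} not found in context for {qid}")
        qas.append({
            "id": qid,
            "question": question,
            "answers": [{"text": answer_text, "answer_start": start}],
            "is_impossible": False,
        })
    return {"title": title, "paragraphs": [{"context": context, "qas": qas}]}


def check_offsets(dataset):
    """Return the IDs of questions whose answer_start doesn't point at the answer text."""
    bad = []
    for article in dataset["data"]:
        for paragraph in article["paragraphs"]:
            context = paragraph["context"]
            for qa in paragraph["qas"]:
                for answer in qa["answers"]:
                    start = answer["answer_start"]
                    if context[start:start + len(answer["text"])] != answer["text"]:
                        bad.append(qa["id"])
    return bad


context = "The warranty covers parts for two years. Labor is covered for one year."
dataset = {"version": "v2.0", "data": [make_squad_record("Warranty", context, [
    ("q1", "How long are parts covered?", "two years"),
    ("q2", "Is accidental damage covered?", None),
])]}
print(check_offsets(dataset))  # []
print(json.dumps(dataset, indent=2))
```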

Adjusting top_k Parameters

There are several top_k parameters you can change to improve the reader performance:

- top_k_per_sample: defines how many answers you want to extract from each text passage. The default is 1. (A candidate document is usually split into multiple smaller passages, also known as samples.)
- top_k_per_candidate: defines how many answers you want to extract from each candidate document the retriever fetches. The default is 3. Note that this is not the number of final answers; you control that with top_k.
- top_k: specifies the maximum number of final answers to return. The default is 10.

Note

If you increase the value of top_k_per_sample, increase top_k_per_candidate by a similar amount. Keep in mind, though, that this can lead to multiple answers from the same document, while you usually want the predictions to come from a variety of documents.
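The following Python sketch is a simplified model of how the three limits narrow down the answers: first per passage, then per candidate document, then overall. It's not FARMReader's implementation (the real reader also handles no-answer predictions and its own scoring), and the Candidate class and select_answers function are hypothetical names used for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    doc_id: str      # the candidate document the answer comes from
    sample_id: int   # the passage (sample) within that document
    text: str
    score: float


def select_answers(candidates, top_k_per_sample=1, top_k_per_candidate=3, top_k=10):
    """Simplified model of how the three top_k limits narrow down the answers."""
    def best(items, n):
        return sorted(items, key=lambda c: c.score, reverse=True)[:n]

    # 1. Keep the best top_k_per_sample answers from each passage
    by_sample = {}
    for c in candidates:
        by_sample.setdefault((c.doc_id, c.sample_id), []).append(c)
    per_sample = [c for group in by_sample.values() for c in best(group, top_k_per_sample)]

    # 2. Keep the best top_k_per_candidate answers from each candidate document
    by_doc = {}
    for c in per_sample:
        by_doc.setdefault(c.doc_id, []).append(c)
    per_doc = [c for group in by_doc.values() for c in best(group, top_k_per_candidate)]

    # 3. Keep the best top_k answers overall
    return best(per_doc, top_k)


candidates = [  # hypothetical reader outputs
    Candidate("A", 0, "a1", 0.9), Candidate("A", 0, "a2", 0.8),
    Candidate("A", 1, "a3", 0.7), Candidate("A", 1, "a4", 0.6),
    Candidate("B", 0, "b1", 0.5),
]
print([c.text for c in select_answers(candidates)])                             # ['a1', 'a3', 'b1']
print([c.text for c in select_answers(candidates, top_k_per_sample=2)])         # ['a1', 'a2', 'a3', 'b1']
print([c.text for c in select_answers(candidates, top_k_per_sample=2, top_k=3)])  # ['a1', 'a2', 'a3']
```

In the last call, raising top_k_per_sample lets document A fill every slot, and document B drops out of the predictions, which is the effect the note describes.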

Reducing Duplicate Answers

If your search returns duplicate answers coming from overlapping documents, try reducing the split_overlap value of the PreProcessor node to a value around or below 10.

Choosing the Best Extractive QA Pipeline

To build a good QA pipeline, choose the retriever with the highest recall metrics and the reader model with the highest integrated metrics. Make sure you calculate the reader model's integrated metrics using the best retriever.
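Comparing retrievers by recall means computing it per query and averaging over your evaluation set. Here's a minimal Python sketch, assuming the common definitions of the two recall metrics from Optimizing the Retriever: single-hit recall asks whether the retriever found at least one relevant document, and multi-hit recall asks what fraction of the relevant documents it found. deepset Cloud's calculation may differ in details, such as how retrieved documents are matched to relevant ones, and the function names are hypothetical.

```python
def recall_single_hit(retrieved_ids, relevant_ids):
    """1.0 if at least one relevant document was retrieved, else 0.0."""
    return 1.0 if set(retrieved_ids) & set(relevant_ids) else 0.0


def recall_multi_hit(retrieved_ids, relevant_ids):
    """Fraction of the relevant documents that were retrieved."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0  # assumption: queries without relevant documents count as 0
    return len(relevant & set(retrieved_ids)) / len(relevant)


def mean(values):
    values = list(values)
    return sum(values) / len(values) if values else 0.0


# Hypothetical evaluation data: per query, the IDs the retriever returned and the relevant IDs
queries = [
    {"retrieved": ["d1", "d2", "d3"], "relevant": ["d2", "d4"]},
    {"retrieved": ["d5", "d6"], "relevant": ["d7"]},
]
single = mean(recall_single_hit(q["retrieved"], q["relevant"]) for q in queries)
multi = mean(recall_multi_hit(q["retrieved"], q["relevant"]) for q in queries)
print(single, multi)  # 0.5 0.25
```

Run the same queries through each candidate retriever and keep the one with the highest averages; single-hit recall matters most for extractive QA, since the reader only needs one document containing the answer.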
