Use Metadata in Your Search System

Attach metadata to the files you upload to deepset Cloud and take advantage of them in your search system. Learn about different ways you can use metadata.

Applications of Metadata

Metadata in deepset Cloud can serve as filters that narrow down the selection of documents for generating the final answer or to customize retrieval and ranking.

Let's say we have a document with the following metadata:

{

"title": "Mathematicians prove Pólya's conjecture for the eigenvalues of a disk",

"subtitle": "A 70-year old math problem proven",

"authors": ["Alice Wonderland"],

"published_date": "2024-03-01",

"category": "news"

}

We'll refer to these metadata throughout this guide to illustrate different applications.

To learn how to add metadata, see Add Metadata to Your Files.

Metadata as Filters

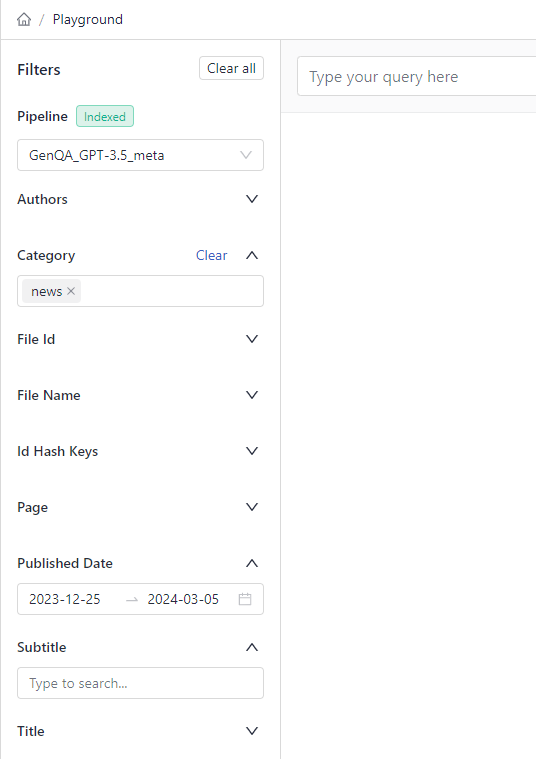

Metadata acts as filters that narrow down the scope of your search. All metadata from your files are shown as filters on the Playground:

But you can also use metadata to add a preset filter to your pipeline or when searching through REST API.

Filtering with a Preset Filter

You can configure your pipeline to use only documents with specific metadata values. Depending on whether you use BM25Retriever or CNStaticFilterEmbeddingRetriever, this involves custom OpenSearch queries or direct filter parameters.

With BM25Retriever, use the custom_query parameter to pass your OpenSearch query. For example, to retrieve only documents of the news category ("category": "news" in metadata), you could pass this query:

nameBM25Retriever

typeBM25Retriever

params

document_storeDocumentStore

top_k10

custom_query

{

"query": {

"bool": {

"must": [

{

"multi_match": {

"query": $query,

"type": "most_fields",

"fields": ["content", "title", "authors"],

"operator": "OR"

}

}

],

"filter": {

"term": {

"category": "news"

}

}

}

}

}

...

With the CNStaticFilterEmbeddingRetriever, specify your filters directly in the filters parameter, like this:

components

nameEmbeddingRetriever

typeCNStaticFilterEmbeddingRetriever

params

document_storeDocumentStore

embedding_model...

model_formatsentence_transformers

top_k20

filters"category""news"

You can also assign weight to certain metadata values to prioritize them. To learn more about using OpenSearch queries in your pipelines, see Boosting Retrieval with OpenSearch Queries.

Filtering at Query Time with REST API

When making a search request through API, add the filter to the payload when making the request like this:

curl --request POST \

--url https://api.cloud.deepset.ai/api/v1/workspaces/workspace_name/pipelines/pipeline_name/search \

--header 'accept: application/json' \

--header 'content-type: application/json' \

--data '

{

"debug": false,

"filters": {

"category": "news"

}

}

'

See also Filtering Logic.

Metadata to Customize Retrieval

By default, retrievers search only through the content of the documents in the Document Store, but with some retrievers, you can indicate the metadata fields that the retriever should check in addition to the contents.

Metadata with BM25Retriever

You can indicate the metadata fields you want the keyword-based BM25Retriever to search. Pass the names of these fields in the search_fields parameter of the Document Store used with the retriever. For example, to get an answer to a question like: "What was the latest article Alice wrote?", you may want the retriever to search through the title and the author of the documents, not only their content. Here's how you could configure BM25Retriever to do this:

components

nameDocumentStore

typeDeepsetCloudDocumentStore

params

search_fields"title" "authors"

nameRetriever

typeBM25Retriever

params

document_storeDocumentStore

Metadata with EmbeddingRetriever

EmbeddingRetriever can vectorize not only the document text but also the metadata you indicate in the embed_meta_fields parameter. In this example, EmbeddingRetriever embeds the title and subtitle of documents:

components

nameEmbeddingRetriever

typeEmbeddingRetriever

params

document_storeDocumentStore

embedding_model...

model_formatsentence_transformers

top_k20

embed_meta_fields"title" "subtitle"

This means that the title and subtitle would be prepended to the document content.

Metadata for Ranking

When using SentenceTransformersRanker, CohereRanker, and RecentnessRanker, you can assign a higher rank to documents with certain metadata values. To learn more about rankers, see Ranker.

Both SentenceTransformersRanker and CohereRanker take the embed_meta_fields parameter, where you can pass the metadata fields you want to prioritize. Like with EmbeddingRetriever, the values of these fields are then prepended to the document content and embedded there. This means that the content of the metadata fields you indicate are taken into consideration during the ranking. In this example, the Ranker prioritizes documents with fields category and authors:

components

nameReranker

typeSentenceTransformersRanker

params

model_name_or_pathsvalabs/cross-electra-ms-marco-german-uncased

top_k15

embed_meta_fieldscategory authors

...

RecentnessRanker is specifically designed to prioritize the latest documents. All you need to do is pass the name of the metadata field containing the date in the date_meta_field parameter, like this:

components

nameRecentnessRanker

typeRecentnessRanker

params

date_meta_fieldpublished_date

...

To learn more, see Improving Your Document Search Pipeline.

Metadata in Prompts

You can pass documents' metadata in prompts for additional context and then instruct the LLM to use them. To pass the metadata, use the $ (dollar sign) as a prefix and then pass the name of the metadata field in a function. This prompt, apart from the documents' contents, contains their titles and (the variables are replaced with real values at runtime). Additionally, it instructs the LLM to prefer the most recent documents:

You are a media expert.

You answer questions truthfully based on provided documents.

For each document check whether it is related to the question.

Only use documents that are related to the question to answer it.

Ignore documents that are not related to the question.

If the answer exists in several documents, summarize them.

Only answer based on the documents provided. Don't make things up.

Always use references in the form [NUMBER OF DOCUMENT] when using information from a document. e.g. [3], for Document[3].

The reference must only refer to the number that comes in square brackets after passage.

Otherwise, do not use brackets in your answer and reference ONLY the number of the passage without mentioning the word passage.

If the documents can't answer the question or you are unsure say: 'The answer can't be found in the text'.

For contradictory information, prefer recent documents.

These are the documents:

{join(documents, delimiter=new_line, pattern=new_line+'Document[$idx]:'+new_line+'Title: $title'+new_line+'Subtitle: $subtitle'+new_line+'Published Date: $published_date'+new_line+'$content')}

Question: {query}

Answer:

For more information, see Functions in prompts.

Example

Here's an example pipeline that uses metadata during the retrieval, ranking, and answer generation stages. During retrieval, it focuses on documents from the "news" category and embeds the title and subtitle into the document's text. Then, when ranking the documents, it takes the document's title and subtitle into consideration. And finally, when generating the answer, it passes the document's published date in the prompt, instructing the LLM to prefer the most recent documents.

components

nameDocumentStore

typeDeepsetCloudDocumentStore

params

embedding_dim768

similaritycosine

search_fields"title" "authors" # We also enable (sparse) search over these fields

nameBM25Retriever # The keyword-based retriever

typeBM25Retriever

params

document_storeDocumentStore

top_k10 # The number of results to return

# Custom Query to only return documents from the `news` category

custom_query

{

"query": {

"bool": {

"must": [

{

"multi_match": {

"query": $query,

"type": "most_fields",

"fields": ["content", "title", "authors"],

"operator": "OR"

}

}

],

"filter": {

"term": {

"category": "news"

}

}

}

}

}

nameEmbeddingRetriever

typeCNStaticFilterEmbeddingRetriever

params

document_storeDocumentStore

embedding_modelintfloat/e5-base-v2 # Model optimized for semantic search. It has been trained on 215M (question, answer) pairs from diverse sources.

model_formatsentence_transformers

top_k10

embed_meta_fields"title" "subtitle" # We also add these fields before embedding

filters"category""news" # Also only return documents from the `news` category

nameJoinResults # Joins the results from both retrievers

typeJoinDocuments

params

join_modeconcatenate # Combines documents from multiple retrievers

nameReranker # Uses a cross-encoder model to rerank the documents returned by the two retrievers

typeSentenceTransformersRanker

params

model_name_or_pathintfloat/simlm-msmarco-reranker # Fast model optimized for reranking

top_k5 # The number of results to return

batch_size20 # Try to keep this number equal or larger to the sum of the top_k of the two retrievers so all docs are processed at once

embed_meta_fields"title" "subtitle" # We also add these fields before embedding

model_kwargs# Additional keyword arguments for the model

torch_dtypetorch.float16

nameRecentnessRanker

typeRecentnessRanker

params

date_meta_fieldpublished_date # Rerank based on recency as determined by this metadata field

nameqa_template

typePromptTemplate

params

output_parser

typeAnswerParser

# We also refer to the metadata fields $title, $subtitle, $published_date in the prompt.

prompt

You are a media expert.

{new_line}You answer questions truthfully based on provided documents.

{new_line}For each document check whether it is related to the question.

{new_line}Only use documents that are related to the question to answer it.

{new_line}Ignore documents that are not related to the question.

{new_line}If the answer exists in several documents, summarize them.

{new_line}Only answer based on the documents provided. Don't make things up.

{new_line}Always use references in the form [NUMBER OF DOCUMENT] when using information from a document. e.g. [3], for Document[3].

{new_line}The reference must only refer to the number that comes in square brackets after passage.

{new_line}Otherwise, do not use brackets in your answer and reference ONLY the number of the passage without mentioning the word passage.

{new_line}If the documents can't answer the question or you are unsure say: 'The answer can't be found in the text'.

{new_line}For contradictory information, prefer recent documents.

{new_line}These are the documents:

{join(documents, delimiter=new_line, pattern=new_line+'Document[$idx]:'+new_line+'Title: $title'+new_line+'Subtitle: $subtitle'+new_line+'Published Date: $published_date'+new_line+'$content')}

{new_line}Question: {query}

{new_line}Answer:

namePromptNode

typePromptNode

params

default_prompt_templateqa_template

max_length400 # The maximum number of tokens the generated answer can have

model_kwargs# Specifies additional model settings

temperature0 # Lower temperature works best for fact-based qa

model_name_or_pathgpt-3.5-turbo

nameFileTypeClassifier # Routes files based on their extension to appropriate converters, by default txt, pdf, md, docx, html

typeFileTypeClassifier

nameTextConverter # Converts files into documents

typeTextConverter

namePDFConverter # Converts PDFs into documents

typePDFToTextConverter

namePreprocessor # Splits documents into smaller ones and cleans them up

typePreProcessor

params

# With a vector-based retriever, it's good to split your documents into smaller ones

split_byword # The unit by which you want to split the documents

split_length250 # The max number of words in a document

split_overlap20 # Enables the sliding window approach

languageen

split_respect_sentence_boundaryTrue # Retains complete sentences in split documents

# Here you define how the nodes are organized in the pipelines

# For each node, specify its input

pipelines

namequery

nodes

nameBM25Retriever

inputsQuery

nameEmbeddingRetriever

inputsQuery

nameJoinResults

inputsBM25Retriever EmbeddingRetriever

nameReranker

inputsJoinResults

nameRecentnessRanker

inputsReranker

namePromptNode

inputsRecentnessRanker

nameindexing

nodes

# Depending on the file type, we use a Text or PDF converter

nameFileTypeClassifier

inputsFile

nameTextConverter

inputsFileTypeClassifier.output_1 # Ensures that this converter receives txt files

namePDFConverter

inputsFileTypeClassifier.output_2 # Ensures that this converter receives PDFs

namePreprocessor

inputsTextConverter PDFConverter

nameEmbeddingRetriever

inputsPreprocessor

nameDocumentStore

inputsEmbeddingRetriever

Updated 7 months ago