Use Snowflake Database

Query data in your Snowflake database using deepset Cloud pipelines.

About this Task

deepset Cloud provides SnowflakeExecutor, which is a pipeline node that connects to your Snowflake database. It takes a SQL query as input and returns a database table that matches the query.

To make things easier, we created two text-to-SQL pipeline templates you can use to query your Snowflake databases using natural language questions. The templates use an LLM to convert your query into SQL and then send it to SnowflakeExecutor, which queries the Snowflake database. In addition to a database table, they also return a proper textual answer generated by an LLM.

For large databases, we created a template with an additional retrieval step that first pre-selects tables matching the query.

Prerequisites

You need the following information about your Snowflake database:

- Snowflake account identifier

- Snowflake user login

- Warehouse name

- Schema name

- Database name

To use our text-to-SQL templates, you need:

- OpenAI API key (the templates use the GPT-4 model)

- To use the template with an additional retrieval step, prepare database table descriptions:

- Prepare a text file with a description for each table in the database. The description can be one sentence describing the table's contents.

- To each description, add the following metadata:

{"table": "table_name", "table_columns": "column_name - data_type"}.

For example, for a table calledCOUNTRY, you would create a description file calledCOUNTRY.TXTwith the following metadata:{"table": "COUNTRY", "table_columns": "ID - INTEGER\\nNAME - VARCHAR\\nREGION - VARCHAR"}. - Upload the descriptions with their metadata to the deepset Cloud workspace where you're creating the pipeline.

Query Your Snowflake Database

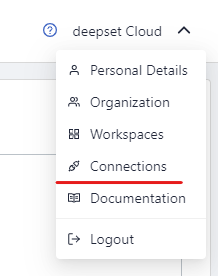

- First, connect deepset Cloud to Snowflake through the Connections page:

- Click your name in the top right corner and select Connections.

- Click Connect next to a model provider.

- Enter your user access token and submit it.

- Then, add SnowflakeExecutor to your query pipeline or use one of the ready-made text-to-SQL templates.

Usage Examples

Each tab contains an example of how to query your Snowflake database. The first tab is an example of using an LLM to convert a natural language query to SQL, and then using another LLM to generate a textual answer in addition to the database table that SnowflakeExecutor returns.

The second tab contains an additional retrieval step. To use this example, you must prepare descriptions of your database tables, as described in Prerequisites.

components:

- name: DocumentStore

type: DeepsetCloudDocumentStore # The only supported document store in deepset Cloud

- name: TextConverter # Converts files into documents

type: TextConverter

- name: sql_template

type: PromptTemplate

params:

prompt: |-

You are a SQL expert working with Snowflake.

Your task is to create a Snowflake SQL query for the given question.

Refrain from explaining your answer. Your answer must be the SQL query in plain text format without using Markdown.

Here are the table schemas:

<SCHEMAS>

User's question: {query}

Generated SQL query:

- name: PromptNodeSQLGenerator

type: PromptNode

params:

default_prompt_template: sql_template

max_length: 600 # The maximum number of tokens the generated SQL can have

model_kwargs:

# Specifies additional model settings

temperature: 0

model_name_or_path: gpt-4-turbo-preview

truncate: false

- name: SnowflakeExecutor

type: SnowflakeExecutor

params:

account: <SF-ACCOUNT>

user: <SF-USER>

warehouse: <SF-WAREHOUSE>

database: <SF-DB>

db_schema: <SF-DB-SCHEMA>

- name: display_template

type: PromptTemplate

params:

output_parser:

type: AnswerParser

prompt: |-

You are an expert data analyst.

Your role is to answer the user's question {query} using the information in the table.

You will base your response solely on the information provided in the table.

Do not rely on your knowledge base; only the data that is in the table.

Refrain from using the term "table" in your response, but instead, use the word "data"

If the table is blank say:

"The specific answer can't be found in the database. Try rephrasing your question."

Additionally, you will present the table in a tabular format and provide the SQL query used to extract the relevant rows from the database in Markdown.

If the table is larger than 10 rows, display the most important rows up to 10 rows. Your answer must be detailed and provide insights based on the question and the available data.

SQL query:

{results}

Table:

{table}

Answer:

- name: PromptNodeDisplay

type: PromptNode

params:

default_prompt_template: display_template

max_length: 4096 # The maximum number of tokens the generated answer can have

model_kwargs:

# Specifies additional model settings

temperature: 0

model_name_or_path: gpt-4-turbo-preview

truncate: false

# Here you define how the nodes are organized in the pipelines

# For each node, specify its input

pipelines:

- name: query

nodes:

- name: PromptNodeSQLGenerator

inputs: [Query]

- name: SnowflakeExecutor

inputs: [PromptNodeSQLGenerator]

- name: PromptNodeDisplay

inputs: [SnowflakeExecutor]

# Note: The indexing pipeline below is not actually used as we are not using any supporting files for the SQL generation

# But it has to be defined for dC

- name: indexing

nodes:

- name: TextConverter

inputs: [File]

- name: DocumentStore

inputs: [TextConverter]

components:

- name: DocumentStore

type: DeepsetCloudDocumentStore # The only supported document store in deepset Cloud

params:

embedding_dim: 1024

similarity: cosine

search_fields: ["table", "table_columns"]

- name: BM25Retriever # The keyword-based retriever

type: BM25Retriever

params:

document_store: DocumentStore

top_k: 20 # The number of results to return

- name: EmbeddingRetriever # Selects the most relevant documents from the document store

type: EmbeddingRetriever # Uses a Transformer model to encode the document and the query

params:

document_store: DocumentStore

embedding_model: intfloat/e5-large-v2 # Model optimized for semantic search. It has been trained on 215M (question, answer) pairs from diverse sources.

model_format: sentence_transformers

embed_meta_fields: ["table"]

top_k: 20 # The number of results to return

- name: JoinResults # Joins the results from both retrievers

type: JoinDocuments

params:

join_mode: concatenate # Combines documents from multiple retrievers

- name: Reranker # Uses a cross-encoder model to rerank the documents returned by the two retrievers

type: SentenceTransformersRanker

params:

model_name_or_path: intfloat/simlm-msmarco-reranker # Fast model optimized for reranking

top_k: 30 # The number of results to return

batch_size: 40 # Try to keep this number equal to or greater than the sum of the top_k of the two retrievers so all docs are processed at once

model_kwargs:

# Additional keyword arguments for the model

torch_dtype: torch.float16

- name: TextConverter # Converts files into documents

type: TextConverter

- name: sql_template

type: PromptTemplate

params:

prompt: |-

You are a SQL expert working with Snowflake.

Your task is to create a Snowflake SQL query for the given question.

Refrain from explaining your answer. Your answer must be the SQL query in plain text format without using Markdown.

Here are some relevant tables, a description about it, and their columns:

{join(documents, delimiter=new_line, pattern=new_line+'Table Name: $table'+new_line+'Table Description: $content'+new_line+'Table Columns:'+new_line+'$table_columns')}

User's question: {query}

Generated SQL query:

- name: PromptNodeSQLGenerator

type: PromptNode

params:

default_prompt_template: sql_template

max_length: 600 # The maximum number of tokens the generated SQL can have

model_kwargs:

# Specifies additional model settings

temperature: 0

model_name_or_path: gpt-4-turbo-preview

truncate: false

- name: SnowflakeExecutor

type: SnowflakeExecutor

params:

account: <SF-ACCOUNT>

user: <SF-USER>

warehouse: <SF-WAREHOUSE>

database: <SF-DB>

db_schema: <SF-DB-SCHEMA>

- name: display_template

type: PromptTemplate

params:

output_parser:

type: AnswerParser

prompt: |-

You are an expert data analyst.

Your role is to answer the user's question {query} using the information in the table.

You will base your response solely on the information provided in the table.

Do not rely on your knowledge base; only the data that is in the table.

Refrain from using the term "table" in your response, but instead, use the word "data"

If the table is blank say:

"The specific answer can't be found in the database. Try rephrasing your question."

Additionally, you will present the table in a tabular format and provide the SQL query used to extract the relevant rows from the database in Markdown.

If the table is larger than 10 rows, display the most important rows up to 10 rows. Your answer must be detailed and provide insights based on the question and the available data.

SQL query:

{results}

Table:

{table}

Answer:

- name: PromptNodeDisplay

type: PromptNode

params:

default_prompt_template: display_template

max_length: 4096 # The maximum number of tokens the generated answer can have

model_kwargs:

# Specifies additional model settings

temperature: 0

model_name_or_path: gpt-4-turbo-preview

truncate: false

# Here you define how the nodes are organized in the pipelines

# For each node, specify its input

pipelines:

- name: query

nodes:

- name: BM25Retriever

inputs: [Query]

- name: EmbeddingRetriever

inputs: [Query]

- name: JoinResults

inputs: [BM25Retriever, EmbeddingRetriever]

- name: Reranker

inputs: [JoinResults]

- name: PromptNodeSQLGenerator

inputs: [Reranker]

- name: SnowflakeExecutor

inputs: [PromptNodeSQLGenerator]

- name: PromptNodeDisplay

inputs: [SnowflakeExecutor]

- name: indexing

nodes:

- name: TextConverter

inputs: [File]

- name: EmbeddingRetriever

inputs: [TextConverter]

- name: DocumentStore

inputs: [ EmbeddingRetriever ]

Updated 4 months ago