Review Your Experiments

Check the details of your experiment run, such as the status, the metrics calculated, the predictions your pipeline returned, and more.

You must be an Admin to perform this task.

About This Task

You can review the following information about your experiment:

- The experiment status. If the experiment failed, check the Debug section to see what went wrong.

- The details of the experiment: the pipeline and evaluation set used.

- Metrics for pipeline components. You can see metrics for both integrated and isolated evaluation. For more information about metrics, see Experiment Metrics.

- The pipeline parameters and configuration used for this experiment. These may differ from the actual pipeline because you can update your pipeline just for an experiment run, without modifying the actual pipeline. You can't edit your pipeline in this view.

- Detailed predictions. Here you can see how your pipeline performed and which answers it returned (predicted answers) compared to the expected answers. For each predicted answer, deepset Cloud displays the exact match, F1 score, and rank. The predictions are shown for each node separately.

You can export this data to a CSV file. Open the node whose predictions you want to export and click Download CSV.
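To make the exact match and F1 columns easier to interpret, here is a minimal sketch of how these two scores are commonly computed for extractive question answering (the SQuAD-style token-overlap definition). This is an assumption for illustration only: this page doesn't show how deepset Cloud calculates them, and the function names `normalize`, `exact_match`, and `f1_score` are hypothetical.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation and English articles, and split into tokens."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(predicted: str, expected: str) -> int:
    """Return 1 if the normalized answers are identical, otherwise 0."""
    return int(normalize(predicted) == normalize(expected))


def f1_score(predicted: str, expected: str) -> float:
    """Harmonic mean of token precision and recall between the two answers."""
    pred_tokens = normalize(predicted)
    gold_tokens = normalize(expected)
    # If either answer is empty, the score is 1.0 only when both are empty.
    if not pred_tokens or not gold_tokens:
        return float(pred_tokens == gold_tokens)
    # Multiset intersection counts each shared token at most as often as it
    # appears in both answers.
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)
```

Under this definition, a predicted answer that contains the expected answer plus extra words gets an exact match of 0 but a nonzero F1, which is why the two columns can disagree for the same prediction.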

Compare Experiments

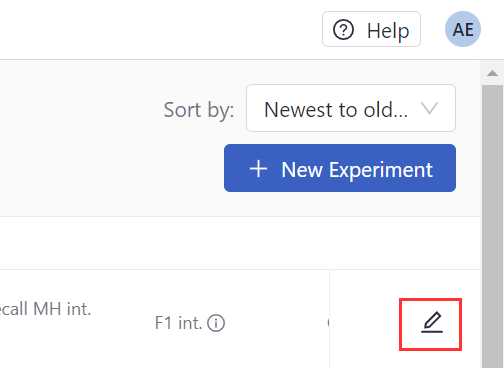

All your experiments are listed in a table on the Experiments page. You can configure the table columns to show the metrics you want to compare. Use the Customize the columns icon to choose the metrics.

Review an Experiment from the UI

- Log in to deepset Cloud and go to Experiments.

- Click the name of the experiment whose details you want to see. The Experiment Details page opens. You can see all the information about your experiment here.

Review an Experiment with REST API

Use the Get Eval Run API endpoint to display the results of an experiment run. You need to Generate an API Key first.

Copy and modify this code:

curl --request GET \
  --url 'https://api.cloud.deepset.ai/api/v1/workspaces/<WORKSPACE_NAME>/eval_runs/<EVAL_RUN_NAME>' \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer <YOUR_API_KEY>'
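The same request can also be sent from Python. The sketch below uses only the standard library and mirrors the URL and headers of the curl command. The function names `build_eval_run_request` and `get_eval_run` are hypothetical helpers, not part of any deepset SDK, and the structure of the JSON response isn't described on this page, so the code returns it unmodified.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.cloud.deepset.ai/api/v1"


def build_eval_run_request(workspace: str, eval_run: str, api_key: str) -> urllib.request.Request:
    """Build the GET request for one experiment run (same call as the curl command)."""
    # Percent-encode both names in case they contain spaces or slashes.
    url = (
        f"{API_BASE}/workspaces/{urllib.parse.quote(workspace, safe='')}"
        f"/eval_runs/{urllib.parse.quote(eval_run, safe='')}"
    )
    return urllib.request.Request(
        url,
        method="GET",
        headers={
            "Accept": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )


def get_eval_run(workspace: str, eval_run: str, api_key: str) -> dict:
    """Send the request and return the decoded JSON body.

    urllib raises urllib.error.HTTPError for 4xx and 5xx responses,
    for example when the API key is invalid or the run doesn't exist.
    """
    request = build_eval_run_request(workspace, eval_run, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

To try it, call `get_eval_run("<WORKSPACE_NAME>", "<EVAL_RUN_NAME>", "<YOUR_API_KEY>")` with your own values and print the result with `json.dumps(result, indent=2)` to inspect the fields the endpoint returns.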

