Improving Your Question Answering Pipeline

Experiments sometimes fail or fall short of your expectations, but that can be fixed. This page helps you figure out how to tweak your question answering pipeline to achieve the results you want.

This page focuses on analyzing extractive QA pipelines. If your pipeline didn't live up to your expectations, consider optimizing the Splitter, Retriever, or Reader.

Optimizing the Splitter

Splitters, like DocumentSplitter, split your documents into smaller ones. To improve retrieval, adjust the value of the split_length parameter. It's best to set this parameter to a value between 200 and 500 words, because there is a limit on the number of words an embedding retriever can process. The exact limit depends on the model you use for your retriever, but once a document exceeds it, the retriever cuts off the rest of the document and ignores it.
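As a minimal sketch of the setting described above, a splitter entry in an indexing pipeline could look like the fragment below. The component name splitter is hypothetical, and the value 250 is only an illustrative pick from the recommended range, not a tuned setting.

```yaml
components:
  splitter: # Hypothetical component name, used here for illustration
    type: haystack.components.preprocessors.document_splitter.DocumentSplitter
    init_parameters:
      split_by: word # Measure the document length in words
      split_length: 250 # Illustrative value within the recommended 200-500 range
```

If the retriever model has a short input limit, a value closer to 200 is the safer starting point.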

Optimizing the Retriever

When evaluating the Retriever, it's good to start with OpenSearchBM25Retriever, as it's the standard. Here's what you can try:

- Increase the number of documents the retriever returns. Use the top_k parameter to do that. This will increase the chances that the retriever sees a document containing the right answer. Doing this, though, slows down the search system as the reader must then check more documents.
- Replace the OpenSearchBM25Retriever with OpenSearchEmbeddingRetriever. Have a look at the Models for Information Retrieval and check if any of them outperforms the BM25 retriever.
- Combine the output of both BM25Retriever and EmbeddingRetriever. You can do this with the DocumentJoiner component. If you use DocumentJoiner, specify the number of documents it should return. By default, it returns all unique documents from the combined outputs of both retrievers. You must remember, though, that the more documents the reader has to go through, the slower the search is.
  - To control the number of documents DocumentJoiner returns, use top_k in this node.
  - You also need to choose the join_mode. This controls how the two lists of results are combined. We recommend reciprocal_rank_fusion. The other options you can try are concatenate, merge, and distribution_based_rank_fusion. The join_mode parameter only affects the reader results if you set top_k as, by default, DocumentJoiner passes all documents to the reader.


A YAML template for a pipeline with combined retrievers

components:
  bm25_retriever: # Selects the most similar documents from the document store
    type: haystack_integrations.components.retrievers.opensearch.bm25_retriever.OpenSearchBM25Retriever
    init_parameters:
      document_store:
        type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
        init_parameters:
          use_ssl: True
          verify_certs: False
          hosts:
            - ${OPENSEARCH_HOST}
          http_auth:
            - "${OPENSEARCH_USER}"
            - "${OPENSEARCH_PASSWORD}"
          embedding_dim: 768
          similarity: cosine
      top_k: 20 # The number of results to return

  query_embedder:
    type: haystack.components.embedders.sentence_transformers_text_embedder.SentenceTransformersTextEmbedder
    init_parameters:
      model: "intfloat/e5-base-v2"

  embedding_retriever: # Selects the most similar documents from the document store
    type: haystack_integrations.components.retrievers.opensearch.embedding_retriever.OpenSearchEmbeddingRetriever
    init_parameters:
      document_store:
        type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
        init_parameters:
          use_ssl: True
          verify_certs: False
          hosts:
            - ${OPENSEARCH_HOST}
          http_auth:
            - "${OPENSEARCH_USER}"
            - "${OPENSEARCH_PASSWORD}"
          embedding_dim: 768
          similarity: cosine
      top_k: 20 # The number of results to return

  document_joiner:
    type: haystack.components.joiners.document_joiner.DocumentJoiner
    init_parameters:
      join_mode: concatenate

  ranker:
    type: haystack.components.rankers.transformers_similarity.TransformersSimilarityRanker
    init_parameters:
      model: "intfloat/simlm-msmarco-reranker"
      top_k: 10
      model_kwargs:
        torch_dtype: "torch.float16"

  reader:
    type: haystack.components.readers.extractive.ExtractiveReader
    init_parameters:
      answers_per_seq: 20
      calibration_factor: 1.0
      max_seq_length: 384
      model: "deepset/deberta-v3-large-squad2"
      model_kwargs:
        torch_dtype: "torch.float16"
      no_answer: false
      top_k: 10

connections: # Defines how the components are connected
  - sender: bm25_retriever.documents
    receiver: document_joiner.documents
  - sender: query_embedder.embedding
    receiver: embedding_retriever.query_embedding
  - sender: embedding_retriever.documents
    receiver: document_joiner.documents
  - sender: document_joiner.documents
    receiver: ranker.documents
  - sender: ranker.documents
    receiver: reader.documents

max_loops_allowed: 100

inputs: # Define the inputs for your pipeline
  query: # These components will receive the query as input
    - "bm25_retriever.query"
    - "query_embedder.text"
    - "ranker.query"
    - "reader.query"
  filters: # These components will receive a potential query filter as input
    - "bm25_retriever.filters"
    - "embedding_retriever.filters"

outputs: # Defines the output of your pipeline
  documents: "ranker.documents" # The output of the pipeline is the retrieved documents
  answers: "reader.answers" # The output of the pipeline is the extracted answers
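The template above uses the concatenate join mode and lets DocumentJoiner pass on every document it receives. To try the recommended reciprocal_rank_fusion mode together with a document limit, the document_joiner entry could be changed along these lines. This is a sketch only: the top_k value of 20 is an assumption chosen for illustration, not a value taken from the template.

```yaml
components:
  document_joiner:
    type: haystack.components.joiners.document_joiner.DocumentJoiner
    init_parameters:
      join_mode: reciprocal_rank_fusion # Recommended mode for combining the two result lists
      top_k: 20 # Assumed value; limits how many joined documents are passed on
```

Because the ranker in the template already keeps only 10 documents, it's worth comparing results with and without the joiner limit before settling on a value.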

Optimizing the Reader

Finding the Right Model

Metrics are an important indicator of your pipeline's performance, but you should also look into the predictions and let other users test your pipeline to get a better understanding of its weaknesses. To get an idea of what metric values you can achieve, check the metrics for state-of-the-art models on Hugging Face. Make sure you're checking the models trained on question answering datasets. Ideally, you should find a dataset that closely mirrors the one you're evaluating in Haystack Enterprise Platform.

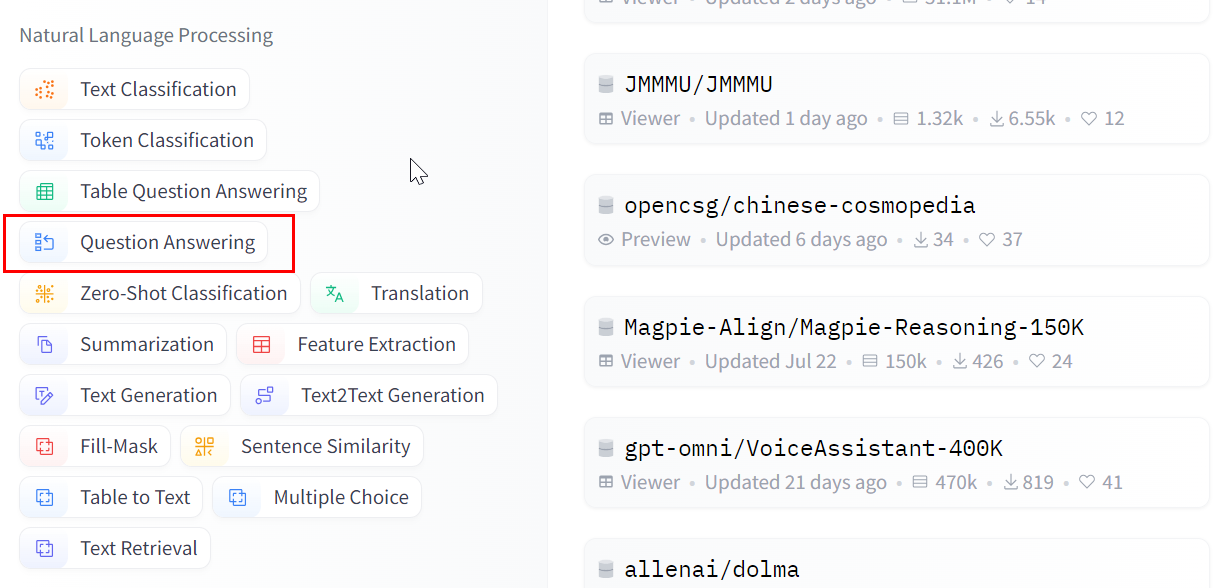

For example, to find models trained on a particular dataset on Hugging Face, go to Datasets > Tasks and choose question-answering under the Natural Language Processing category.

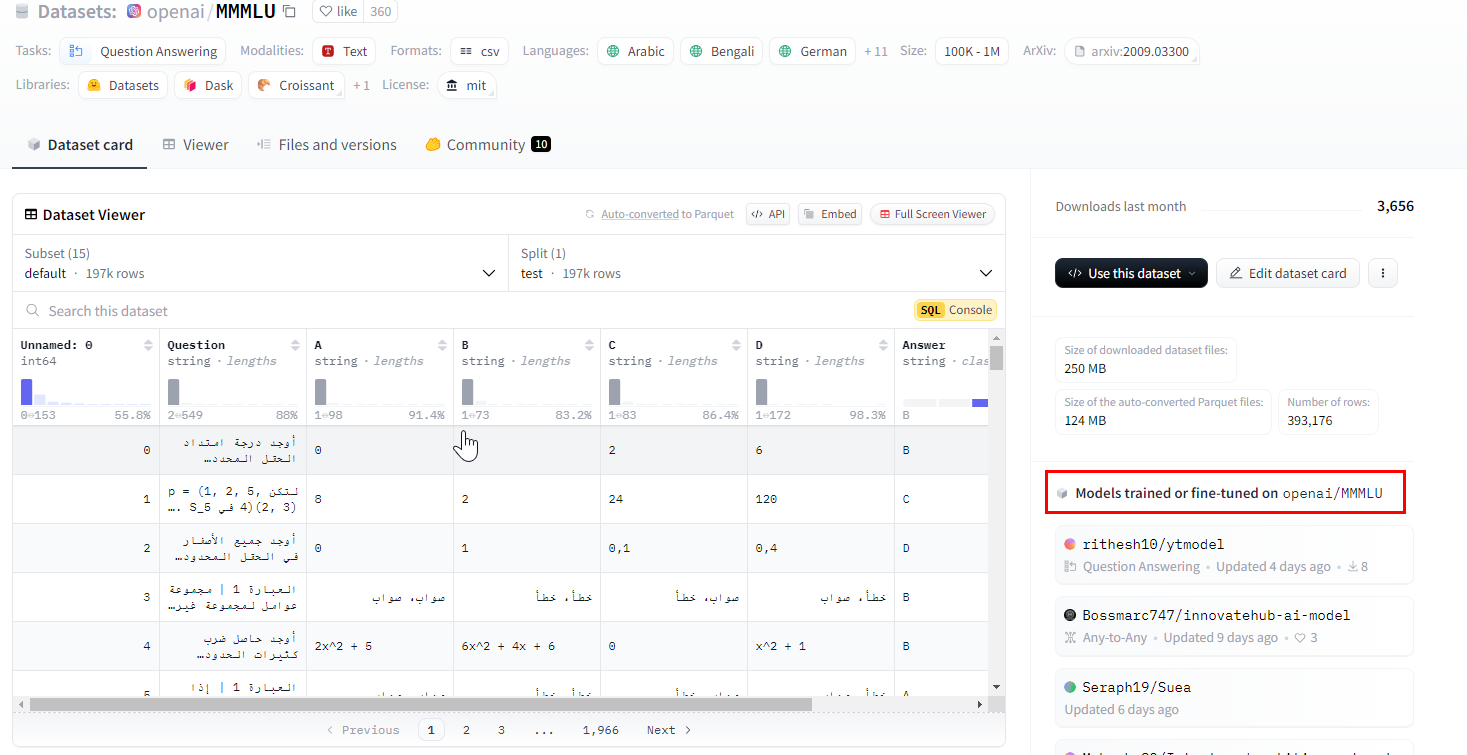

When you click a particular dataset, you can see its details and all the models trained on it. You can then check the model cards for performance benchmarks.

Try different reader models. For more guidance, see Models for Question Answering.

When choosing a model, remember that larger models usually perform better but are slower than smaller ones. You must decide on a balance between reader accuracy and search latency that you're comfortable with.
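As a hedged illustration of that trade-off, the reader entry could point to a smaller model. The model deepset/roberta-base-squad2 is used here only as an example of a smaller extractive QA model on Hugging Face. It is an assumption for this sketch, not a recommendation from this page, and the other values are copied from the template on this page.

```yaml
components:
  reader:
    type: haystack.components.readers.extractive.ExtractiveReader
    init_parameters:
      model: "deepset/roberta-base-squad2" # Assumed example of a smaller, faster model
      max_seq_length: 384
      no_answer: false
      top_k: 10
```

Evaluate each candidate on the same evaluation set so that the accuracy and latency numbers are comparable.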

Fine-Tuning the Reader Model

If you find that none of the available models perform well enough, you can always fine-tune a model. To do that, you need to collect and annotate data. Our experience is that collecting feedback in Haystack Enterprise Platform doesn't boost the reader performance as much as annotated data.

Once you have all the data, fine-tune the best-performing reader models you tried in the previous steps with the data you collected.

Reducing Duplicate Answers

If your search returns duplicate answers coming from overlapping documents, try reducing the split_overlap value of DocumentSplitter to a value around or below 10.
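A minimal sketch of such a setting is shown below. The component name splitter is hypothetical, and the split_by and split_length values are assumptions added only to make the fragment complete.

```yaml
components:
  splitter: # Hypothetical component name, used here for illustration
    type: haystack.components.preprocessors.document_splitter.DocumentSplitter
    init_parameters:
      split_by: word # Assumed unit, so the overlap is counted in words
      split_length: 250 # Assumed value
      split_overlap: 10 # Fewer shared words between neighboring chunks
```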
