ReferencePredictor

Use this component in retrieval augmented generation (RAG) pipelines to predict references for the generated answer.

This component is deprecated. It will continue to work in your existing pipelines. You can use LLM-generated references instead. For more information, see Enable References for Generated Answers.

Basic Information

- Type: deepset_cloud_custom_nodes.augmenters.reference_predictor.ReferencePredictor
- Components it can connect with:
  - Generators: A Generator sends the generated replies to ReferencePredictor, which adds predicted references to each answer.
  - AnswerBuilder: AnswerBuilder can receive the answers with references from ReferencePredictor.

Inputs

| Parameter | Type | Default | Description |
|---|---|---|---|
| answers | List[GeneratedAnswer] | | The generated answers to which you want to add references. |

Outputs

| Parameter | Type | Default | Description |
|---|---|---|---|
| answers | List[GeneratedAnswer] | | Generated answers with a metadata field _references added to each answer. The field contains a list of references the answer was based on. Each reference contains the following fields: document_start_idx (the starting position of the reference in the document); document_end_idx (the end position of the reference in the document); answer_start_idx (the starting position of the reference in the answer); answer_end_idx (the end position of the reference in the answer); score (the score expressing the strength of the association between the answer span and the document reference span); document_id (the ID of the referenced document); document_position (a 1-based index of the referenced document among all documents passed in for prediction); label (the label for the reference). |

Overview

ReferencePredictor shows references to the documents on which the LLM's answer is based. Pipelines that contain ReferencePredictor return answers with references next to them. You can view each reference to check that the answer is grounded in the referenced text and that the model didn't hallucinate.

The default ReferencePredictor model only works for English data. For other languages, use DeepsetAnswerBuilder, and in the prompt, instruct the model to add references to the generated answers. For details, see Enable References for Generated Answers.

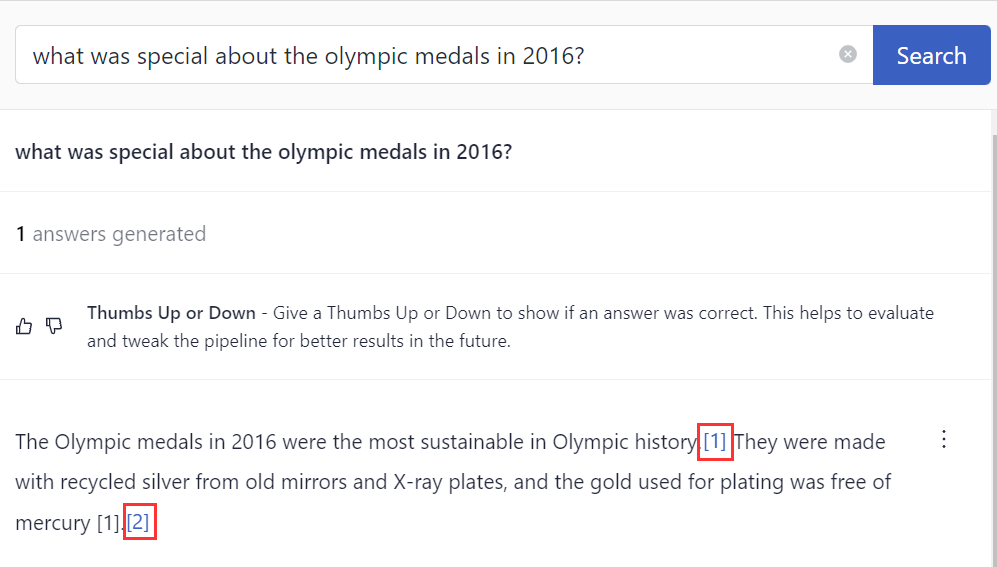

This is what the references look like in the interface:

ReferencePredictor adds a meta field _references to each answer containing a list of references. Each reference contains the following fields:

- document_start_idx: Start position of the reference in the document string.

- document_end_idx: End position of the reference in the document string.

- answer_start_idx: Start position of the reference in the answer string.

- answer_end_idx: End position of the reference in the answer string.

- score: Score expressing the strength of the association between answer span and document reference span.

- document_id: ID of the referenced document.

- document_position: 1-based index of the referenced document among all documents passed in for prediction.

- label: Label for the reference.
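The fields above can be used to recover the referenced text from the answer and document strings. The following is a minimal sketch, not the component's own code. It assumes that each reference is available as a plain dictionary, that end indices are exclusive (as in Python slicing), and that the documents are given as a list of strings in the order they were passed in. The helper name resolve_reference and the sample data, including the label value, are made up for illustration.

```python
from typing import Any, Dict, List, Tuple


def resolve_reference(
    answer_text: str, documents: List[str], ref: Dict[str, Any]
) -> Tuple[str, str]:
    """Return (answer span, document span) for one reference entry."""
    # Assumption: end indices are exclusive, as in Python slicing.
    answer_span = answer_text[ref["answer_start_idx"]:ref["answer_end_idx"]]
    # document_position is 1-based, so subtract 1 to index the list.
    document = documents[ref["document_position"] - 1]
    document_span = document[ref["document_start_idx"]:ref["document_end_idx"]]
    return answer_span, document_span


# Made-up data for illustration only.
documents = ["Berlin is the capital of Germany. It has about 3.7 million inhabitants."]
answer_text = "The capital of Germany is Berlin."
reference = {
    "answer_start_idx": 0,
    "answer_end_idx": 33,
    "document_start_idx": 0,
    "document_end_idx": 33,
    "score": 0.97,
    "document_id": "doc-1",
    "document_position": 1,
    "label": "grounded",  # hypothetical label value
}

answer_span, document_span = resolve_reference(answer_text, documents, reference)
print(f"{answer_span!r} is supported by {document_span!r}")
```

If the indices in your pipeline turn out to be inclusive, adjust the slices by one.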

Recommended settings

Reference Prediction Model

The reference prediction model is the model ReferencePredictor uses to score the similarity between sentences in the answer and sentences in the source documents. The default model is cross-encoder/ms-marco-MiniLM-L-6-v2.
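To illustrate how similarity scores could turn into references, here is a rough sketch that does not call the actual model. It assumes the model has already produced one raw logit per document span for a single answer span, applies a sigmoid (the default function_to_apply), and then either keeps the best-scoring span or, if a reference_threshold is given, every span at or above it. The function names and the use of >= for the threshold comparison are assumptions, not the component's implementation.

```python
import math
from typing import List, Optional


def sigmoid(logit: float) -> float:
    """Numerically stable sigmoid that maps a logit to a score in (0, 1)."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    e = math.exp(logit)
    return e / (1.0 + e)


def pick_references(
    logits: List[float], reference_threshold: Optional[float] = None
) -> List[int]:
    """Return the indices of document spans kept as references for one answer span."""
    scores = [sigmoid(x) for x in logits]
    if not scores:
        return []
    if reference_threshold is None:
        # No threshold: keep only the span with the maximum score.
        best = max(range(len(scores)), key=scores.__getitem__)
        return [best]
    # Assumption: a span qualifies when its score is at or above the threshold.
    return [i for i, s in enumerate(scores) if s >= reference_threshold]


# Made-up logits for three document spans.
print(pick_references([-2.0, 3.0, 0.5]))
print(pick_references([-2.0, 3.0, 0.5], reference_threshold=0.6))
```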

Verifiability Model

In the verifiability_model_name_or_path parameter, you can specify the model that checks whether the generated answers need verification. ReferencePredictor uses the tstadel/answer-classification-setfit-v2-binary model by default. We trained this model to reject answers that are noise, out of context, or statements that no answer was found. It was trained on English data and works at the sentence level, meaning it verifies full sentences. If your data is in other languages, either provide your own model or set verifiability_model_name_or_path to null.

Splitting Rules

To make sure answers are split correctly, we recommend applying additional rules to the sentence-splitting tokenizer. To apply the rules, set use_split_rules to True.

Abbreviations

We recommend extending the list of abbreviations the Punkt tokenizer recognizes to ensure better sentence splitting. You can do this by setting extend_abbreviations to True.

Usage Example

Using the Component in a Pipeline

This is an example of a query pipeline in which ReferencePredictor sends answers with references to AnswerBuilder.

    components:
      ...
      reference_predictor:
        type: deepset_cloud_custom_nodes.augmenters.reference_predictor.ReferencePredictor
        init_parameters:
          use_split_rules: True
          extend_abbreviations: True
      answer_builder:
        type: haystack.components.builders.answer_builder.AnswerBuilder
        init_parameters: {} # In this example, we're using AnswerBuilder with default parameters
      ...

    connections:
      ...
      - sender: reference_predictor.answers
        receiver: answer_builder.answers

Parameters

Init Parameters

These are the parameters you can configure in Pipeline Builder:

| Parameter | Type | Default | Description |
|---|---|---|---|
| model | str | cross-encoder/ms-marco-MiniLM-L-6-v2 | The name of the model on the Hugging Face Hub or the path to a local model folder. The default model is cross-encoder/ms-marco-MiniLM-L-6-v2. |
| revision | Optional[str] | None | The revision of the model to be used. |
| max_seq_len | int | 512 | The maximum number of tokens that a sequence should be truncated to before inference. |
| language | Language | en | The language of the data that you want to generate references for. The language is needed to apply the right sentence splitting rules. |
| device | Optional[ComponentDevice] | None | The device on which the model is loaded. If None, the default device is automatically selected. If a device/device map is specified in huggingface_pipeline_kwargs, it overrides this parameter. |
| batch_size | int | 16 | The batch size that should be used for inference. |
| answer_window_size | int | 1 | How many sentences of an answer should be packed into one span for inference. |
| document_window_size | int | 3 | How many sentences of a document should be packed into one span for inference. |
| token | Optional[Secret] | Secret.from_env_var('HF_API_TOKEN', strict=False) | The token to use as HTTP bearer authorization for remote files. |
| function_to_apply | str | sigmoid | The activation function to use on top of the logits. Available options: sigmoid, softmax, none. |
| min_score_2_label_thresholds | Optional[Dict] | None | The minimum prediction score threshold for each corresponding label. |
| label_2_score_map | Optional[Dict] | None | If using a model with a multi-label prediction head, pass in a dict mapping label names to a float value that will be used as score. |
| reference_threshold | Optional[int] | None | If using this component to generate references for answer spans, you can pass in a minimum score threshold that determines if a prediction should be included as a reference or not. If no threshold is passed, the reference is chosen by picking the maximum score. |
| default_class | str | not_grounded | A fallback class that should be used if the predicted score doesn't match any threshold. |
| verifiability_model | Optional[str] | tstadel/answer-classification-setfit-v2-binary | The name of the verifiability model on the Hugging Face Hub or the path to a local model folder. The default model is tstadel/answer-classification-setfit-v2-binary. |
| verifiability_revision | Optional[str] | None | The revision of the verifiability model to be used. |
| verifiability_batch_size | int | 32 | The batch size that should be used for verifiability inference. |
| needs_verification_classes | Optional[List[str]] | None | The class names to be used to determine if a sentence needs verification. Defaults to ["needs_verification"]. |
| use_split_rules | bool | False | If True, additional rules for better splitting answers are applied to the sentence splitting tokenizer. |
| extend_abbreviations | bool | False | If True, the abbreviations used by NLTK's PunktTokenizer are extended by a list of curated abbreviations if available. If False, the default abbreviations are used. |
| answer_stride | int | 1 | The stride size for the answer window. |
| document_stride | int | 3 | The stride size for the document window. |
| model_kwargs | Optional[Dict] | None | Additional keyword arguments for the model. |
| verifiability_model_kwargs | Optional[Dict] | None | Additional keyword arguments for the verifiability model. |
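The window size and stride parameters are easier to reason about with a small example. The sketch below shows one plausible way sentences could be packed into spans. It assumes a plain sliding window in which a shorter final span is kept. The function make_windows is hypothetical, and the component's actual handling of edge cases may differ.

```python
from typing import List


def make_windows(sentences: List[str], window_size: int, stride: int) -> List[str]:
    """Pack sentences into spans of up to window_size sentences, moving by stride."""
    if window_size < 1 or stride < 1:
        raise ValueError("window_size and stride must be at least 1")
    spans = []
    for start in range(0, len(sentences), stride):
        spans.append(" ".join(sentences[start:start + window_size]))
        # Stop once the current window already reaches the last sentence.
        if start + window_size >= len(sentences):
            break
    return spans


sentences = ["A.", "B.", "C.", "D.", "E."]

# Defaults for documents: document_window_size=3, document_stride=3 (no overlap).
document_spans = make_windows(sentences, window_size=3, stride=3)

# Defaults for answers: answer_window_size=1, answer_stride=1 (one sentence per span).
answer_spans = make_windows(sentences, window_size=1, stride=1)

# A stride smaller than the window size yields overlapping spans.
overlapping_spans = make_windows(sentences, window_size=3, stride=1)

print(document_spans, answer_spans, overlapping_spans, sep="\n")
```

Under these assumptions, a stride equal to the window size covers each sentence once, while a smaller stride trades more inference calls for overlapping context.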

Run Method Parameters

These are the parameters you can configure for the component's run() method. This means you can pass these parameters at query time through the API, in Playground, or when running a job. For details, see Modify Pipeline Parameters at Query Time.

| Parameter | Type | Default | Description |
|---|---|---|---|
| answers | List[GeneratedAnswer] | | Replies returned by the Generator. |
