DeepsetCSVRowsToDocumentsConverter

Read CSV files from various sources and convert each row into a Haystack document.

Basic Information

- Type: deepset_cloud_custom_nodes.converters.csv_rows_to_documents.DeepsetCSVRowsToDocumentsConverter
- Components it can connect with:
  - FileTypeRouter: DeepsetCSVRowsToDocumentsConverter can receive CSV files from FileTypeRouter.
  - DocumentJoiner: DeepsetCSVRowsToDocumentsConverter can send converted documents to DocumentJoiner. This is useful if you have other converters in your pipeline and want to join their output with DeepsetCSVRowsToDocumentsConverter's output before sending it further down the pipeline.

Inputs

| Parameter | Type | Default | Description |
|---|---|---|---|
| sources | List[Union[str, Path, ByteStream]] | | List of CSV file paths (str or Path) or ByteStream objects. |
| meta | Optional[Union[Dict[str, Any], List[Dict[str, Any]]]] | None | Optional metadata to attach to the documents. Can be a single dict or a list of dicts. |

Outputs

| Parameter | Type | Default | Description |
|---|---|---|---|
| documents | List[Document] | | A list of Haystack documents, one for each CSV row. The component returns it in a dictionary under the documents key. |

Overview

DeepsetCSVRowsToDocumentsConverter reads a CSV file and converts each row into a Document object. By default, it uses the column named content as the document's main content. To use a different column, set the content_column parameter.

All other columns are added to the document’s metadata.
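The row-to-document mapping described above can be sketched with the Python standard library alone. This is an illustration and not the component's actual implementation: SketchDocument and rows_to_documents are hypothetical names, the sample CSV is made up, and raising an error when the content column is missing is an assumption, since this page doesn't say how the component handles that case.

```python
import csv
import io
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class SketchDocument:
    """Hypothetical stand-in for a Haystack Document: content plus metadata."""
    content: str
    meta: Dict[str, Any] = field(default_factory=dict)


def rows_to_documents(
    raw: bytes, content_column: str = "content", encoding: str = "utf-8"
) -> List[SketchDocument]:
    """Turn each CSV row into one document; all other columns become metadata."""
    reader = csv.DictReader(io.StringIO(raw.decode(encoding), newline=""))
    documents = []
    for row in reader:
        # Assumption: a missing content column is treated as an error in this sketch.
        if content_column not in row:
            raise ValueError(f"Column '{content_column}' not found in the CSV header")
        meta = {key: value for key, value in row.items() if key != content_column}
        documents.append(SketchDocument(content=row[content_column], meta=meta))
    return documents


# Made-up sample data: the "content" column holds the text, the rest is metadata.
csv_bytes = b"content,author,year\nFirst row text,Ada,2023\nSecond row text,Grace,2024\n"
docs = rows_to_documents(csv_bytes)
for doc in docs:
    print(doc.content, doc.meta)
```

Note that the csv module reads every value as a string, so a column such as year ends up in the metadata as text in this sketch.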

Usage Example

Using the Component in a Pipeline

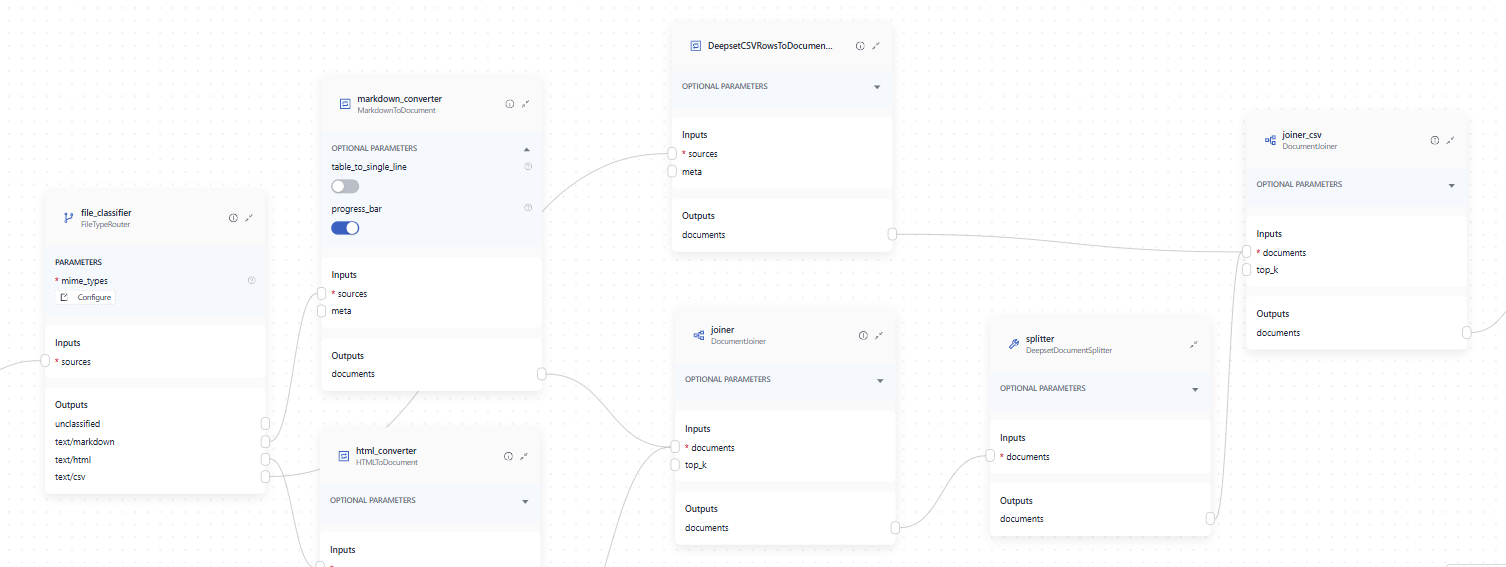

This is an example of an index that processes multiple file types. It starts with FilesInput followed by file_classifier (FileTypeRouter) which classifies files by type and sends them to an appropriate converter.

DeepsetCSVRowsToDocumentsConverter receives CSV files from file_classifier (FileTypeRouter) and outputs a list of pre-chunked documents. Since these documents are already chunked, they bypass the splitter (DeepsetDocumentSplitter) and go directly to joiner_csv (DocumentJoiner). The DocumentJoiner combines documents from both the DeepsetCSVRowsToDocumentsConverter and the splitter (DeepsetDocumentSplitter) into a single list. This joined list is then sent to the document_embedder (SentenceTransformersDocumentEmbedder) and finally to the writer (DocumentWriter), which writes them into the document store.

YAML configuration:

components:
  file_classifier:
    type: haystack.components.routers.file_type_router.FileTypeRouter
    init_parameters:
      mime_types:
        - text/markdown
        - text/html
        - text/csv
  markdown_converter:
    type: haystack.components.converters.markdown.MarkdownToDocument
    init_parameters: {}
  html_converter:
    type: haystack.components.converters.html.HTMLToDocument
    init_parameters:
      extraction_kwargs:
        output_format: txt
        target_language: null
        include_tables: true
        include_links: false
  joiner:
    type: haystack.components.joiners.document_joiner.DocumentJoiner
    init_parameters:
      join_mode: concatenate
  joiner_csv:
    type: haystack.components.joiners.document_joiner.DocumentJoiner
    init_parameters:
      join_mode: concatenate
  splitter:
    type: deepset_cloud_custom_nodes.preprocessors.document_splitter.DeepsetDocumentSplitter
    init_parameters:
      split_by: word
      split_length: 250
      split_overlap: 30
      respect_sentence_boundary: true
      language: en
  document_embedder:
    type: haystack.components.embedders.sentence_transformers_document_embedder.SentenceTransformersDocumentEmbedder
    init_parameters:
      model: intfloat/e5-base-v2
  writer:
    type: haystack.components.writers.document_writer.DocumentWriter
    init_parameters:
      document_store:
        type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
        init_parameters:
          embedding_dim: 768
          similarity: cosine
      policy: OVERWRITE
  DeepsetCSVRowsToDocumentsConverter:
    type: deepset_cloud_custom_nodes.converters.csv_rows_to_documents.DeepsetCSVRowsToDocumentsConverter
    init_parameters:
      content_column: content
      encoding: utf-8

connections:
  - sender: file_classifier.text/markdown
    receiver: markdown_converter.sources
  - sender: file_classifier.text/html
    receiver: html_converter.sources
  - sender: markdown_converter.documents
    receiver: joiner.documents
  - sender: html_converter.documents
    receiver: joiner.documents
  - sender: joiner.documents
    receiver: splitter.documents
  - sender: splitter.documents
    receiver: joiner_csv.documents
  - sender: joiner_csv.documents
    receiver: document_embedder.documents
  - sender: document_embedder.documents
    receiver: writer.documents
  - sender: file_classifier.text/csv
    receiver: DeepsetCSVRowsToDocumentsConverter.sources
  - sender: DeepsetCSVRowsToDocumentsConverter.documents
    receiver: joiner_csv.documents

max_loops_allowed: 100

metadata: {}

inputs:
  files:
    - file_classifier.sources

Parameters

Init Parameters

These are the parameters you can configure in Pipeline Builder:

| Parameter | Type | Default | Description |
|---|---|---|---|
| content_column | str | content | Name of the column to use as content when processing the CSV file. |
| encoding | str | utf-8 | Encoding type to use when reading the files. |

Run Method Parameters

These are the parameters you can configure for the component's run() method. This means you can pass these parameters at query time through the API, in Playground, or when running a job. For details, see Modify Pipeline Parameters at Query Time.

| Parameter | Type | Default | Description |
|---|---|---|---|
| sources | List[Union[str, Path, ByteStream]] | | List of CSV file paths (str or Path) or ByteStream objects. |
| meta | Optional[Union[Dict[str, Any], List[Dict[str, Any]]]] | None | Optional metadata to attach to the documents. Can be a single dict or a list of dicts. |
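Because meta accepts either a single dict or a list of dicts, it can help to see one plausible way such a value is expanded to one metadata dict per source. The sketch below is an assumption and not the component's documented behavior: it supposes that a single dict applies to every source, that a list pairs with the sources in order, and that a length mismatch is an error. normalize_meta and the file names are hypothetical.

```python
from typing import Any, Dict, List, Optional, Union

Meta = Optional[Union[Dict[str, Any], List[Dict[str, Any]]]]


def normalize_meta(meta: Meta, sources_count: int) -> List[Dict[str, Any]]:
    """Expand meta into exactly one metadata dict per source (assumed behavior)."""
    if meta is None:
        # No metadata given: every source gets an empty dict.
        return [{} for _ in range(sources_count)]
    if isinstance(meta, dict):
        # Assumption: a single dict is copied for every source.
        return [dict(meta) for _ in range(sources_count)]
    if len(meta) != sources_count:
        # Assumption: a list must contain one dict per source.
        raise ValueError("The metadata list must have one entry per source.")
    return [dict(entry) for entry in meta]


sources = ["products.csv", "reviews.csv"]  # hypothetical file names
shared = normalize_meta({"department": "sales"}, len(sources))
per_source = normalize_meta([{"lang": "en"}, {"lang": "de"}], len(sources))
print(shared)
print(per_source)
```

Copying each dict keeps later changes to one document's metadata from leaking into documents that came from another source.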
