Use NVIDIA Models

Use models from NVIDIA in your pipelines.

About This Task

You can use self-hosted models from the NVIDIA API catalog or models deployed on NVIDIA NIM.

Prerequisites

You need an active NVIDIA API key. For details on how to obtain it, see NVIDIA documentation.

Use NVIDIA Models

First, connect Haystack Enterprise Platform to NVIDIA through the Integrations page. You can set up the connection for a single workspace or for the whole organization:

Add Workspace-Level Integration

- Click your profile icon and choose Settings.

- Go to Workspace>Integrations.

- Find the provider you want to connect and click Connect next to them.

- Enter the API key and any other required details.

- Click Connect. You can use this integration in pipelines and indexes in the current workspace.

Add Organization-Level Integration

- Click your profile icon and choose Settings.

- Go to Organization>Integrations.

- Find the provider you want to connect and click Connect next to them.

- Enter the API key and any other required details.

- Click Connect. You can use this integration in pipelines and indexes in all workspaces in the current organization.

Then, add a component that uses a model hosted on NVIDIA to your pipeline. Here are the components by the model type they use:

-

Embedding models:

NvidiaTextEmbedder: Uses an embedding model to calculate vector representations of text. Often used in query pipelines to embed the query string and send it to an embedding retriever.NvidiaDocumentEmbedder: Uses an embedding model to calculate embeddings of documents. Often used in indexes to embed documents and send them to DocumentWriter.Embedding Models in Query Pipelines and Indexes

The embedding model you use to embed documents in your indexing pipeline must be the same as the embedding model you use to embed the query in your query pipeline.

This means the embedders for your indexing and query pipelines must match. For example, if you use

CohereDocumentEmbedderto embed your documents, you should useCohereTextEmbedderwith the same model to embed your queries.

-

LLMs:

NvidiaGenerator: Generates text using models from NVIDIA. Often used in RAG pipelines.

Usage Examples

This is a YAML example of how to use embedding models and an LLM hosted on NVIDIA in an index and a query pipeline (each in a separate tab):

- Query Pipeline

- Index

components:

...

query_embedder:

type: haystack_integrations.components.embedders.nvidia.text_embedder.NvidiaTextEmbedder

init_parameters:

api_url: "https://ai.api.nvidia.com/v1/retrieval/nvidia" # custom API URL for NVIDIA NIM.

model: "NV-Embed-QA" # the model to use

retriever:

type: haystack_integrations.components.retrievers.opensearch.embedding_retriever.OpenSearchEmbeddingRetriever

init_parameters:

document_store:

init_parameters:

use_ssl: True

verify_certs: False

http_auth:

- "${OPENSEARCH_USER}"

- "${OPENSEARCH_PASSWORD}"

type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore

top_k: 20

prompt_builder:

type: haystack.components.builders.prompt_builder.PromptBuilder

init_parameters:

template: |-

You are a technical expert.

You answer questions truthfully based on provided documents.

For each document check whether it is related to the question.

Only use documents that are related to the question to answer it.

Ignore documents that are not related to the question.

If the answer exists in several documents, summarize them.

Only answer based on the documents provided. Don't make things up.

If the documents can't answer the question or you are unsure say: 'The answer can't be found in the text'.

These are the documents:

{% for document in documents %}

Document[{{ loop.index }}]:

{{ document.content }}

{% endfor %}

Question: {{question}}

Answer:

generator:

type: haystack_integrations.components.generators.nvidia.generator.NvidiaGenerator

init_parameters:

model: "meta/llama3-70b-instruct" # here, pass the name of the model to use

api_url: "https://integrate.api.nvidia.com/v1"

model_arguments:

temperature: 0.2

top_p: 0.7

max_tokens: 1024

answer_builder:

init_parameters: {}

type: haystack.components.builders.answer_builder.AnswerBuilder

...

connections:

...

- sender: query_embedder.embedding

receiver: retriever.query_embedding

- sender: retriever.documents

receiver: prompt_builder.documents

- sender: prompt_builder.prompt

receiver: generator.prompt

- sender: generator.replies

receiver: answer_builder.replies

...

components:

...

splitter:

type: haystack.components.preprocessors.document_splitter.DocumentSplitter

init_parameters:

split_by: word

split_length: 250

split_overlap: 30

document_embedder:

type: haystack_integrations.components.embedders.nvidia.document_embedder.NvidiaDocumentEmbedder

init_parameters:

api_url: "https://ai.api.nvidia.com/v1/retrieval/nvidia" # A required custom NVIDIA API URL for NVIDIA NIM

model: "NV-Embed-QA" # the model to use

writer:

type: haystack.components.writers.document_writer.DocumentWriter

init_parameters:

document_store:

type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore

init_parameters:

embedding_dim: 768

similarity: cosine

policy: OVERWRITE

connections: # Defines how the components are connected

...

- sender: splitter.documents

receiver: document_embedder.documents

- sender: document_embedder.documents

receiver: writer.documents

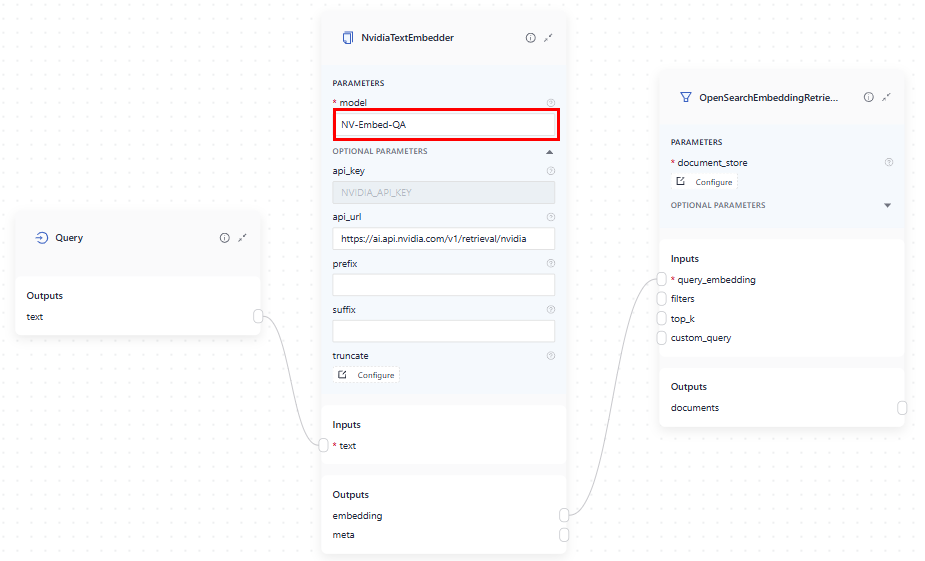

This is how to connect the components of the query pipeline in Pipeline Builder:

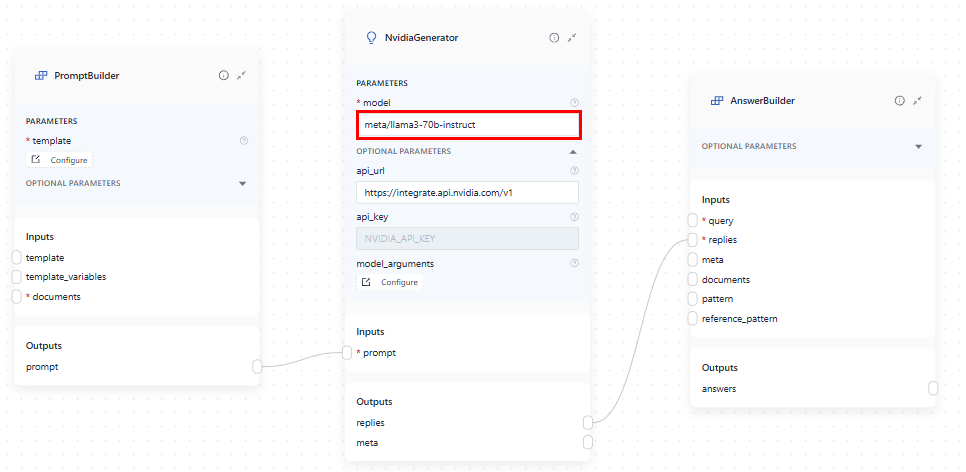

Then the generator:

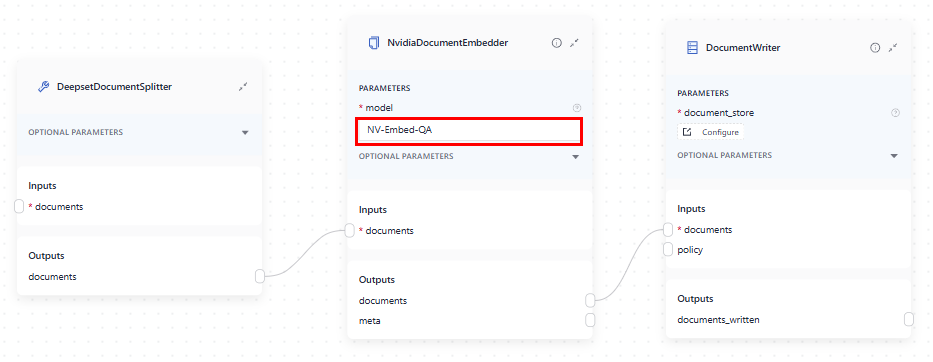

In indexes, the Document Embedder should use the same model as the Text Embedder in the query pipeline:

Was this page helpful?