DeepsetFileToBase64Image

Convert documents sourced from image files (PNG, JPG, JPEG, GIF) to base64-encoded images.

This component is deprecated. It will continue to work in your existing pipelines. You can replace it with the ImageFileToImageContent component.

Basic Information

- Type: deepset_cloud_custom_nodes.converters.file_to_image.DeepsetFileToBase64Image
- Components it can connect with:
  - Any component that outputs a list of Document objects sourced from JPG, JPEG, PNG, or GIF files.
  - Any component that accepts a list of Base64Image objects as input.
  - DeepsetFileDownloader: DeepsetFileToBase64Image can receive documents from DeepsetFileDownloader.
  - DeepsetAzureOpenAIVisionGenerator: This Generator can receive Base64Image objects to run visual question answering on them.

Inputs

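| Parameter | Type | Default | Description |
|---|---|---|---|
| documents | List[Document] | None | A list of documents with image information in their metadata. |
| sources | List[Union[str, Path, ByteStream]] | None | A list of sources as paths or ByteStream objects. |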

Outputs

| Parameter | Type | Description |
|---|---|---|
| documents | List[Document] | A list of text documents. |
| base64_images | List[Base64Image] | A list of base64-encoded images corresponding to the documents. |

Overview

DeepsetFileToBase64Image is a converter used in visual question answering pipelines to extract base64 images from the downloaded image files. These images are then sent to a visual Generator that can process them. It converts documents whose metadata contains a file_path pointing to the location of the image.
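To illustrate what a base64-encoded image is in this context, here is a minimal sketch that reads an image file and encodes its bytes using only Python's standard library. The function name `encode_image_to_base64` is hypothetical and not part of the component's API. The component's actual implementation may differ.

```python
import base64
from pathlib import Path


def encode_image_to_base64(file_path: str) -> str:
    """Read a file's raw bytes and return them as a base64-encoded ASCII string.

    Hypothetical helper for illustration only; it is not the component's code.
    """
    raw_bytes = Path(file_path).read_bytes()
    return base64.b64encode(raw_bytes).decode("ascii")
```

The resulting string can be decoded back to the original bytes with `base64.b64decode`, which is why it is a safe way to pass binary image data inside text-based requests.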

A document isn't converted if:

- The file_path doesn't exist in the metadata.
- The file path doesn't start with the expected root path.
- The file path doesn't end with .png, .gif, .jpg, or .jpeg.
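The three conditions above can be sketched as a small check. This is an illustration under stated assumptions, not the component's code: the function name `skip_reason`, the `expected_root` argument, and the case-insensitive extension match are all hypothetical.

```python
from pathlib import PurePosixPath
from typing import Optional

# Extensions listed in the documentation above.
SUPPORTED_SUFFIXES = {".png", ".gif", ".jpg", ".jpeg"}


def skip_reason(meta: dict, expected_root: str) -> Optional[str]:
    """Return why a document would be skipped, or None if it qualifies.

    Hypothetical helper; `expected_root` stands in for whatever root path
    the component expects, which the documentation does not specify.
    """
    file_path = meta.get("file_path")
    if not file_path:
        return "file_path is missing from the metadata"
    if not str(file_path).startswith(expected_root):
        return "file path does not start with the expected root path"
    # Assumption: extensions are compared case-insensitively.
    if PurePosixPath(str(file_path)).suffix.lower() not in SUPPORTED_SUFFIXES:
        return "file path does not end with a supported image extension"
    return None
```

A check like this, run over the documents before the converter, can help explain why fewer images than documents reach the Generator.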

Usage Example

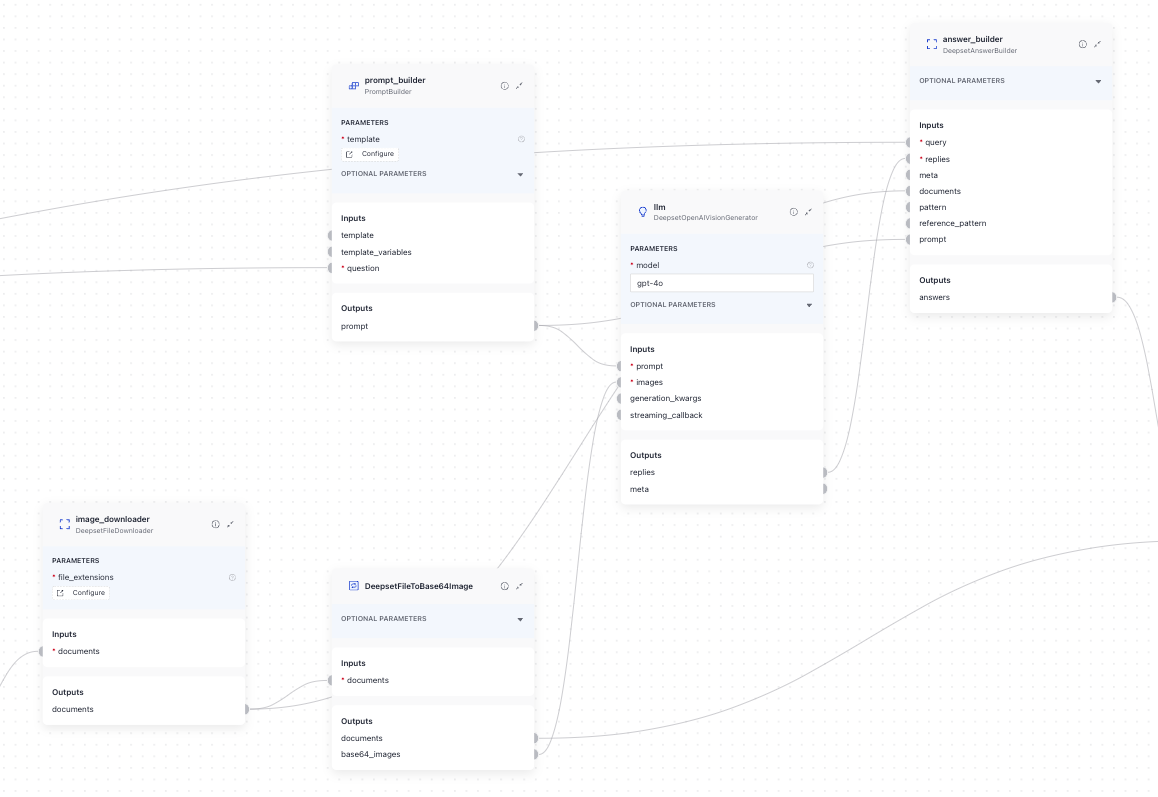

Using the Component in a Pipeline

This component is used in our visual question answering templates, where it receives documents from DeepsetFileDownloader and sends them to DeepsetAzureOpenAIVisionGenerator.

This is how you connect the components in Builder:

And here's the pipeline YAML:

components:
  bm25_retriever: # Selects the most similar documents from the document store
    type: haystack_integrations.components.retrievers.opensearch.bm25_retriever.OpenSearchBM25Retriever
    init_parameters:
      document_store:
        type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
        init_parameters:
          embedding_dim: 1024
      top_k: 20 # The number of results to return
      fuzziness: 0

  query_embedder:
    type: haystack.components.embedders.sentence_transformers_text_embedder.SentenceTransformersTextEmbedder
    init_parameters:
      normalize_embeddings: true
      model: "BAAI/bge-m3"
      tokenizer_kwargs:
        model_max_length: 1024

  embedding_retriever: # Selects the most similar documents from the document store
    type: haystack_integrations.components.retrievers.opensearch.embedding_retriever.OpenSearchEmbeddingRetriever
    init_parameters:
      document_store:
        type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
        init_parameters:
          embedding_dim: 1024
      top_k: 20 # The number of results to return

  document_joiner:
    type: haystack.components.joiners.document_joiner.DocumentJoiner
    init_parameters:
      join_mode: concatenate

  ranker:
    type: haystack.components.rankers.transformers_similarity.TransformersSimilarityRanker
    init_parameters:
      model: "BAAI/bge-reranker-v2-m3"
      top_k: 5
      model_kwargs:
        torch_dtype: "torch.float16"
      tokenizer_kwargs:
        model_max_length: 1024

  meta_field_grouping_ranker:
    type: haystack.components.rankers.meta_field_grouping_ranker.MetaFieldGroupingRanker
    init_parameters:
      group_by: file_id
      subgroup_by:
      sort_docs_by: split_id

  image_downloader:
    type: deepset_cloud_custom_nodes.augmenters.deepset_file_downloader.DeepsetFileDownloader
    init_parameters:
      file_extensions:
        - ".png"
        - ".jpeg"
        - ".jpg"

  prompt_builder:
    type: haystack.components.builders.prompt_builder.PromptBuilder
    init_parameters:
      template: |-
        Answer the question briefly and precisely based on the pictures.
        Give reasons for your answer.
        When answering the question only provide references within the answer text.
        Only use references in the form [NUMBER OF IMAGE] if you are using information from a image.
        For example, if the first image is used in the answer add [1] and if the second image is used then use [2], etc.
        Never name the images, but always enter a number in square brackets as a reference.
        Question: {{ question }}
        Answer:
      required_variables: "*"

  llm:
    type: deepset_cloud_custom_nodes.generators.openai_vision.DeepsetOpenAIVisionGenerator
    init_parameters:
      api_key: {"type": "env_var", "env_vars": ["OPENAI_API_KEY"], "strict": false}
      model: "gpt-4o"
      generation_kwargs:
        max_tokens: 650
        temperature: 0
        seed: 0

  answer_builder:
    type: deepset_cloud_custom_nodes.augmenters.deepset_answer_builder.DeepsetAnswerBuilder
    init_parameters:
      reference_pattern: acm

  DeepsetFileToBase64Image:
    type: deepset_cloud_custom_nodes.converters.file_to_image.DeepsetFileToBase64Image
    init_parameters:
      detail: auto

connections: # Defines how the components are connected
  - sender: bm25_retriever.documents
    receiver: document_joiner.documents
  - sender: query_embedder.embedding
    receiver: embedding_retriever.query_embedding
  - sender: embedding_retriever.documents
    receiver: document_joiner.documents
  - sender: document_joiner.documents
    receiver: ranker.documents
  - sender: ranker.documents
    receiver: meta_field_grouping_ranker.documents
  - sender: meta_field_grouping_ranker.documents
    receiver: image_downloader.documents
  - sender: prompt_builder.prompt
    receiver: llm.prompt
  - sender: image_downloader.documents
    receiver: answer_builder.documents
  - sender: prompt_builder.prompt
    receiver: answer_builder.prompt
  - sender: llm.replies
    receiver: answer_builder.replies
  - sender: DeepsetFileToBase64Image.base64_images
    receiver: llm.images
  - sender: image_downloader.documents
    receiver: DeepsetFileToBase64Image.documents

inputs: # Define the inputs for your pipeline
  query: # These components will receive the query as input
    - "bm25_retriever.query"
    - "query_embedder.text"
    - "ranker.query"
    - "prompt_builder.question"
    - "answer_builder.query"
  filters: # These components will receive a potential query filter as input
    - "bm25_retriever.filters"
    - "embedding_retriever.filters"

outputs: # Defines the output of your pipeline
  documents: "DeepsetFileToBase64Image.documents" # The output of the pipeline is the retrieved documents
  answers: "answer_builder.answers" # The output of the pipeline is the generated answers

max_runs_per_component: 100

metadata: {}

Parameters

Init Parameters

These are the parameters you can configure in Pipeline Builder:

| Parameter | Type | Default | Description |
|---|---|---|---|
| detail | Literal['auto', 'low', 'high'] | auto | Controls how the model processes the image and generates its textual understanding. By default, the model uses the auto setting, which checks the image input size and decides whether to use the low or high setting. See the OpenAI documentation. |
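For context, the sketch below shows where a `detail` value sits in an image content part of the OpenAI Chat Completions format, next to a base64 data URL. It is only an illustration: the helper name `build_image_part` is hypothetical, and how this component and the Generator pass `detail` along internally is an assumption.

```python
def build_image_part(base64_image: str, mime_type: str = "image/png", detail: str = "auto") -> dict:
    """Build an image content part carrying a base64 data URL and a detail level.

    Hypothetical helper for illustration; not part of the component's API.
    """
    if detail not in ("auto", "low", "high"):
        raise ValueError("detail must be one of 'auto', 'low', or 'high'")
    return {
        "type": "image_url",
        "image_url": {
            # The image travels inline as a data URL instead of a remote link.
            "url": f"data:{mime_type};base64,{base64_image}",
            "detail": detail,
        },
    }
```

Choosing `low` generally trades visual fidelity for lower token usage, while `high` does the opposite. `auto` leaves the decision to the model.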

Run Method Parameters

These are the parameters you can configure for the component's run() method. This means you can pass these parameters at query time through the API, in Playground, or when running a job. For details, see Modify Pipeline Parameters at Query Time.

| Parameter | Type | Default | Description |
|---|---|---|---|
| documents | List[Document] | None | A list of documents with image information in their metadata. The expected metadata is: `meta = {"file_path": str}`. If this metadata is not present, the document is skipped and the component shows a warning message. |
| sources | List[Union[str, Path, ByteStream]] | None | A list of sources as paths or ByteStream objects. |
