Trace with Langfuse

Monitor your Haystack Enterprise Platform pipelines with Langfuse.

About This Task

Langfuse is an observability and tracing tool for complex AI workflows. It captures detailed traces of your pipeline operations, making it easier to debug, monitor, and improve your applications.

The Haystack Enterprise Platform integrates with Langfuse through the LangfuseConnector component. To get started, connect the platform to Langfuse with your Langfuse public and secret API keys. Then add LangfuseConnector to your pipelines to start collecting traces and sending them to Langfuse.

Prerequisites

- You need the Langfuse public and secret API keys. You can obtain them from your Langfuse project settings. Create new keys, because the secret key can be viewed and copied only once, during creation.

- Create two secrets:

  - One for the Langfuse secret key. Call this secret `LANGFUSE_SECRET_KEY`.

  - One for the Langfuse public key. Call this secret `LANGFUSE_PUBLIC_KEY`.

For more information on secrets, see Add Secrets and Secrets and Integrations.
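The secret names matter because the pipeline YAML refers to the secrets by name. The fragment below is copied from the LangfuseConnector entry in the example on this page and shows how its `init_parameters` reference the two secrets as environment variables. As a hedged note on general Haystack behavior, not something this page states: `strict: false` is understood to mean that resolving an unset variable yields no value instead of raising an error, so a misspelled secret name may fail silently.

```yaml
# Fragment of the LangfuseConnector init_parameters (not a complete pipeline).
# The names under env_vars must match the secret names you created.
public_key:
  type: env_var
  env_vars:
    - LANGFUSE_PUBLIC_KEY
  strict: false
secret_key:
  type: env_var
  env_vars:
    - LANGFUSE_SECRET_KEY
  strict: false
```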

Use Langfuse

- Connect Haystack Enterprise Platform to Langfuse by creating two Langfuse secrets:

  - In Haystack Enterprise Platform, click your profile icon in the top right corner and choose Settings.

  - Depending on the scope of the secrets, go to Workspace or Organization secrets.

  - Click Create Secret.

  - Copy the secret key from your Langfuse project and paste it into the Value field.

  - Type `LANGFUSE_SECRET_KEY` as the secret key and save the secret.

  - Click Create Secret.

  - Copy the public key from your Langfuse project and paste it into the Value field.

  - Type `LANGFUSE_PUBLIC_KEY` as the secret key and save the secret.

- Add the `LangfuseConnector` component to the pipeline you want to trace but do not connect it to any other component.

- Set the `name` parameter in `LangfuseConnector` to your pipeline name and save the pipeline.

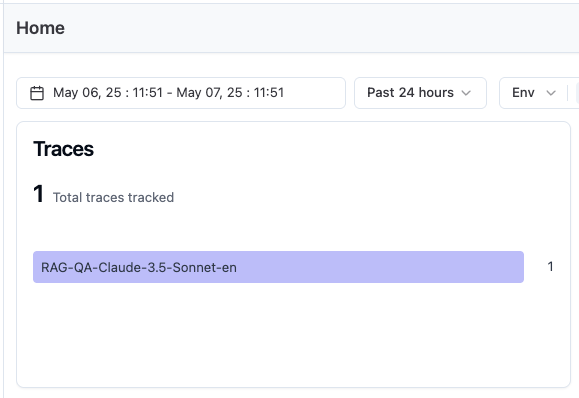

When you run a query with this pipeline, its traces will appear in your Langfuse project under Traces.
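In YAML terms, the steps above come down to one extra entry under `components` and no change to `connections`. The following is a minimal sketch, not a complete pipeline: the component type and parameters are taken from the example on this page, while `my-pipeline-name` is a hypothetical placeholder for your own pipeline name.

```yaml
components:
  # ... your existing components stay unchanged ...

  LangfuseConnector:
    type: haystack_integrations.components.connectors.langfuse.langfuse_connector.LangfuseConnector
    init_parameters:
      name: my-pipeline-name # hypothetical placeholder; use your pipeline name
      public: false
      public_key:
        type: env_var
        env_vars:
          - LANGFUSE_PUBLIC_KEY
        strict: false
      secret_key:
        type: env_var
        env_vars:
          - LANGFUSE_SECRET_KEY
        strict: false

connections:
  # ... your existing connections stay unchanged ...
  # No sender or receiver entry references LangfuseConnector.
```

Because the connector has no entries in `connections`, adding or removing it should not alter how data flows between the other components.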

Example

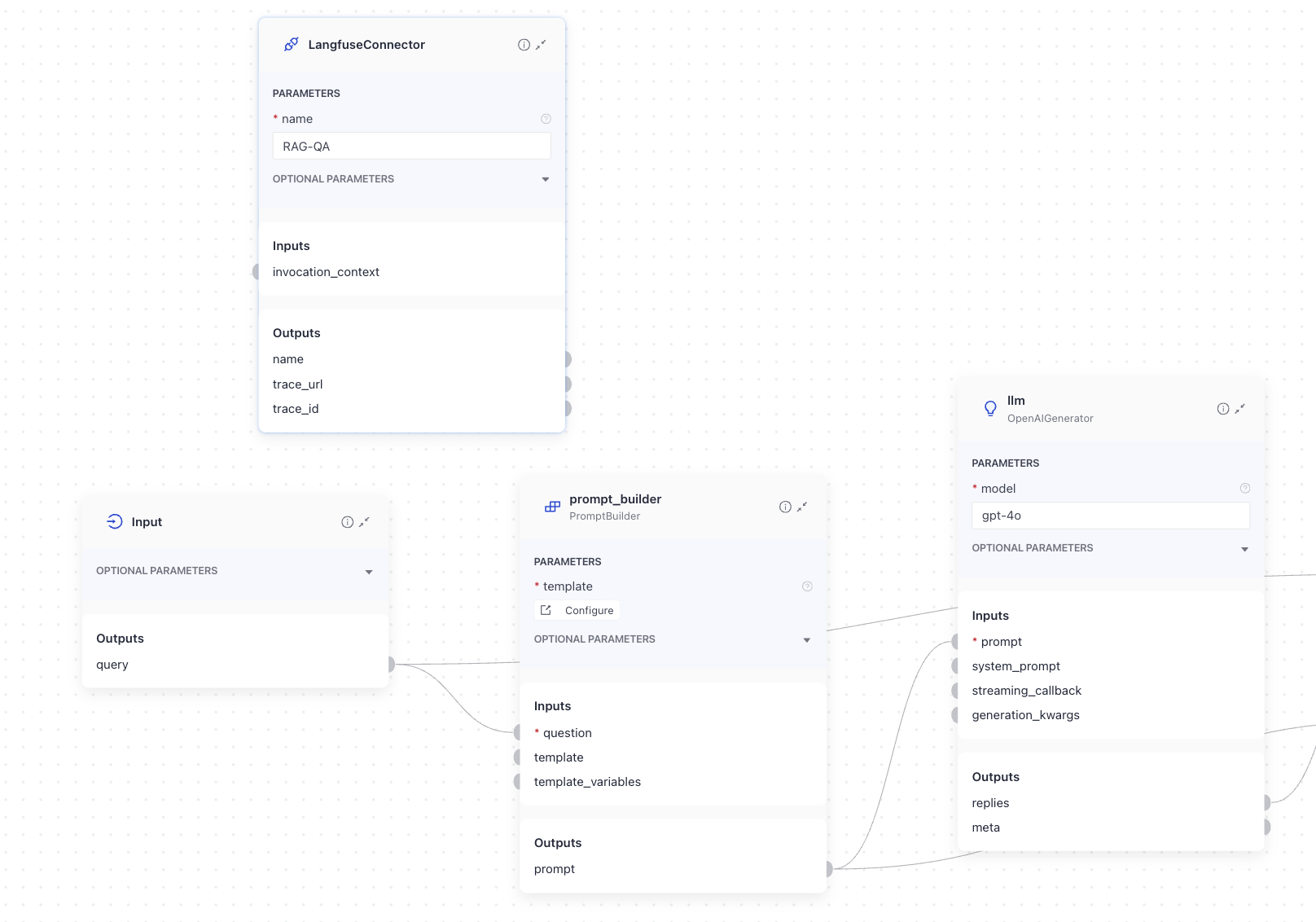

This is an example of a RAG pipeline with Langfuse tracing enabled. LangfuseConnector is in the pipeline but it's not connected to any other component:

YAML configuration

components:
  bm25_retriever: # Selects the most similar documents from the document store
    type: haystack_integrations.components.retrievers.opensearch.bm25_retriever.OpenSearchBM25Retriever
    init_parameters:
      document_store:
        type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
        init_parameters:
          hosts:
          index: 'Standard-Index-English'
          max_chunk_bytes: 104857600
          embedding_dim: 768
          return_embedding: false
          method:
          mappings:
          settings:
          create_index: true
          http_auth:
          use_ssl:
          verify_certs:
          timeout:
      top_k: 20 # The number of results to return
      fuzziness: 0

  query_embedder:
    type: deepset_cloud_custom_nodes.embedders.nvidia.text_embedder.DeepsetNvidiaTextEmbedder
    init_parameters:
      normalize_embeddings: true
      model: intfloat/e5-base-v2

  embedding_retriever: # Selects the most similar documents from the document store
    type: haystack_integrations.components.retrievers.opensearch.embedding_retriever.OpenSearchEmbeddingRetriever
    init_parameters:
      document_store:
        type: haystack_integrations.document_stores.opensearch.document_store.OpenSearchDocumentStore
        init_parameters:
          hosts:
          index: 'Standard-Index-English'
          max_chunk_bytes: 104857600
          embedding_dim: 768
          return_embedding: false
          method:
          mappings:
          settings:
          create_index: true
          http_auth:
          use_ssl:
          verify_certs:
          timeout:
      top_k: 20 # The number of results to return

  document_joiner:
    type: haystack.components.joiners.document_joiner.DocumentJoiner
    init_parameters:
      join_mode: concatenate

  ranker:
    type: deepset_cloud_custom_nodes.rankers.nvidia.ranker.DeepsetNvidiaRanker
    init_parameters:
      model: intfloat/simlm-msmarco-reranker
      top_k: 8

  meta_field_grouping_ranker:
    type: haystack.components.rankers.meta_field_grouping_ranker.MetaFieldGroupingRanker
    init_parameters:
      group_by: file_id
      subgroup_by:
      sort_docs_by: split_id

  llm:
    type: haystack_integrations.components.generators.amazon_bedrock.chat.chat_generator.AmazonBedrockChatGenerator
    init_parameters:
      model: anthropic.claude-3-5-sonnet-20241022-v2:0
      aws_region_name: us-west-2
      max_length: 650
      temperature: 0

  answer_builder:
    type: deepset_cloud_custom_nodes.augmenters.deepset_answer_builder.DeepsetAnswerBuilder
    init_parameters:
      reference_pattern: acm

  LangfuseConnector:
    type: haystack_integrations.components.connectors.langfuse.langfuse_connector.LangfuseConnector
    init_parameters:
      name: RAG-QA-Claude-3.5-Sonnet-en
      public: false
      public_key:
        type: env_var
        env_vars:
          - LANGFUSE_PUBLIC_KEY
        strict: false
      secret_key:
        type: env_var
        env_vars:
          - LANGFUSE_SECRET_KEY
        strict: false
      httpx_client:
      span_handler:

  ChatPromptBuilder:
    type: haystack.components.builders.chat_prompt_builder.ChatPromptBuilder
    init_parameters:
      template: "You are a technical expert.\nYou answer questions truthfully based on provided documents.\nIf the answer exists in several documents, summarize them.\nIgnore documents that don't contain the answer to the question.\nOnly answer based on the documents provided. Don't make things up.\nIf no information related to the question can be found in the document, say so.\nAlways use references in the form [NUMBER OF DOCUMENT] when using information from a document, e.g. [3] for Document [3] .\nNever name the documents, only enter a number in square brackets as a reference.\nThe reference must only refer to the number that comes in square brackets after the document.\nOtherwise, do not use brackets in your answer and reference ONLY the number of the document without mentioning the word document.\n\nThese are the documents:\n{%- if documents|length > 0 %}\n{%- for document in documents %}\nDocument [{{ loop.index }}] :\nName of Source File: {{ document.meta.file_name }}\n{{ document.content }}\n{% endfor -%}\n{%- else %}\nNo relevant documents found.\nRespond with \"Sorry, no matching documents were found, please adjust the filters or try a different question.\"\n{% endif %}\n\nQuestion: {{ question }}\nAnswer:"
      required_variables:
      variables:

connections: # Defines how the components are connected
  - sender: bm25_retriever.documents
    receiver: document_joiner.documents
  - sender: query_embedder.embedding
    receiver: embedding_retriever.query_embedding
  - sender: embedding_retriever.documents
    receiver: document_joiner.documents
  - sender: document_joiner.documents
    receiver: ranker.documents
  - sender: ranker.documents
    receiver: meta_field_grouping_ranker.documents
  - sender: meta_field_grouping_ranker.documents
    receiver: answer_builder.documents
  - sender: llm.replies
    receiver: answer_builder.replies
  - sender: meta_field_grouping_ranker.documents
    receiver: ChatPromptBuilder.documents
  - sender: ChatPromptBuilder.prompt
    receiver: llm.messages

inputs: # Define the inputs for your pipeline
  query: # These components will receive the query as input
    - "bm25_retriever.query"
    - "query_embedder.text"
    - "ranker.query"
    - "answer_builder.query"
    - "ChatPromptBuilder.question"
  filters: # These components will receive a potential query filter as input
    - "bm25_retriever.filters"
    - "embedding_retriever.filters"

outputs: # Defines the output of your pipeline
  documents: "meta_field_grouping_ranker.documents" # The output of the pipeline is the retrieved documents
  answers: "answer_builder.answers" # The output of the pipeline is the generated answers

max_runs_per_component: 100

metadata: {}
